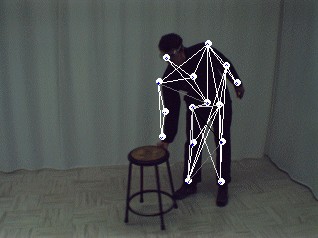

This work presents a vision system that tracks a 3D articulated human model from isolated features observed from multiple viewpoints. A generic model is instantiated by estimating its invariant elements (limb lengths) during tracking. The model provides feedback both to the estimation module, for filtering, and to the segmentation module, where it predicts each feature's position and size. Filtering is carried out with a Kalman filter whose numerical stability is improved by using Joseph's implementation. The robustness of this implementation is compared to that of the basic formulation on real sequences. Results demonstrate rapid convergence of the filtered parameters despite large observation variances.
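For reference, the two formulations compared above differ in how the state covariance is updated after a measurement. The notation below is the usual textbook one and is an assumption, since this page does not define symbols: $P_{k|k-1}$ is the predicted covariance, $K_k$ the Kalman gain, $H_k$ the observation matrix and $R_k$ the observation noise covariance. The basic formulation is

$$
P_{k|k} = (I - K_k H_k)\, P_{k|k-1}
$$

while the Joseph form is

$$
P_{k|k} = (I - K_k H_k)\, P_{k|k-1}\, (I - K_k H_k)^{\mathsf T} + K_k R_k K_k^{\mathsf T}
$$

The two expressions agree in exact arithmetic when $K_k$ is the optimal gain. The Joseph form is a sum of two symmetric positive semi-definite terms, so it keeps $P_{k|k}$ symmetric and positive semi-definite under rounding errors, which is the usual reason it is considered more numerically stable.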

All information available at the time of acquisition, e.g. the calibration and synchronization parameters of the cameras, is used to produce a 3D temporal description of a person from a multi-camera sequence. This high-level description includes limb lengths, pose and position.

The parameters of a skeletal representation – the model – of a person are estimated in 3D space. Feedback from the model in each image allows a simple segmentation to isolate feature points on the subject. The invariant elements of the description – the limb lengths – are filtered to produce the final result. An approach based on the extended Kalman filter is used for this purpose.
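As a rough illustration of filtering an invariant element, the sketch below estimates a single limb length from noisy 3D joint positions. It is a simplified, hypothetical example and not the system's implementation: it uses a scalar linear Kalman filter rather than the extended Kalman filter mentioned above, it assumes the length is observed directly as the distance between two reconstructed joints, and the names `joseph_update` and `filter_limb_length` are invented for this example.

```python
import math


def joseph_update(x, p, z, r):
    """One scalar Kalman measurement update (H = 1) using the Joseph form.

    x, p : prior estimate of the limb length and its variance
    z, r : observed length and the observation variance
    """
    k = p / (p + r)                          # Kalman gain
    x_new = x + k * (z - x)                  # corrected length estimate
    p_new = (1.0 - k) ** 2 * p + k ** 2 * r  # Joseph-form variance update
    return x_new, p_new


def filter_limb_length(joint_pairs, x0, p0, r):
    """Filter a constant limb length from a sequence of 3D joint pairs.

    The length is treated as invariant, so there is no prediction step
    and no process noise (an assumption of this sketch).
    """
    x, p = x0, p0
    for joint_a, joint_b in joint_pairs:
        z = math.dist(joint_a, joint_b)      # observed length in this frame
        x, p = joseph_update(x, p, z, r)
    return x, p


if __name__ == "__main__":
    # Hypothetical elbow/wrist positions (metres) over three frames.
    frames = [
        ((0.00, 0.00, 1.00), (0.29, 0.00, 1.00)),
        ((0.10, 0.05, 1.00), (0.10, 0.36, 1.00)),
        ((0.20, 0.10, 1.10), (0.20, 0.10, 0.80)),
    ]
    length, variance = filter_limb_length(frames, x0=0.5, p0=1.0, r=0.01)
    print(f"estimated length: {length:.3f} m, variance: {variance:.5f}")
```

Starting from a deliberately poor initial guess with a large initial variance lets the first few observations dominate, which is the behaviour one would expect when instantiating a generic model on a new subject.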

The 3D description of a person is generally obtained by fitting a model specific to each individual. The challenge here is to propose a method that uses a generic model of a person whose invariant elements are estimated from observation. Ensuring that the segmentation is robust to occlusions is a further challenge.

More details:

- Project page

- COGNOIS

- Acquisition system

- Camera calibration videos (MPEG): internal parameters, external parameters

Results (MPEG):

- Multiple cameras, arm: output;

- Multiple cameras, whole body, seq. #1: output;

- Multiple cameras, whole body, seq. #2: output;

- Multiple cameras, whole body, seq. #3: output;

- Single camera, whole body, seq. #3: output.

Publications:

Stéphane Drouin, Patrick Hébert and Marc Parizeau. Simultaneous Tracking and Estimation of a Skeletal Model for Monitoring Human Motion. In 16th International Conference on Vision Interface, pp. 81-88, June 11-13 2003. (PDF)

Stéphane Drouin, Régis Poulin, Patrick Hébert and Marc Parizeau. Monitoring Human Activities: Flexible Calibration of a Wide Area System of Synchronized Cameras. In 16th International Conference on Vision Interface, pp. 49-56, June 11-13 2003. (PDF)