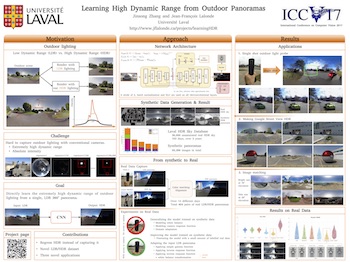

Learning High Dynamic Range from Outdoor Panoramas

Outdoor lighting has extremely high dynamic range. This makes the process of capturing outdoor environment maps notoriously challenging since special equipment must be used. In this work, we propose an alternative approach. We first capture lighting with a regular, LDR omnidirectional camera, and aim to recover the HDR after the fact via a novel, learning-based inverse tonemapping method. We propose a deep autoencoder framework which regresses linear, high dynamic range data from non-linear, saturated, low dynamic range panoramas. We validate our method through a wide set of experiments on synthetic data, as well as on a novel dataset of real photographs with ground truth. Our approach finds applications in a variety of settings, ranging from outdoor light capture to image matching.