Learning High Dynamic Range from Outdoor Panoramas (#1954) — Supplementary Material

This document extends the explanations, results, and analysis presented in the main paper. It provides additional qualitative results on synthetic test images, predictions over the course of a day on the synthetic dataset, LDR/HDR pairs from the real dataset, and the quantitative comparison on the real dataset with examples. We also show more examples of image-based lighting (IBL) renders with Theta S panoramas and Google Street View images. Finally, we show screenshots of the virtual 3D model used to generate the synthetic dataset.

Table of Contents

- Synthetic Result

- Real data (extends fig. 6)

- Quantitative result on real data (extends fig. 7)

- Applications

- Rendering results using a larger object

- Virtual city model

1. Synthetic Result

In this section, we present some additional examples from the synthetic test dataset, extending the results in sec. 4.

1.1 Synthetic prediction

The following images extend fig. 4; each row shows a different example. The first column shows the prediction from our network and its render, the second column shows the ground truth panorama and its render, and the last column shows the linear LDR image used as the input to our approach. As in fig. 4, each panorama is shown at two different exposures. For display purposes, a gamma tone mapping is applied to the panoramas and a global tone mapping is applied to the renders.

Columns: Ours | Ground truth | LDR
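The display step described for these figures (each panorama shown at two exposures with a gamma tone mapping, and a global tone mapping on the renders) can be sketched as follows. This is a minimal sketch under assumptions: the gamma value of 2.2 and the use of Reinhard's global operator L / (1 + L) are our choices, since the exact operators are not specified here. The function names are hypothetical.

```python
import numpy as np

def expose_and_gamma(hdr, exposure_stops=0.0, gamma=2.2):
    """Display a linear HDR panorama at a given exposure: scale by
    2**exposure_stops, clip to [0, 1], then gamma-encode (gamma is assumed)."""
    scaled = np.asarray(hdr, dtype=float) * (2.0 ** exposure_stops)
    return np.clip(scaled, 0.0, 1.0) ** (1.0 / gamma)

def global_tonemap(render, gamma=2.2):
    """Stand-in global operator for renders: Reinhard's L / (1 + L) applied
    per channel, followed by gamma encoding. Output stays below 1."""
    render = np.asarray(render, dtype=float)
    return (render / (1.0 + render)) ** (1.0 / gamma)

hdr = np.array([[0.05, 0.5, 2.0, 5000.0]])    # toy linear radiance values
bright = expose_and_gamma(hdr, 0.0)            # sky detail visible, sun clipped
dark = expose_and_gamma(hdr, -13.0)            # everything dark except the sun
```

Showing two exposures is needed because no single clipped exposure can display both the sky detail and the sun's peak.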

1.2 Full day prediction

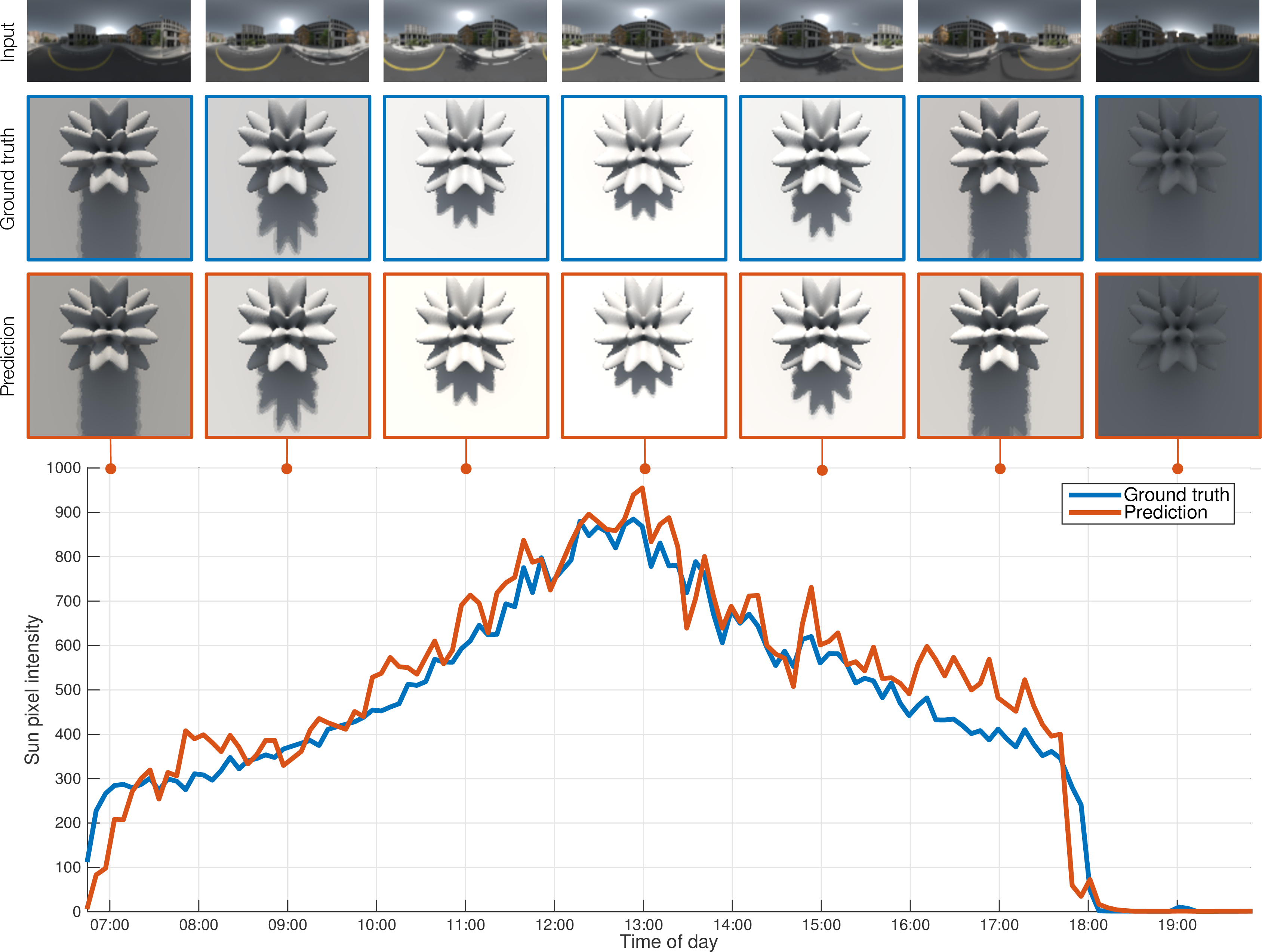

This part extends fig. 5, which shows the prediction over the course of one day. The sun intensity predicted by our network (orange) follows the ground truth sun intensity (blue). Above each plot we show the input LDR panorama (top), a render with the ground truth (middle), and a render with the predicted HDR (bottom). Our method is relatively stable over time, even though it processes one panorama at a time.

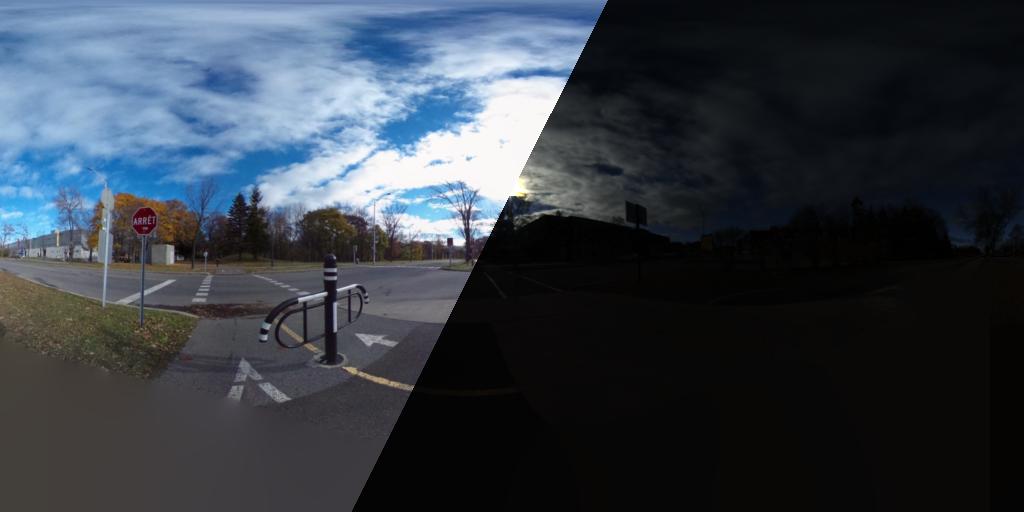

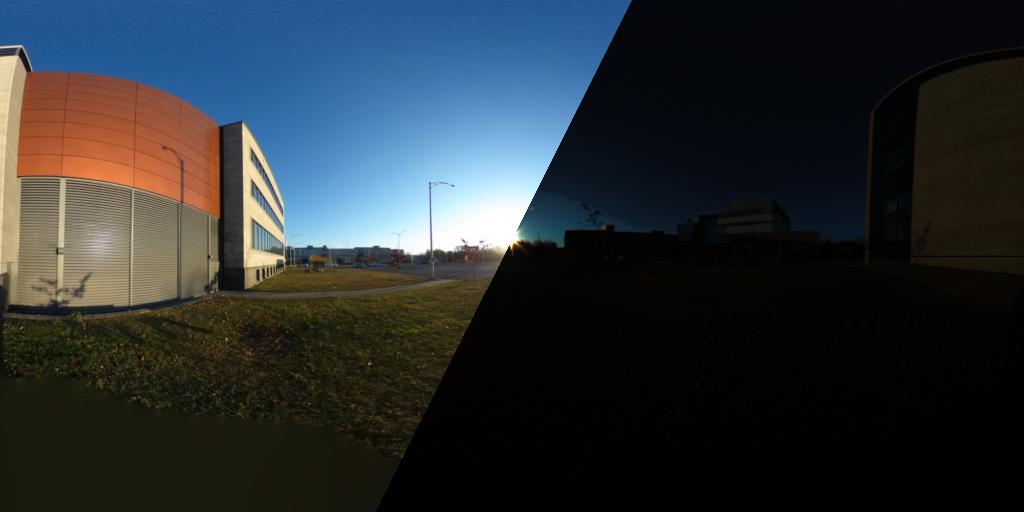

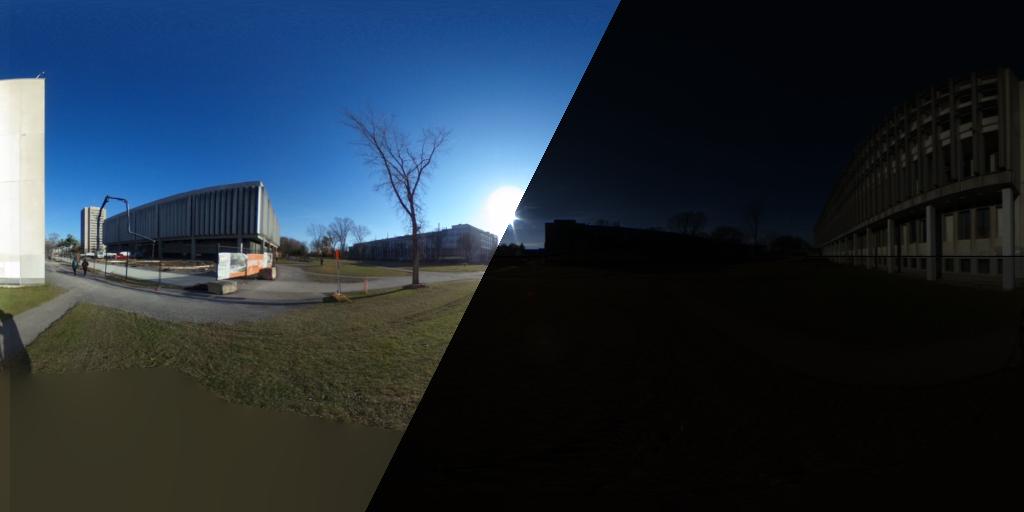

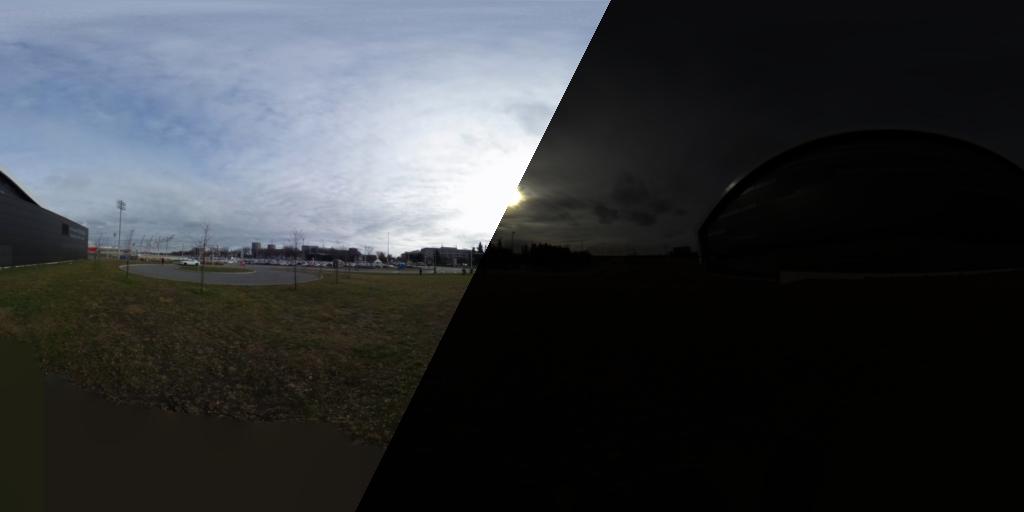

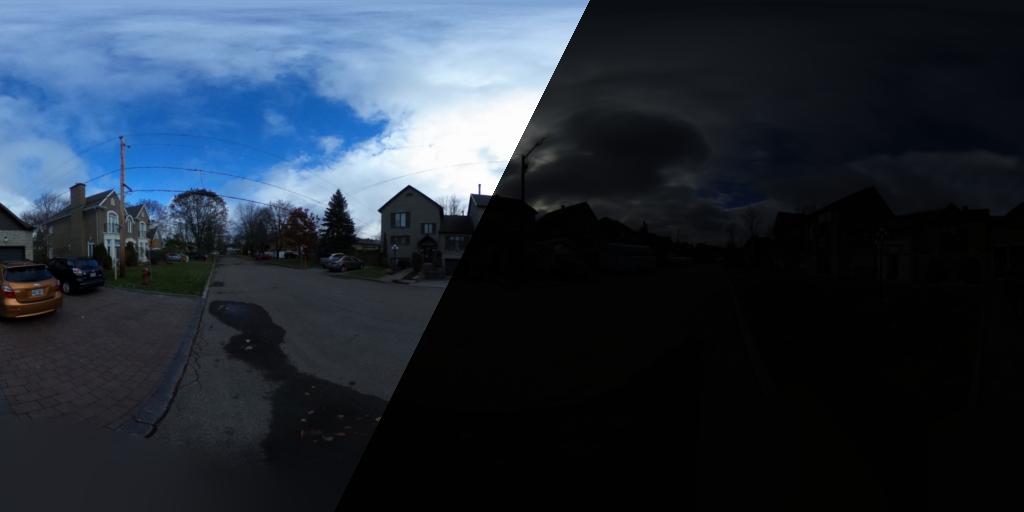

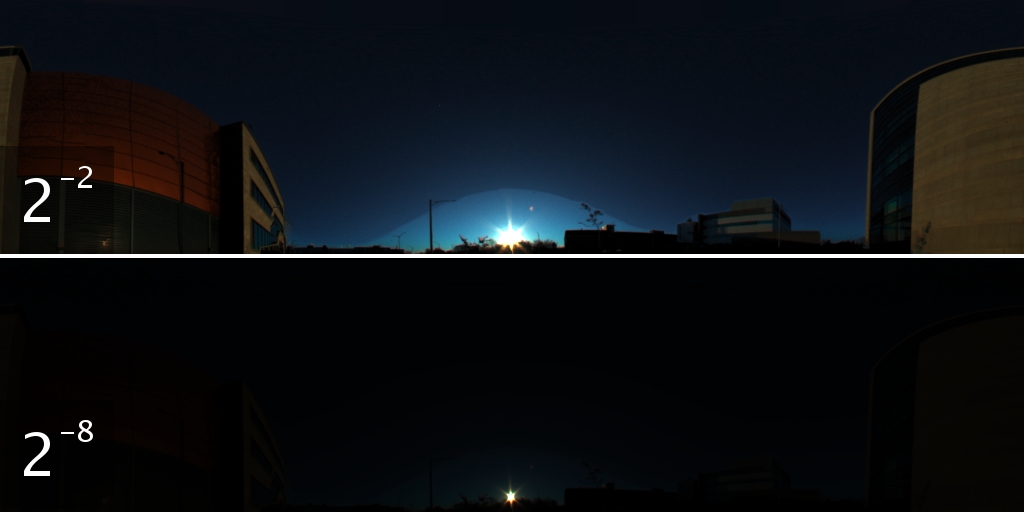

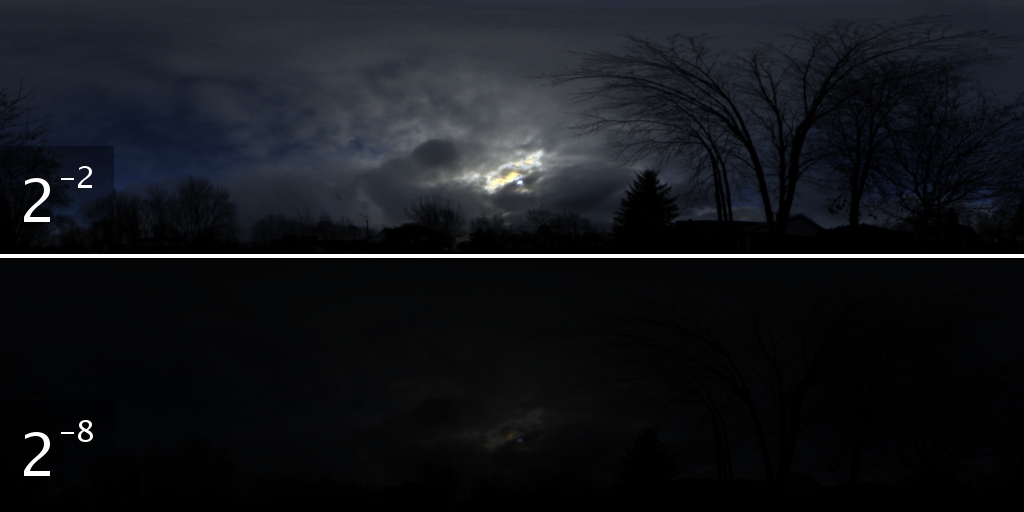

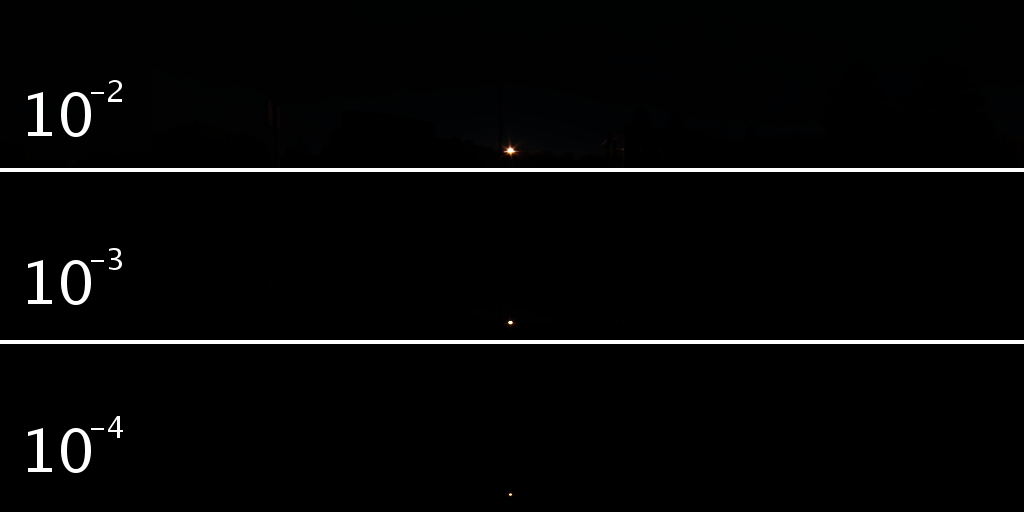

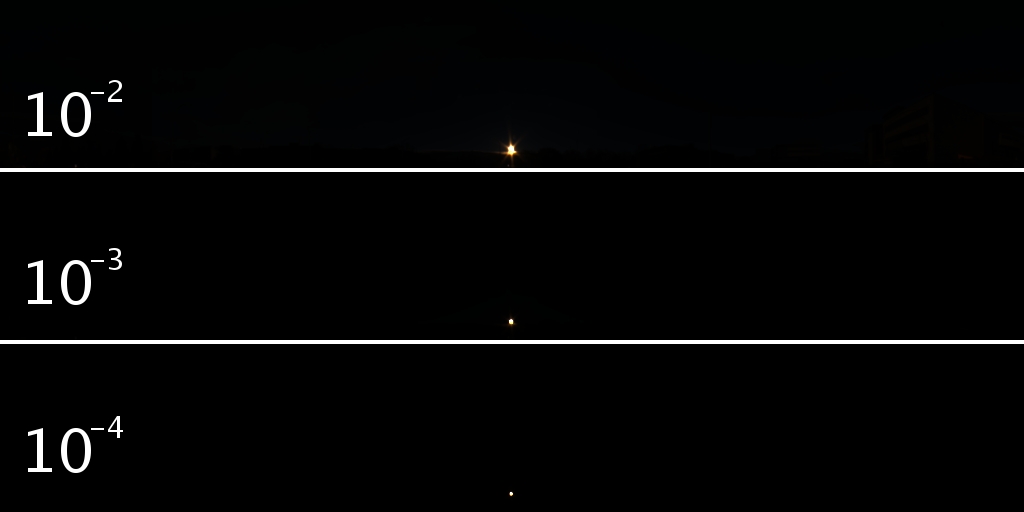

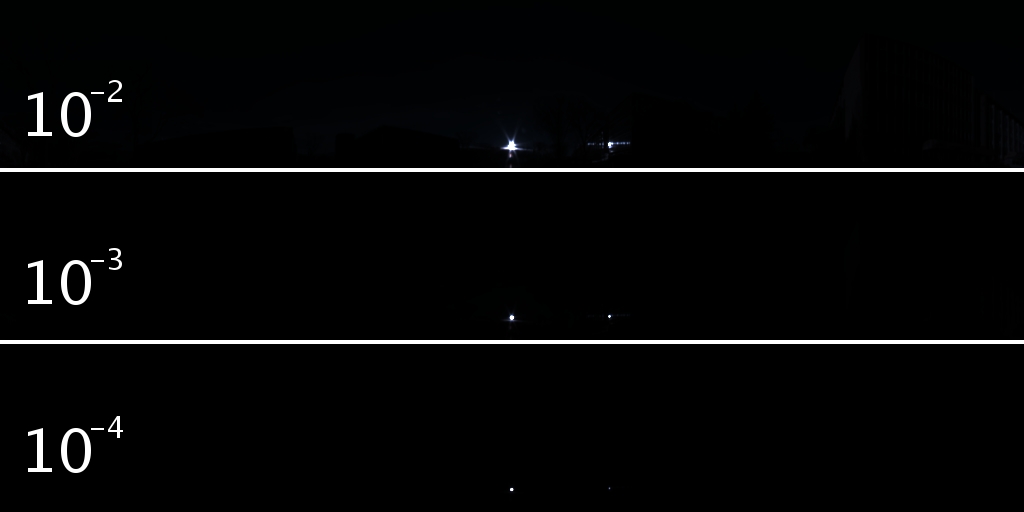

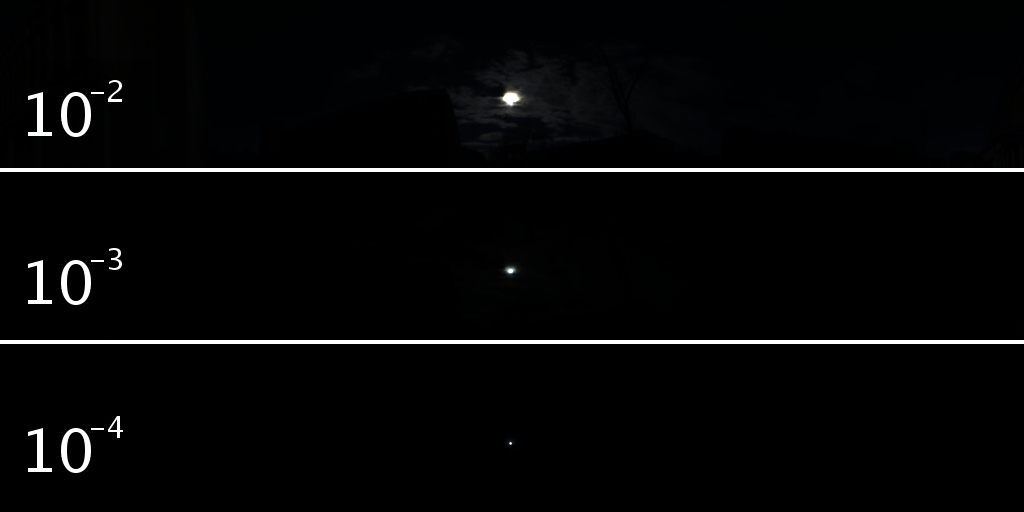

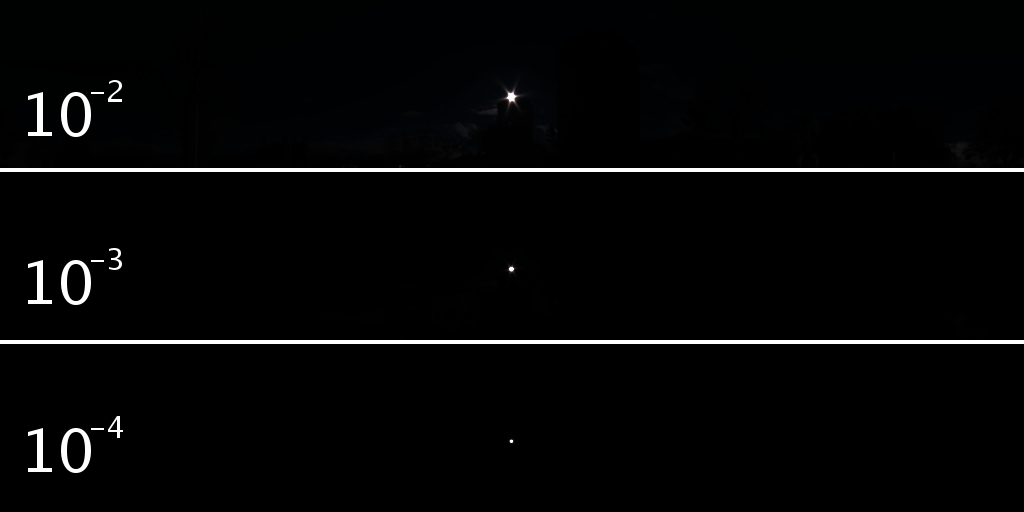

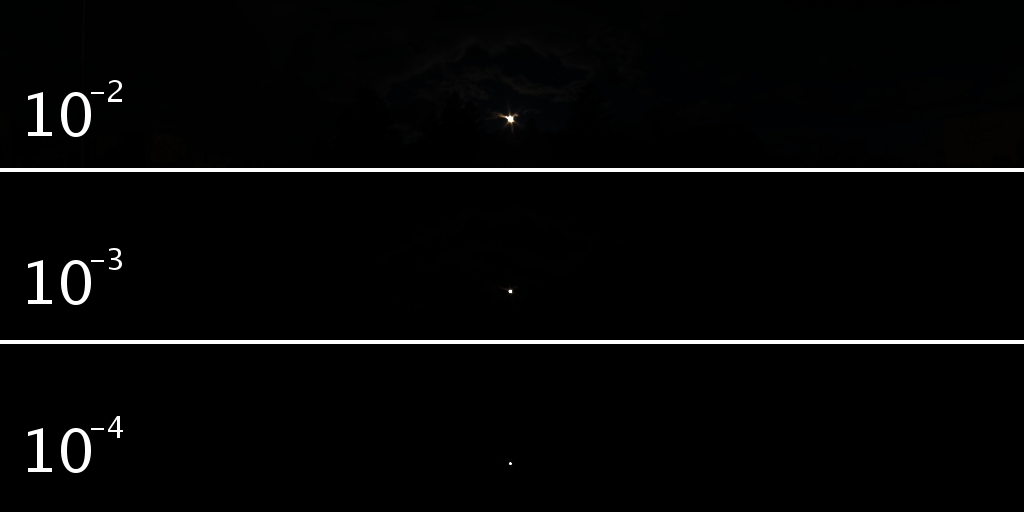

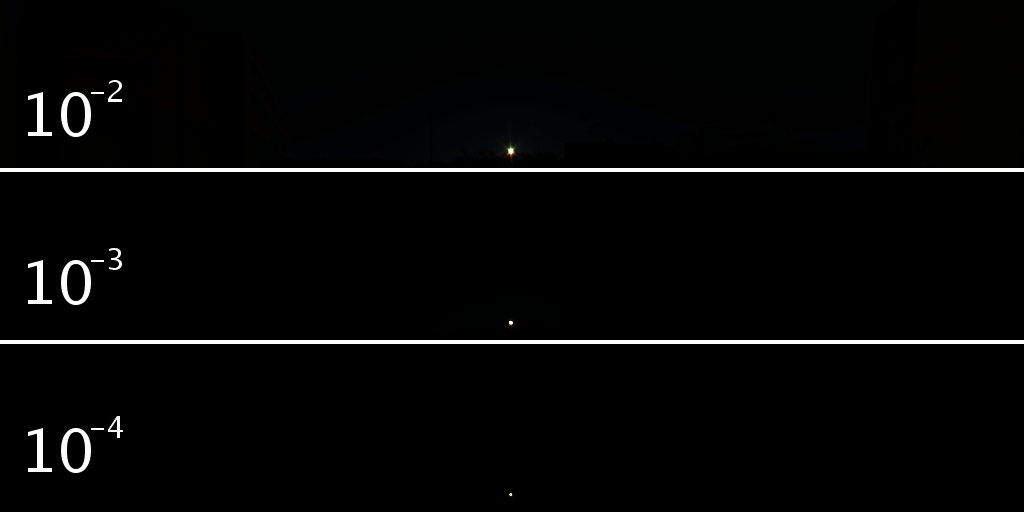

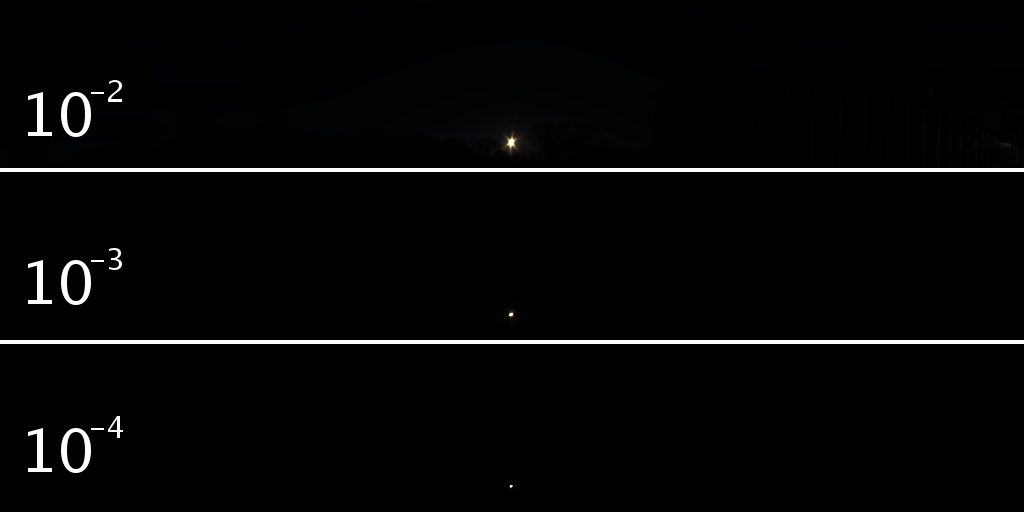

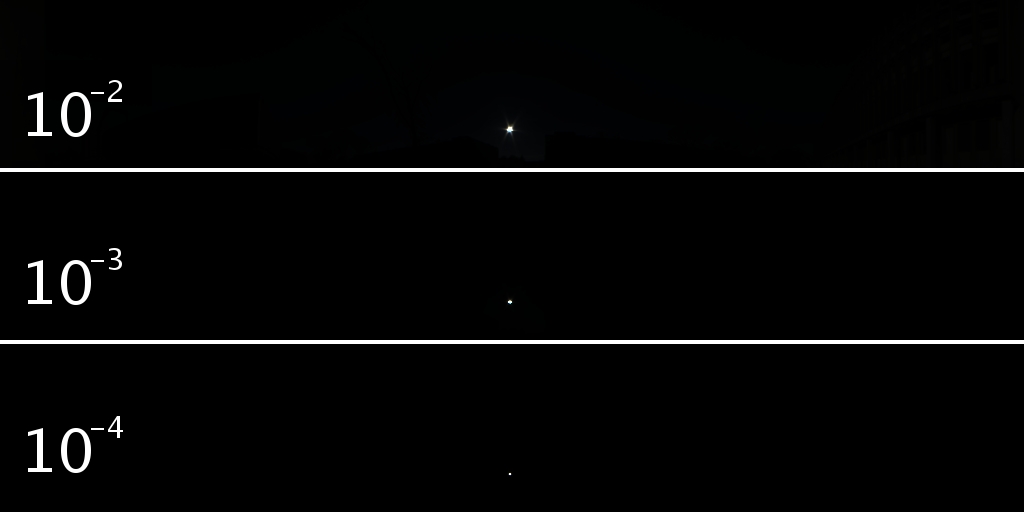

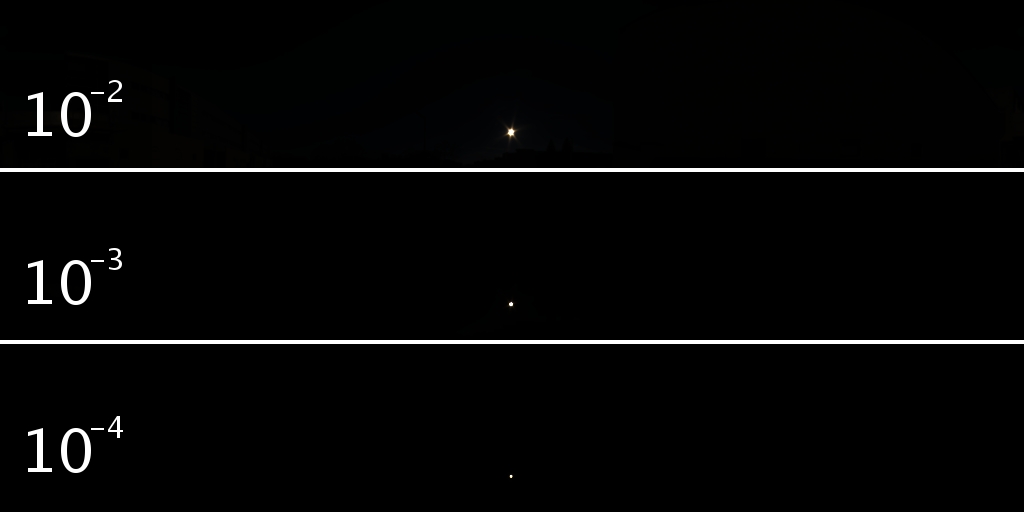

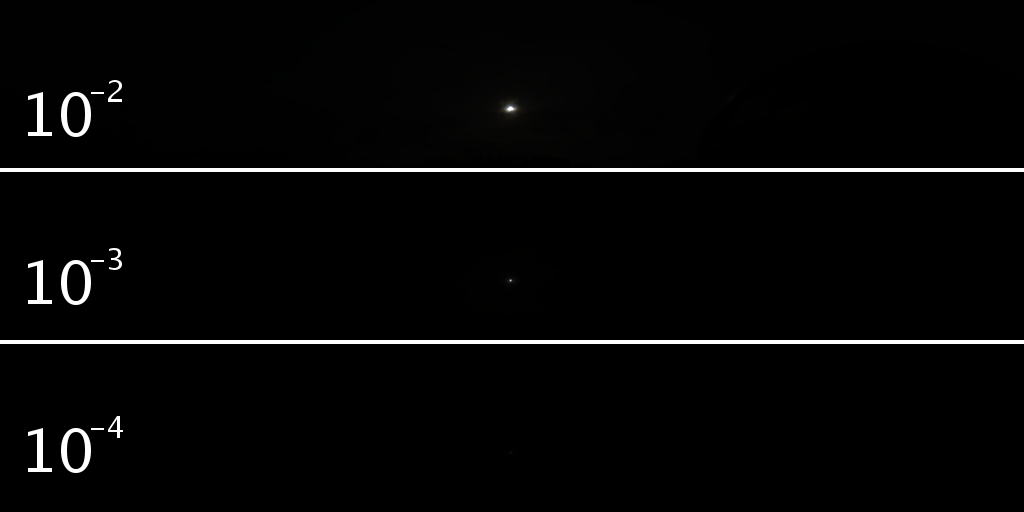

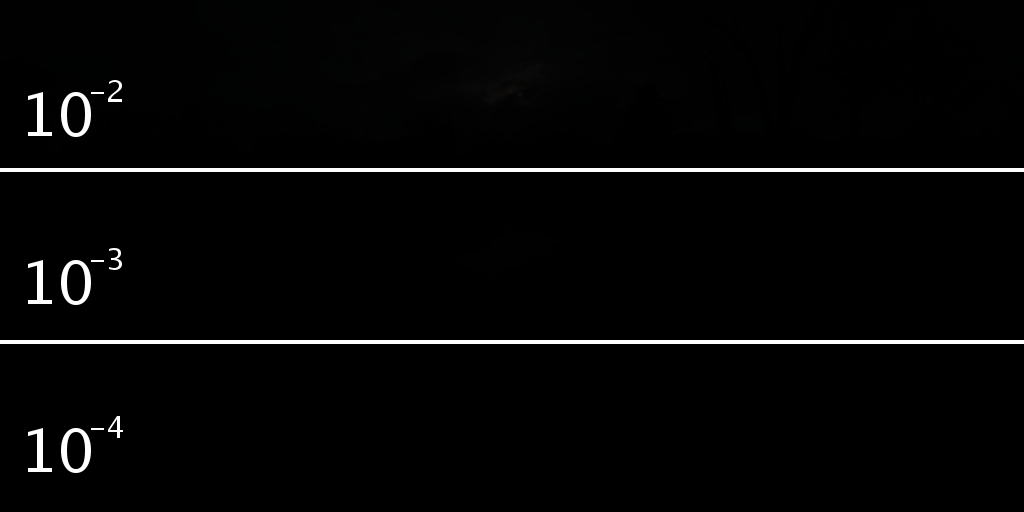
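The full-day plots show that predictions made independently for each panorama remain relatively stable over time. The sketch below is one simple way to quantify this. It is a hypothetical diagnostic, not a measure from the paper. It compares the mean frame-to-frame change in log10 sun intensity of the prediction with that of the ground truth, and also reports the mean absolute log10 error. The function name and the toy values are illustrative only.

```python
import numpy as np

def log_intensity_stats(pred, gt):
    """Compare a day-long sequence of predicted sun intensities with ground truth.

    Returns (mean |log10 error|, mean |frame-to-frame log10 change| of the
    prediction, same quantity for the ground truth)."""
    lp = np.log10(np.asarray(pred, dtype=float))
    lg = np.log10(np.asarray(gt, dtype=float))
    err = np.mean(np.abs(lp - lg))
    jitter_pred = np.mean(np.abs(np.diff(lp)))   # how much the prediction jumps
    jitter_gt = np.mean(np.abs(np.diff(lg)))     # how much the true sun changes
    return err, jitter_pred, jitter_gt

gt = [1e3, 1e4, 1e4, 1e3]      # toy values, not data from the paper
pred = [2e3, 1e4, 5e3, 1e3]
err, jitter_pred, jitter_gt = log_intensity_stats(pred, gt)
```

If the prediction's frame-to-frame change stays close to the ground truth's, the prediction does not flicker, even though no temporal information is used.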

2. LDR/HDR pairs from real dataset

More examples of LDR/HDR pairs from our novel real dataset are shown below. For each case, the first column shows the LDR panorama captured by the Ricoh Theta S (left) and the corresponding HDR panorama captured by the Canon 5D Mark III (right, shown at a different exposure to illustrate the high dynamic range). The second and third columns show the full dynamic range of the cropped sky hemisphere at different exposures. Notice the extreme dynamic range of outdoor panoramas: the sun can be roughly 10,000 times brighter than other regions of the panorama, so accurately representing the sun intensity is key to capturing outdoor lighting.

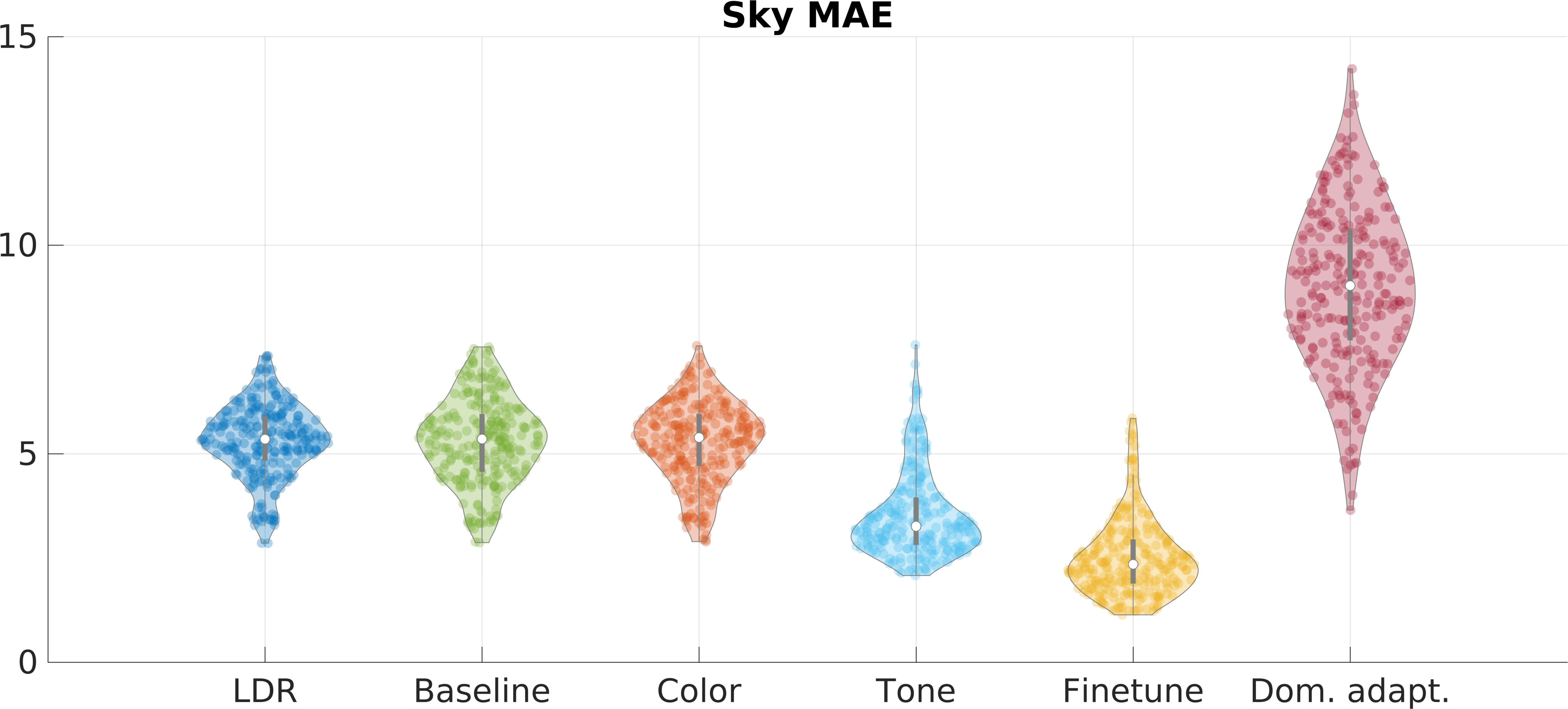

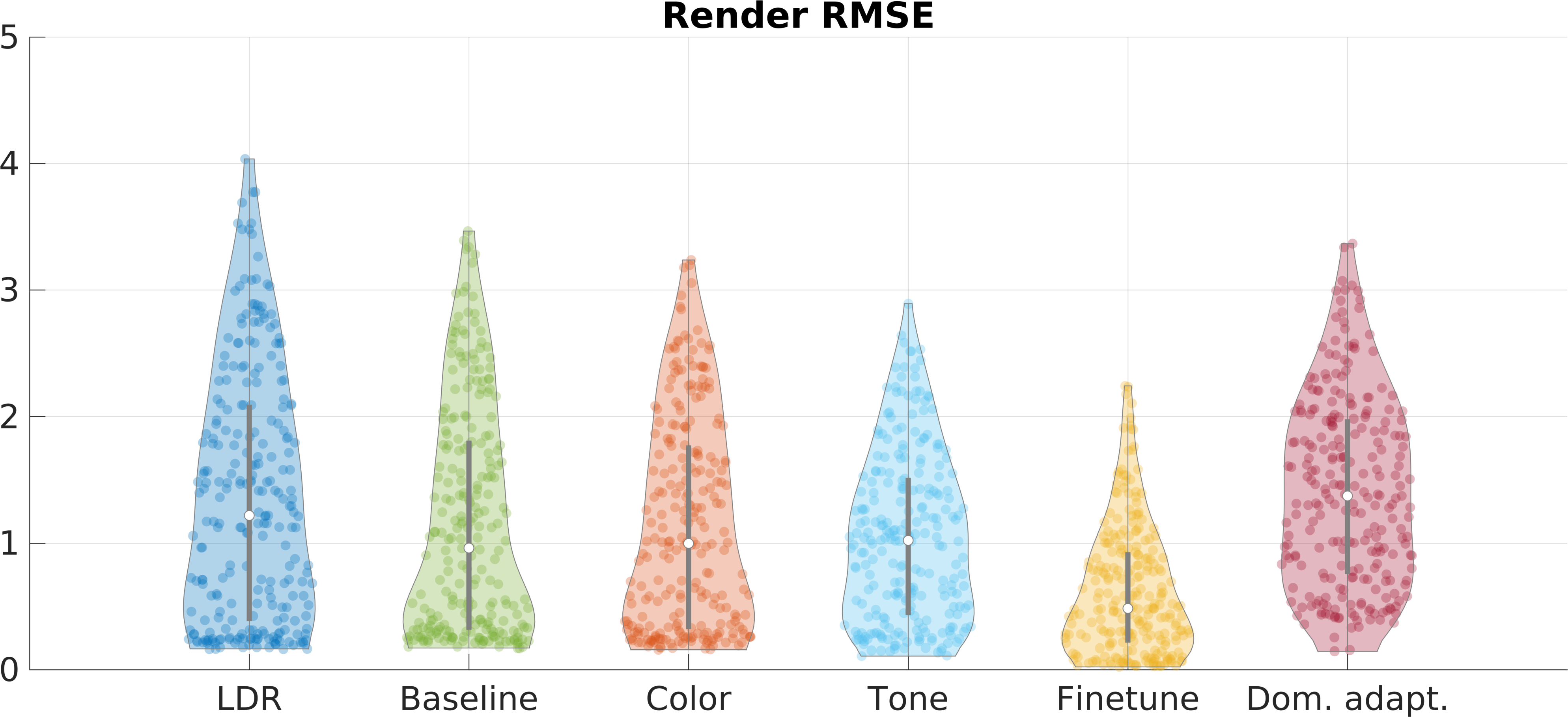
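The factor of roughly 10,000 between the sun and the rest of the panorama corresponds to about log2(10000) ≈ 13.3 photographic stops. The sketch below computes this kind of ratio for a linear HDR panorama as peak luminance over median luminance. Two choices are our own assumptions and not the paper's definition: the Rec. 709 luminance weights, and using the median to represent "the other regions".

```python
import numpy as np

def luminance(rgb):
    """Rec. 709 luminance of linear RGB values (assumed weighting)."""
    return 0.2126 * rgb[..., 0] + 0.7152 * rgb[..., 1] + 0.0722 * rgb[..., 2]

def dynamic_range(hdr):
    """Return (peak / median luminance, the same ratio in stops)."""
    lum = luminance(np.asarray(hdr, dtype=float))
    ratio = lum.max() / np.median(lum)
    return ratio, np.log2(ratio)

pano = np.ones((4, 8, 3))      # toy panorama: uniform sky of radiance 1
pano[0, 3] = 10000.0           # a single "sun" pixel 10,000x brighter
ratio, stops = dynamic_range(pano)
```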

3. Quantitative result on real data

We compare the different models on the real test dataset; the following result extends fig. 7 of the paper. It shows the full distribution of errors (curved shapes) on the real test dataset, along with the 25th, 50th and 75th percentiles. We also provide more qualitative examples for the fine-tuned network.

Qualitative examples of the fine-tuned network at the 25th, 50th and 75th error percentiles, each shown as ground truth and prediction (two sets of examples).
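One plausible way to choose the qualitative examples at the 25th, 50th and 75th percentiles is as follows: for each percentile, take the test image whose error is closest to that percentile of the error distribution. This selection rule is our assumption, and the function name is hypothetical. The error metric itself is the one used in fig. 7 of the paper and is not restated here.

```python
import numpy as np

def examples_at_percentiles(errors, percentiles=(25, 50, 75)):
    """For each percentile, return the index of the test example whose error
    is closest to that percentile of the error distribution."""
    errors = np.asarray(errors, dtype=float)
    picks = {}
    for p in percentiles:
        target = np.percentile(errors, p)           # linear interpolation by default
        picks[p] = int(np.argmin(np.abs(errors - target)))
    return picks

picks = examples_at_percentiles([0.9, 0.1, 0.5, 0.3, 0.7])
```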

4. Applications

4.1 Single shot outdoor light probe

In this section, we predict the HDR panorama from an LDR panorama captured with the Ricoh Theta S and use the HDR prediction as the light source to render a virtual object. We use the same model as in sec. 6.1 of the paper. All examples are taken from our test set, which the network never saw during training.

The following images show different objects rendered with different panoramas.

Columns: Our method | Render with ground truth | Render with LDR | Background

Below, we render the same model with many different panoramas.

Columns: Our method | Render with ground truth | Render with LDR | Background
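Using a predicted HDR panorama as a light source is a form of image-based lighting. The sketch below is minimal and rests on several assumptions: a y-up equirectangular parameterization, a purely Lambertian surface, and no shadows. The renderer and conventions behind the results above are not specified here. Under these assumptions, the diffuse irradiance for a surface normal n is the cosine-weighted sum of panorama radiance over solid angle, E(n) = Σ L(ω) max(0, n·ω) ΔΩ. If the sun is clipped, as in an LDR panorama, its contribution to this sum is greatly underestimated. The function names are hypothetical.

```python
import numpy as np

def equirect_directions(h, w):
    """Unit directions (y up) and per-pixel solid angles of an h x w
    equirectangular panorama; row 0 is the top (zenith)."""
    theta = (np.arange(h) + 0.5) / h * np.pi        # polar angle from +y
    phi = (np.arange(w) + 0.5) / w * 2.0 * np.pi    # azimuth
    theta, phi = np.meshgrid(theta, phi, indexing="ij")
    dirs = np.stack([np.sin(theta) * np.cos(phi),
                     np.cos(theta),
                     np.sin(theta) * np.sin(phi)], axis=-1)
    d_omega = np.sin(theta) * (np.pi / h) * (2.0 * np.pi / w)
    return dirs, d_omega

def diffuse_irradiance(env, normal):
    """E(n) = sum over pixels of L(w) * max(0, n.w) * dOmega, per channel."""
    h, w, _ = env.shape
    dirs, d_omega = equirect_directions(h, w)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    cos_term = np.clip(dirs @ n, 0.0, None)   # ignore light from behind
    weights = cos_term * d_omega              # (h, w)
    return np.tensordot(weights, env, axes=([0, 1], [0, 1]))  # (channels,)

# Sanity check: a uniform environment of radiance 1 gives E = pi.
env = np.ones((128, 256, 3))
E_up = diffuse_irradiance(env, [0.0, 1.0, 0.0])
E_side = diffuse_irradiance(env, [1.0, 0.0, 0.0])
```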

4.2 Object insertion in Google Street View

For the following results, we insert a virtual object into Google Street View imagery. First, we compute the center of the largest saturated region in the panorama and rotate the panorama so that the sun is centered. We then predict the HDR panorama with our network (the same model as in sec. 6.2) and use the estimated HDR as the only light source to render the object. The first column shows a virtual object relit with the HDR panorama predicted by our network. The second column shows the render with the LDR panorama, and the last column shows a regular image cropped from the panorama.

Columns: Render with our prediction | LDR rendering | Google Street View image

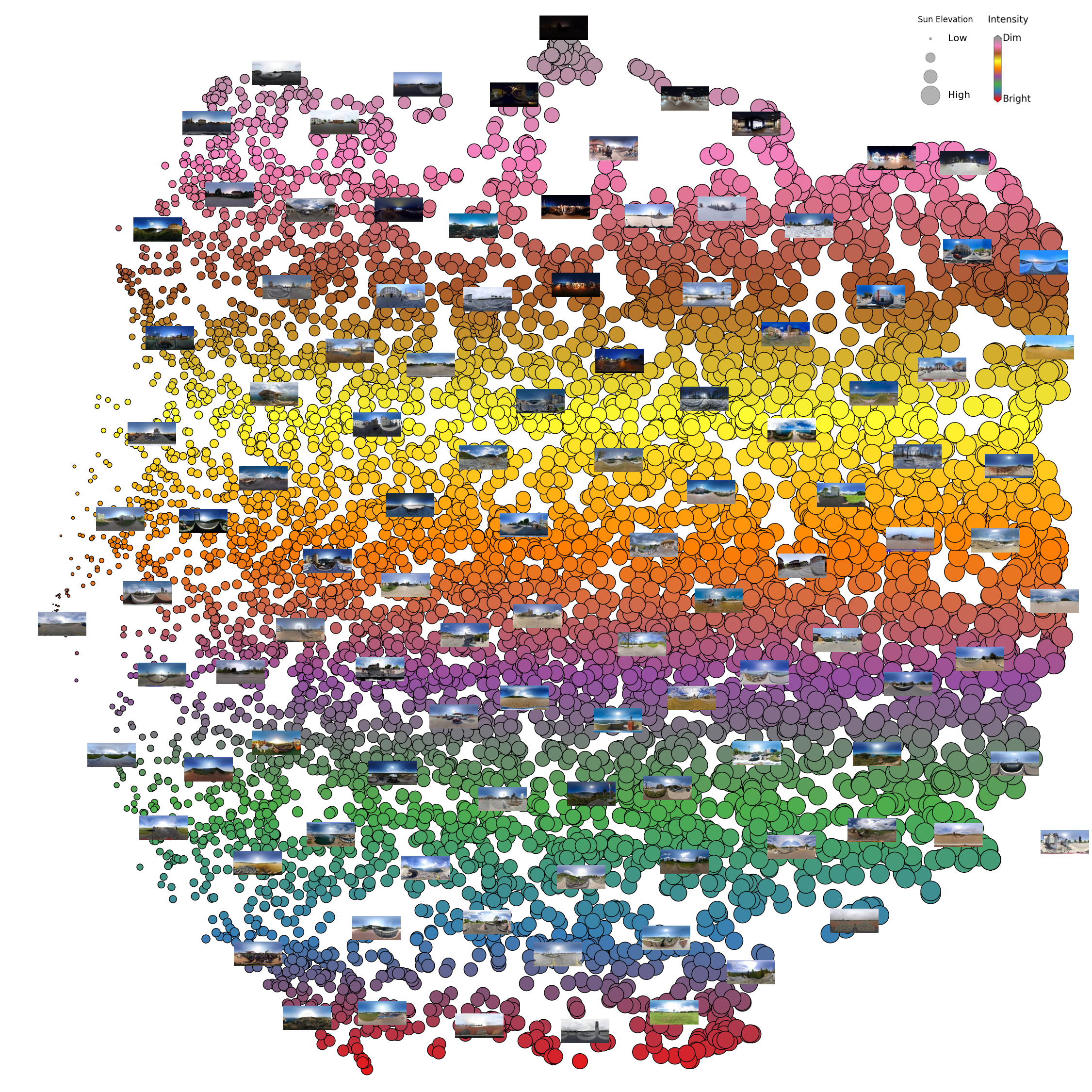
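The sun-centering step described above can be sketched as follows. The sketch makes two assumptions. First, a pixel counts as saturated when all three channels reach a threshold (0.99 here, an assumed value) on an LDR image in [0, 1]. Second, "centering" means a horizontal (azimuthal) roll of the equirectangular panorama. `center_sun` is a hypothetical helper; it uses `scipy.ndimage.label` for connected components.

```python
import numpy as np
from scipy import ndimage

def center_sun(ldr, threshold=0.99):
    """Roll an equirectangular LDR panorama (h x w x 3, values in [0, 1]) so that
    the centroid of its largest saturated region lands in the middle column.
    Returns the rotated panorama and the (row, col) centroid before rotation."""
    # A pixel counts as saturated when all channels reach the threshold.
    saturated = np.all(ldr >= threshold, axis=-1)
    # Connected components; note that a region crossing the left/right seam
    # of the panorama is split in two by this simple labeling.
    labels, n_regions = ndimage.label(saturated)
    if n_regions == 0:
        raise ValueError("no saturated region found")
    sizes = np.bincount(labels.ravel())[1:]      # skip background label 0
    largest = int(np.argmax(sizes)) + 1
    row, col = ndimage.center_of_mass((labels == largest).astype(float))
    shift = ldr.shape[1] // 2 - int(round(col))
    return np.roll(ldr, shift, axis=1), (row, col)
```

A horizontal roll of an equirectangular image is exactly a rotation of the sphere about the vertical axis, so the sky stays geometrically valid after this step.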

4.3 Image matching in the SUN360 dataset

The following figure shows the t-SNE visualization of the SUN360 dataset. We use the 64-D embedding vector produced by the encoder of our network as the feature for each LDR image, reduce it to 2 dimensions with t-SNE, and visualize the SUN360 database in the following figure. We also show the sun elevation (circle size) and the sun intensity (circle color) obtained from the predicted HDR images. Panoramas on the left of the figure have a lower sun elevation, and those on the right have a higher sun elevation. Panoramas at the top are dim, and those at the bottom are brighter. The 64-D vector is therefore a potential descriptor for outdoor LDR panoramas, since it captures physical properties of the sky.
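A minimal sketch of the t-SNE step is shown below. It assumes the 64-D encoder embeddings are already available as an N x 64 array; random stand-ins are used in the test. The projection uses scikit-learn's `TSNE`. The mapping from sun elevation to circle size and from intensity to a log10 color value is our own illustrative choice, and both function names are hypothetical.

```python
import numpy as np
from sklearn.manifold import TSNE

def tsne_layout(embeddings, seed=0):
    """Project N x 64 encoder embeddings to N x 2 with t-SNE."""
    embeddings = np.asarray(embeddings, dtype=float)
    # t-SNE requires the perplexity to be smaller than the number of samples.
    perplexity = min(30.0, len(embeddings) - 1.0)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca",
                random_state=seed)
    return tsne.fit_transform(embeddings)

def marker_style(sun_elevation_deg, sun_intensity, max_size=200.0):
    """Circle size grows linearly with sun elevation (0 to 90 degrees);
    color encodes log10 of the predicted sun intensity."""
    elev = np.clip(np.asarray(sun_elevation_deg, dtype=float), 0.0, 90.0)
    sizes = 10.0 + (max_size - 10.0) * elev / 90.0
    colors = np.log10(np.asarray(sun_intensity, dtype=float))
    return sizes, colors
```

The returned coordinates, sizes, and colors can be passed to matplotlib's `scatter(x, y, s=sizes, c=colors)`. A log scale for color keeps the very wide range of sun intensities readable.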

5. Rendering results using a larger object

In this section, we present additional rendering results using a larger object that reflects the environment: the buildings and highlights of the predicted HDR panorama are reflected in the rendered sphere. The first row shows the full-resolution LDR panorama from Google Street View, and the second row shows renders from different directions. Our HDR prediction captures the HDR lighting adequately: shadows and highlights are clearly visible in the renders. The 3D model used in this example is from here.

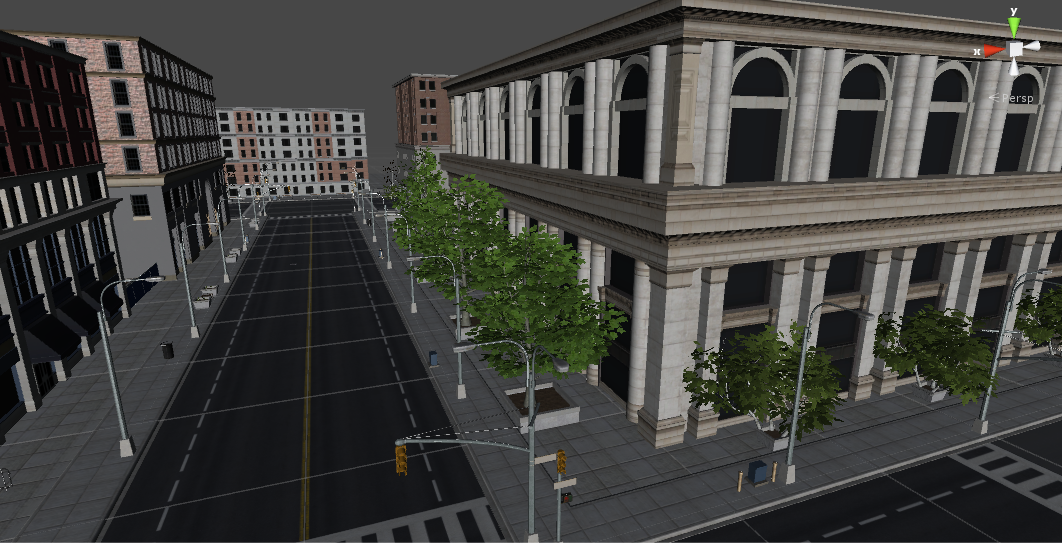

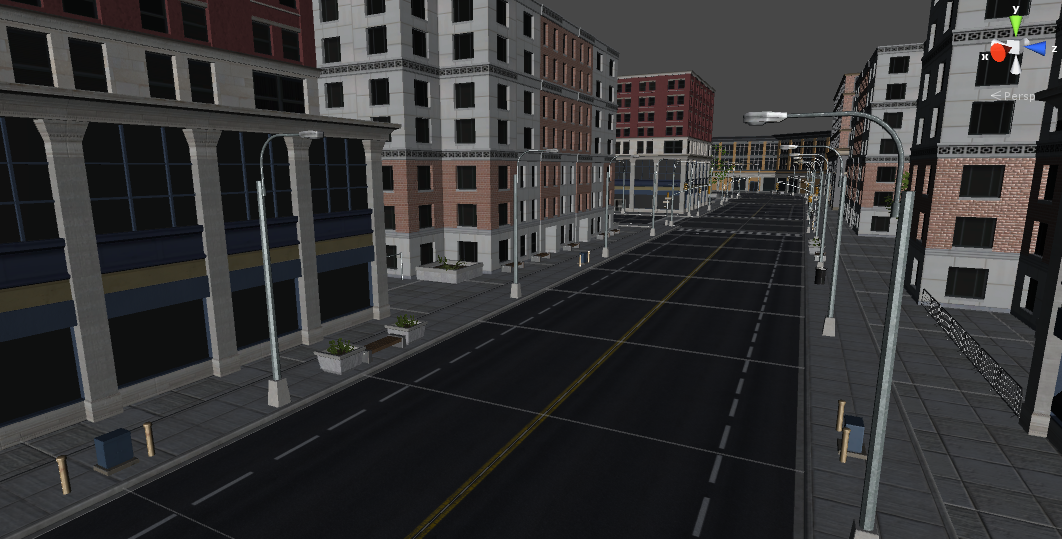

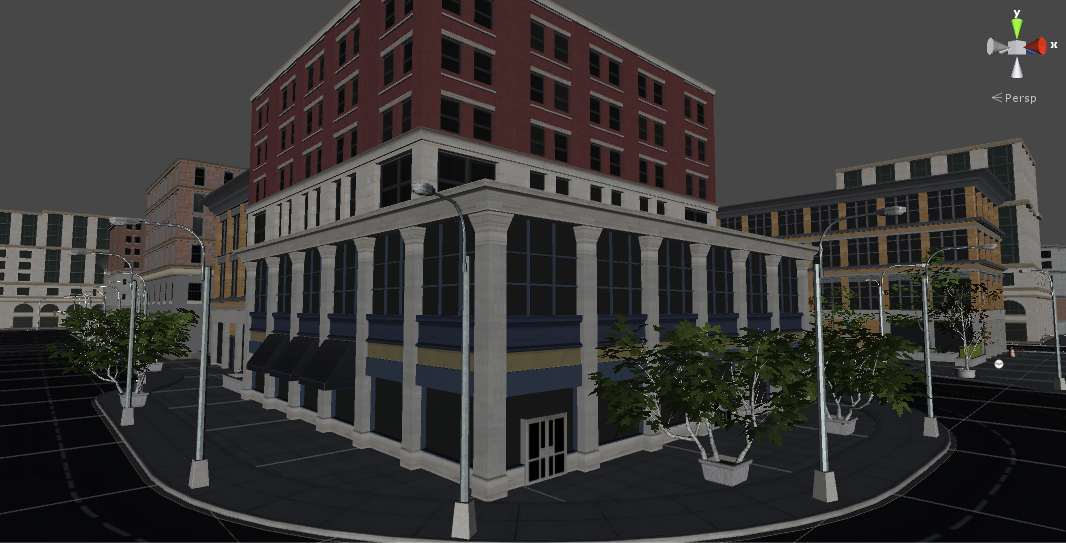

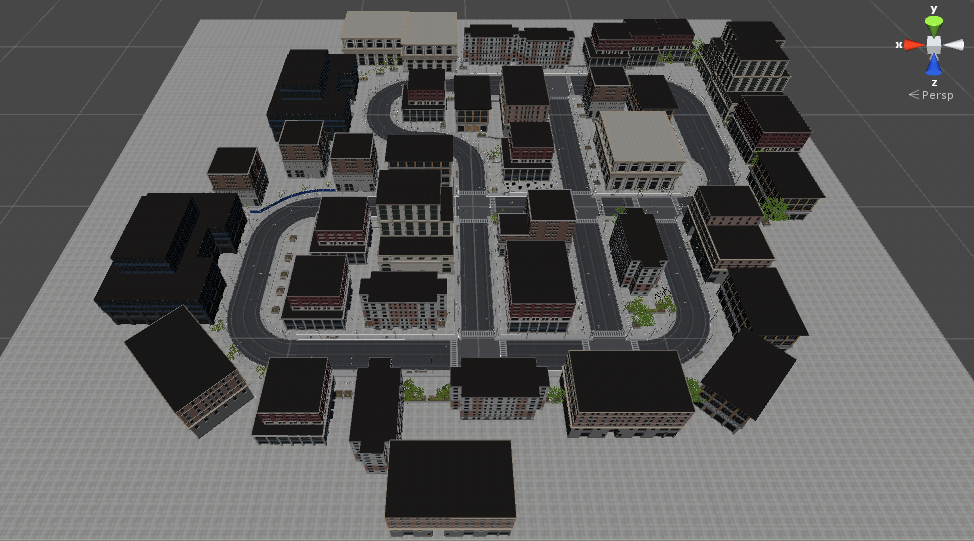

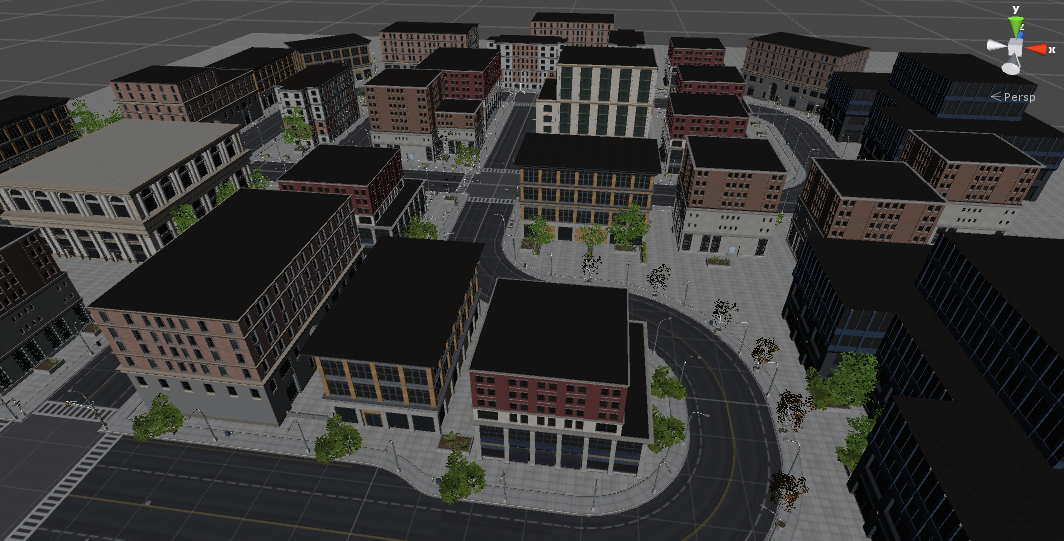

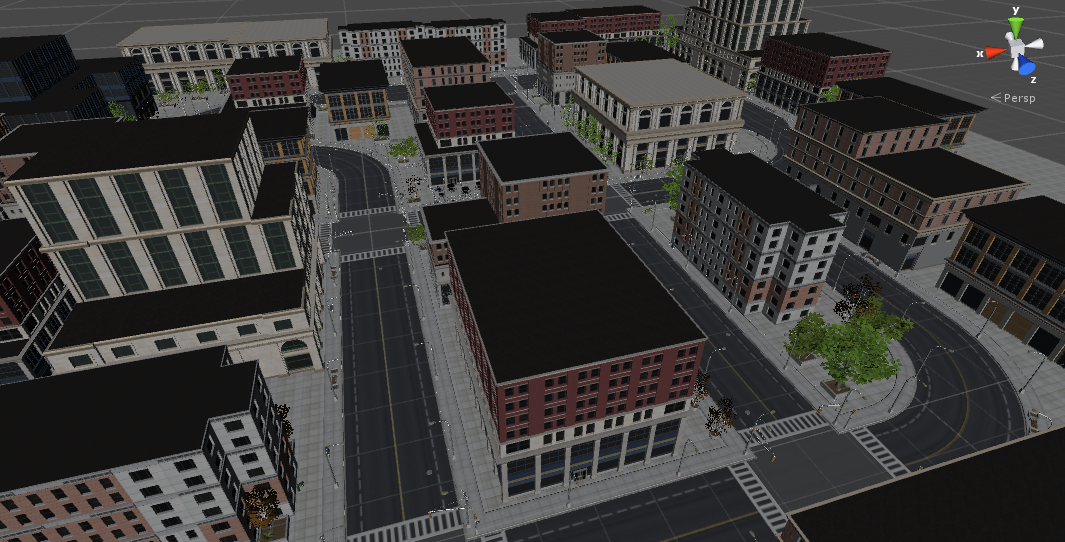
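The environment reflections in the rendered sphere come from looking up the panorama along mirror-reflected view rays. The sketch below makes three assumptions: an orthographic camera looking down the -z axis, a y-up equirectangular panorama, and a perfect mirror sphere. The renders above use a full 3D model and renderer, which this does not reproduce. The function names are hypothetical.

```python
import numpy as np

def dir_to_equirect(dirs, h, w):
    """Map unit directions (y up) to pixel indices of an h x w equirect map."""
    x, y, z = dirs[..., 0], dirs[..., 1], dirs[..., 2]
    theta = np.arccos(np.clip(y, -1.0, 1.0))          # polar angle from +y
    phi = np.mod(np.arctan2(z, x), 2.0 * np.pi)       # azimuth in [0, 2pi)
    row = np.minimum((theta / np.pi * h).astype(int), h - 1)
    col = np.minimum((phi / (2.0 * np.pi) * w).astype(int), w - 1)
    return row, col

def render_mirror_ball(env, size=64):
    """Orthographic render of a perfect mirror sphere lit by an equirect env."""
    h, w, _ = env.shape
    u = (np.arange(size) + 0.5) / size * 2.0 - 1.0
    x, y = np.meshgrid(u, -u)                 # image rows go top to bottom
    inside = x**2 + y**2 < 1.0                # pixels covered by the sphere
    z = np.sqrt(np.clip(1.0 - x**2 - y**2, 0.0, None))
    n = np.stack([x, y, z], axis=-1)          # sphere normal, facing camera (+z)
    d = np.array([0.0, 0.0, -1.0])            # view ray travels toward -z
    r = d - 2.0 * (n @ d)[..., None] * n      # mirror reflection of the view ray
    row, col = dir_to_equirect(r, h, w)
    out = np.zeros((size, size, 3))
    out[inside] = env[row[inside], col[inside]]
    return out
```

Because each sphere pixel reflects a single direction, a clipped (LDR) sun shows up as a dull spot, while the HDR prediction keeps a bright highlight.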

6. Virtual city model

The following figures show the realistic virtual 3D model of a small city used to generate the synthetic dataset. The model was obtained from the Unity Store and contains over 100 modular building pieces with different styles and materials, including realistic roads, sidewalks, and foliage.

Overview of the city model

Detail of the city model