x-hour Outdoor Photometric Stereo

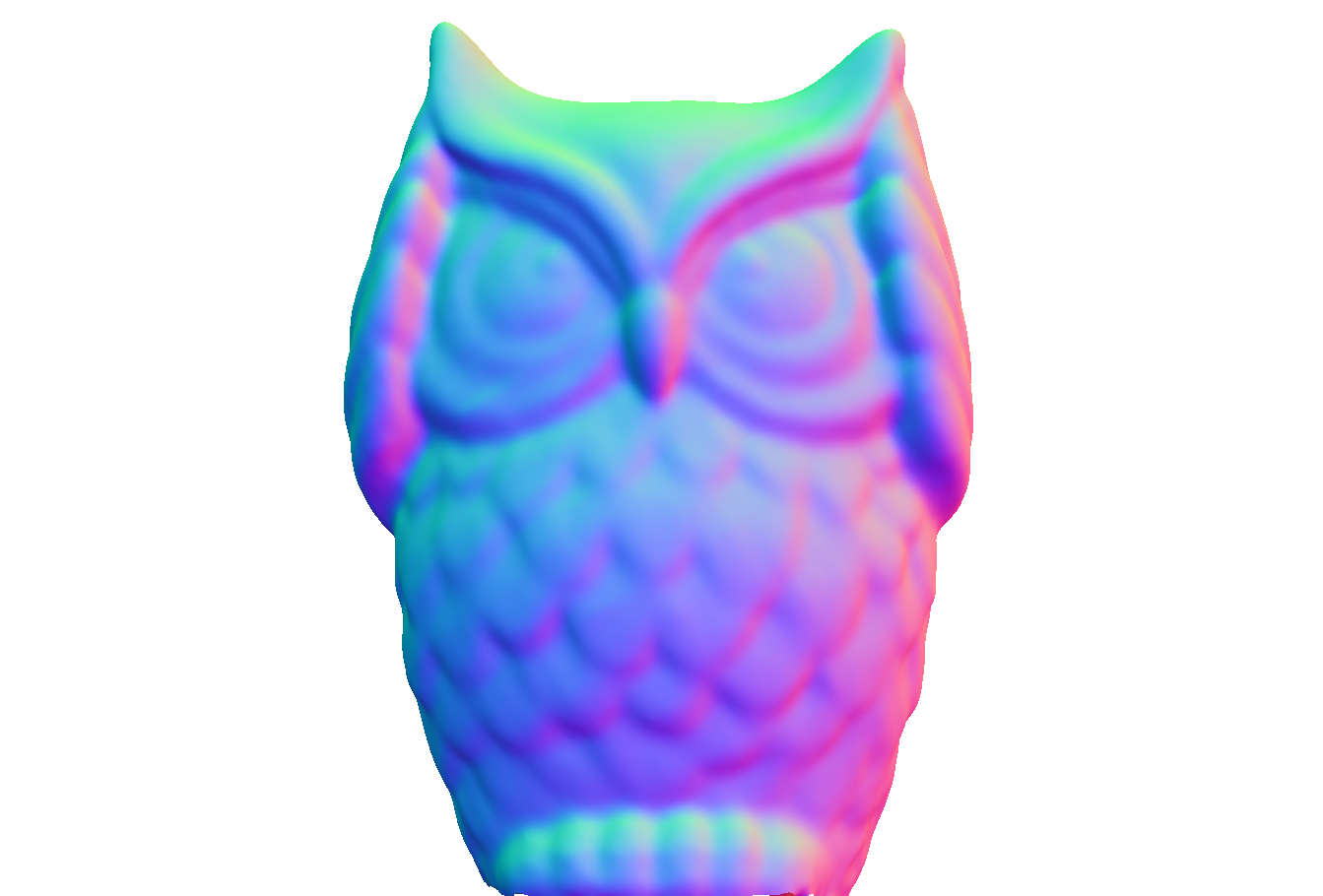

While Photometric Stereo (PS) has long been confined to the lab, there has recently been interest in applying this technique to reconstruct outdoor objects and scenes. Unfortunately, the most successful outdoor PS techniques typically require either gathering months of data or waiting for a particular time of year. In this paper, we analyze the illumination requirements for single-day outdoor PS to yield stable normal reconstructions, and determine that these requirements are often met in much less than a full day. In particular, we show that the right set of conditions for stable PS solutions may be observed in the sky within short time intervals of just over one hour. This work provides, for the first time, a detailed analysis of the factors affecting the performance of x-hour outdoor photometric stereo.
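To make "stable normal reconstructions" concrete, here is a minimal sketch of classic Lambertian photometric stereo with distant point lights. It is *not* the sky-illumination model analyzed in this paper. The sketch assumes shadows and noise are ignored, and it uses the condition number of the light matrix as a generic proxy for stability. The helper `arc_directions` is a hypothetical stand-in for a sun path: light directions at a fixed elevation that sweep through a range of azimuths. A narrow sweep, loosely analogous to a short capture window, yields a worse-conditioned system than a wide one.

```python
import numpy as np


def arc_directions(elev_deg, az_start_deg, az_end_deg, k):
    """Hypothetical sun-like light directions (assumption, not the paper's model):
    k unit vectors at a fixed elevation, sweeping azimuth between two angles."""
    az = np.radians(np.linspace(az_start_deg, az_end_deg, k))
    el = np.radians(elev_deg)
    return np.stack(
        [np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.full(k, np.sin(el))],
        axis=1,
    )


def solve_normals(L, I):
    """Least-squares Lambertian photometric stereo (shadows ignored).

    L: (k, 3) light directions, one row per image.
    I: (k, n) observed intensities for n pixels.
    Returns unit normals (n, 3) and albedos (n,).
    """
    # Solve L @ G = I, where column j of G is albedo_j * normal_j.
    G, *_ = np.linalg.lstsq(L, I, rcond=None)
    albedo = np.linalg.norm(G, axis=0)
    normals = (G / np.maximum(albedo, 1e-12)).T
    return normals, albedo


def lighting_condition_number(L):
    """Ratio of largest to smallest singular value of the light matrix.

    Larger values mean intensity noise is amplified more in the recovered normals.
    """
    s = np.linalg.svd(L, compute_uv=False)
    return s[0] / s[-1]


if __name__ == "__main__":
    # Two synthetic pixels with known normals and albedos.
    normals_true = np.array([[0.0, 0.0, 1.0], [0.3, 0.2, 0.93]])
    normals_true /= np.linalg.norm(normals_true, axis=1, keepdims=True)
    albedo_true = np.array([0.8, 0.5])

    L_wide = arc_directions(45, 0, 180, 8)    # wide sweep of light directions
    L_narrow = arc_directions(45, 0, 15, 8)   # narrow sweep of light directions

    # Render noiseless Lambertian intensities, shape (8, 2).
    I = L_wide @ (normals_true * albedo_true[:, None]).T
    normals, albedo = solve_normals(L_wide, I)
    print(np.allclose(normals, normals_true), np.allclose(albedo, albedo_true))
    print(lighting_condition_number(L_wide), lighting_condition_number(L_narrow))
```

With noiseless data, both sweeps recover the normals exactly. Once noise is added, the narrow sweep's much larger condition number means the same intensity errors produce larger normal errors. This is the kind of stability question the paper studies under real sky illumination.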