The goal of this project is to become familiar with high dynamic range (HDR) imaging, image-based lighting (IBL), and their applications. We create HDR images from sequences of low dynamic range (LDR) images and composite 3D models seamlessly into photographs using image-based lighting techniques.

HDR imaging is a set of techniques used in imaging and photography to reproduce a greater dynamic range of luminosity than standard digital imaging or photographic techniques can. In digital photography, multiple exposures of the same scene are merged in image editing software to create a more realistic image or a dramatic effect. The combined exposures can display a wider range of tonal values than the camera can record in a single image. Most methods for creating HDR images merge multiple LDR images taken at varying exposures, which is what we do in this project.

HDR images have many applications, such as contrast enhancement, hyper-realistic art, post-process intensity adjustments, and image-based lighting. This project focuses on image-based lighting, specifically relighting virtual objects, which requires a 360-degree image of the scene. Capturing a 360-degree image is difficult with a standard camera, so we instead capture an HDR photograph of a spherical mirror, which provides the same omnidirectional lighting information. With this panoramic HDR image, we can then relight 3D models and composite them seamlessly into photographs.

In summary, this project has four main parts: (1) data collection, (2) producing the HDR image, (3) panoramic transformation, and (4) Blender.

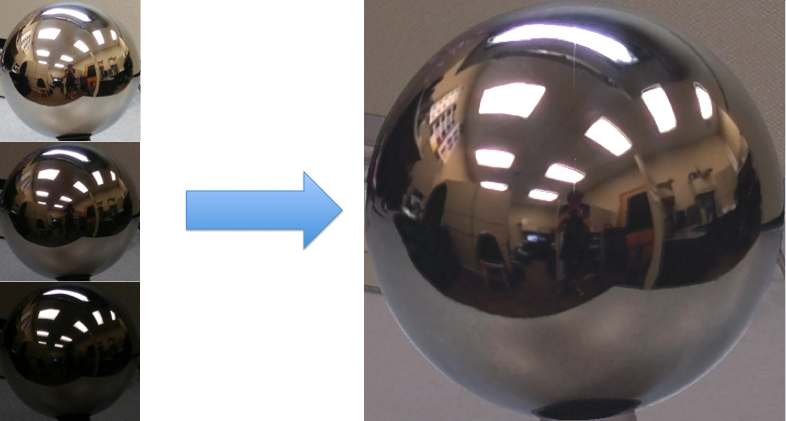

In this part we capture multiple exposures of a metal ball placed in the scene of interest and merge these exposures into one image with high dynamic range. This requires the following equipment:

o Spherical mirror

o Camera with exposure control

o Tripod, rigid surface to hold the camera, or a very steady hand

First we find a good scene in which to place our objects. We mount the camera on a tripod, place the spherical mirror in the scene, and photograph it at several different exposures (at least three).

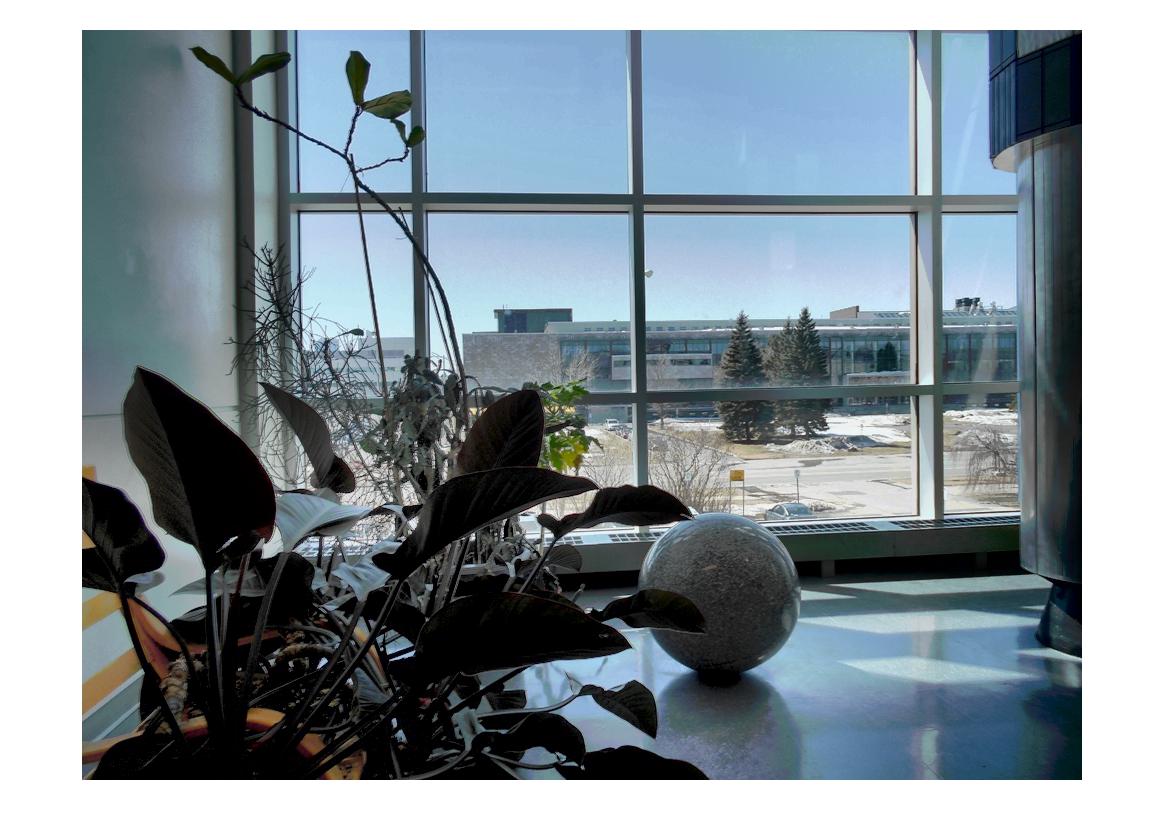

Finally, we remove the mirror from the scene and take a photo at normal exposure. This is the background image.

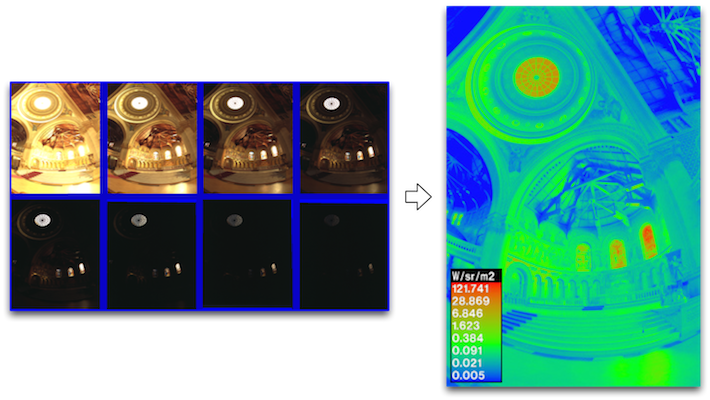

We want to build an HDR radiance map from several LDR exposures. For this purpose I used the method of [Debevec and Malik 1997] (see the code ‘radiance_map.m’).

Radiance is the radiant flux emitted or reflected by a surface per unit solid angle per unit projected area. Irradiance is the flux of radiant energy per unit area (normal to the direction of flow of radiant energy through a medium). In photography, exposure is the amount of light per unit area (the image plane illuminance times the exposure time) reaching a photographic film or electronic image sensor, as determined by shutter speed, lens aperture, and scene luminance.

The observed pixel value Zij for pixel i in image j is a function of unknown scene radiance and known exposure duration:
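For reference, the relation from [Debevec and Malik 1997] is usually written as below. Here $f$ is the camera's unknown response function, $E_i$ is the irradiance at pixel $i$, $\Delta t_j$ is the exposure time of image $j$, $w$ is a weighting function that favors mid-range pixel values, and $\lambda$ controls the smoothness of $g$. The symbols follow the paper and are an assumption about notation; they do not necessarily match the variable names in ‘radiance_map.m’.

```latex
% Image formation: pixel value as a function of irradiance times exposure time
Z_{ij} = f(E_i \, \Delta t_j)

% Taking g = \ln f^{-1} turns the product into a sum
g(Z_{ij}) = \ln E_i + \ln \Delta t_j

% g and the E_i are recovered by minimizing a weighted least-squares objective
% with a smoothness penalty on the discrete second derivative of g
\mathcal{O} = \sum_{i=1}^{N} \sum_{j=1}^{P}
  \left\{ w(Z_{ij}) \left[ g(Z_{ij}) - \ln E_i - \ln \Delta t_j \right] \right\}^2
  + \lambda \sum_{z=Z_{\min}+1}^{Z_{\max}-1} \left[ w(z) \, g''(z) \right]^2,
\qquad g''(z) = g(z-1) - 2g(z) + g(z+1)
```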

I took five images with different exposure times, stepping the camera's exposure setting from +2 down to -2: [+2, +1, 0, -1, -2].
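Once the response curve g is known, the weighted merge from [Debevec and Malik 1997] can be sketched for a single pixel as follows. This is a minimal illustration, not the code in ‘radiance_map.m’. It assumes the camera steps are exposure values (EV) at fixed aperture and ISO, so that each step doubles or halves the exposure time. The function names, the `base_time` parameter, and the fallback for fully saturated pixels are all hypothetical choices made for this example.

```python
import math

def log_exposure_times(ev_steps, base_time=1.0):
    """Convert EV steps to ln(exposure time).

    Assumption: each +1 step doubles the exposure time relative to
    base_time (fixed aperture and ISO). base_time is hypothetical.
    """
    return [math.log(base_time * 2.0 ** ev) for ev in ev_steps]

def hat_weight(z, z_min=0, z_max=255):
    """Triangle weighting that trusts mid-range pixel values most."""
    mid = 0.5 * (z_min + z_max)
    return z - z_min if z <= mid else z_max - z

def merge_pixel(z_values, log_dt, g):
    """Return ln(E) for one pixel seen in several exposures.

    z_values: pixel value (0..255) of the same pixel in each image
    log_dt:   ln(exposure time) of each image
    g:        response curve as a list of 256 values, g[z] = ln(exposure)
    """
    num = 0.0
    den = 0.0
    for z, ldt in zip(z_values, log_dt):
        w = hat_weight(z)
        num += w * (g[z] - ldt)
        den += w
    if den == 0.0:
        # All samples are 0 or 255: fall back to the middle exposure
        # (an arbitrary choice for this sketch).
        m = len(z_values) // 2
        return g[z_values[m]] - log_dt[m]
    return num / den

# Usage with a made-up linear response curve, for illustration only.
g_linear = [math.log((z + 1) / 256.0) for z in range(256)]
log_dt = log_exposure_times([2, 1, 0, -1, -2])
ln_E = merge_pixel([127, 63, 31, 15, 7], log_dt, g_linear)
```

Running `merge_pixel` over every pixel and color channel and exponentiating the result gives the radiance map. In practice the loop would be vectorized.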

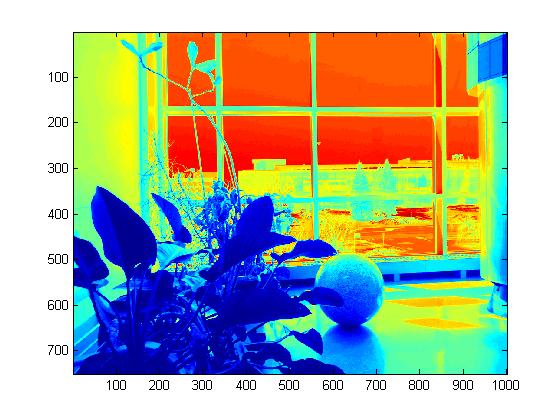

The results of this part are shown below. The center image is the normal image, taken at exposure 0:

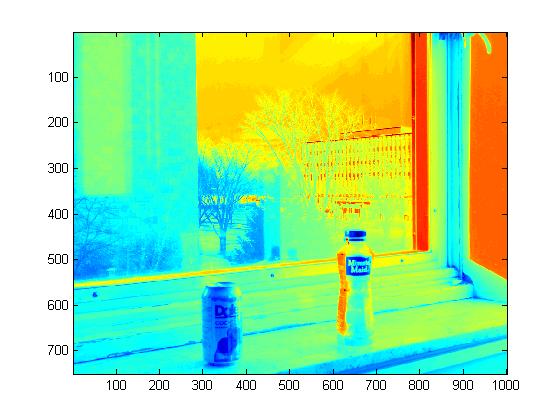

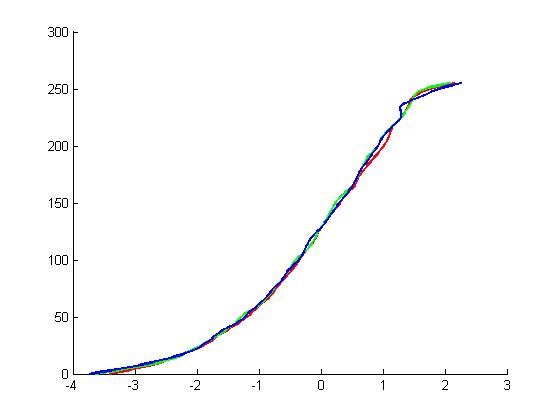

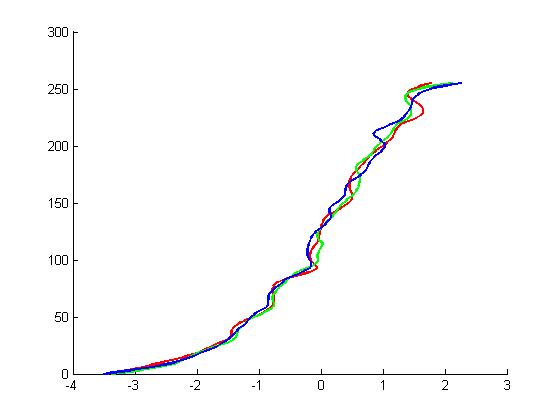

The result of this part is very good. I used a tripod to take the images. As you can see, the response curve (g curve) is very smooth, so the tone-mapped result is good. The camera cannot capture an image like this in a single shot. I also want to show how important a tripod is when taking a sequence of images: without one, the response curve is not smooth and the result is poor. To demonstrate this, I repeated this part with another scene and intentionally moved my hand slightly while taking the first image. The results are shown below:

The center image is the normal image, taken at exposure 0:

As you can see, the response curve is not very smooth, so the tone-mapped image is not very good. There are artifacts along the edges of the leaves.
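One possible way to compare the smoothness of the two response curves numerically, rather than by eye, is to sum the squared discrete second differences of g. This is the same second derivative that the smoothness term in [Debevec and Malik 1997] penalizes. The sketch below is only an illustration: the function name `curve_roughness` is hypothetical and not part of ‘radiance_map.m’, and the two example curves are synthetic rather than measured.

```python
def curve_roughness(g):
    """Sum of squared second differences g[z-1] - 2*g[z] + g[z+1].

    Lower values mean a smoother curve; a straight line scores 0.
    """
    return sum(
        (g[z - 1] - 2.0 * g[z] + g[z + 1]) ** 2
        for z in range(1, len(g) - 1)
    )

# Synthetic curves for illustration only (not measured data).
smooth = [float(z) for z in range(256)]
jagged = [z + (0.5 if z % 2 else -0.5) for z in range(256)]

smooth_score = curve_roughness(smooth)
jagged_score = curve_roughness(jagged)
```

A curve recovered from misaligned images would be expected to score noticeably higher than one recovered from a tripod sequence of a similar scene.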