Depth Texture Synthesis for Realistic Architectural Modeling

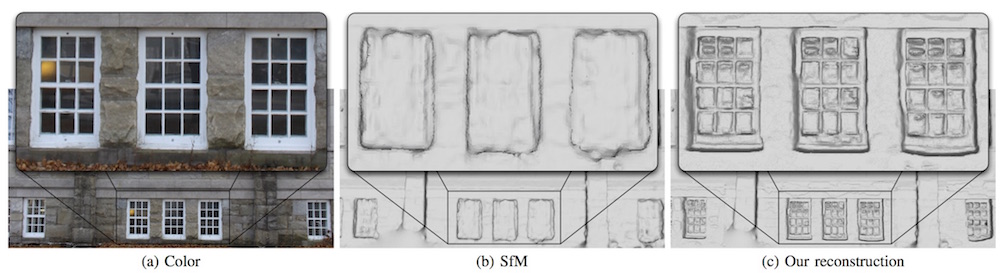

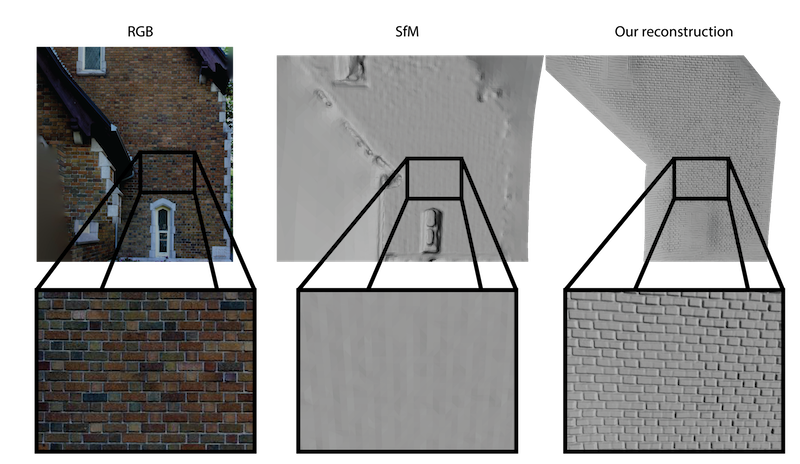

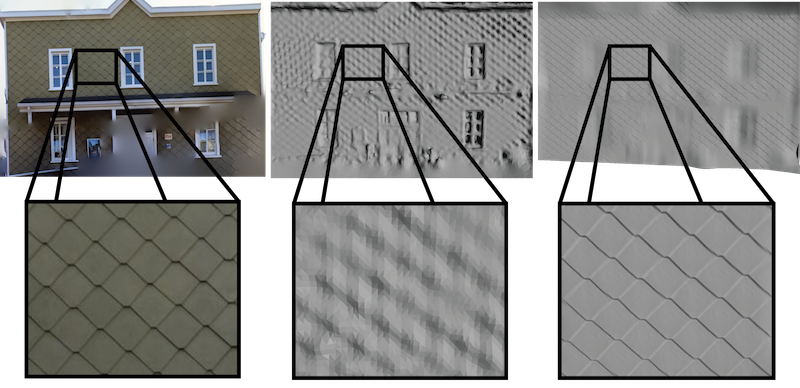

Large scenes such as building facades and other architectural constructions often contain repeating elements such as identical windows and brick patterns. In this paper, we present a novel approach that improves the resolution and geometry of 3D meshes of large scenes with such repeating elements. By combining structure-from-motion (SfM) reconstruction with an off-the-shelf depth sensor, our approach captures a small sample of the scene in high resolution and automatically extends that information to similar regions of the scene. Using RGB and SfM depth information as a guide and simple geometric primitives as a canvas, our approach extends the high-resolution mesh by exploiting powerful image-based texture synthesis approaches. The final result improves on standard SfM reconstruction with higher detail. Our approach requires less manual labor than full RGBD reconstruction and is much cheaper than LiDAR-based solutions.