Deep Outdoor Illumination Estimation (#3565) — Supplementary Material

The purpose of this document is to extend the explanations, results and analysis presented in the main paper. We provide additional results on sun position estimation, camera parameter estimation and virtual object insertion on both the SUN360 and HDR datasets, as well as further details on the HDR capture process.

All the examples provided (images and panoramas) are taken from our test set, which the neural network never saw during either training or validation.

Table of Contents

- Sun Position (extends fig. 6)

- Virtual Object Insertion (extends fig. 9)

- Camera Parameters Estimation

- HDR Captures (extends sec. 6.3)

- HDR Virtual Object Insertion (extends fig. 11)

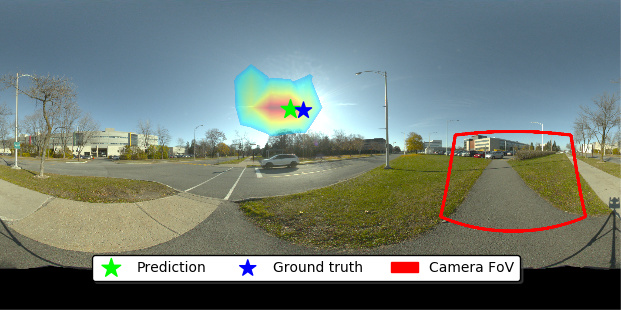

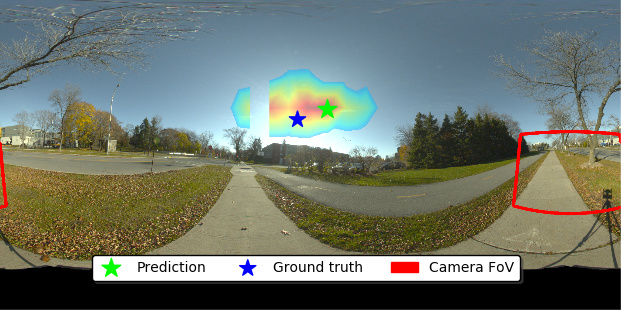

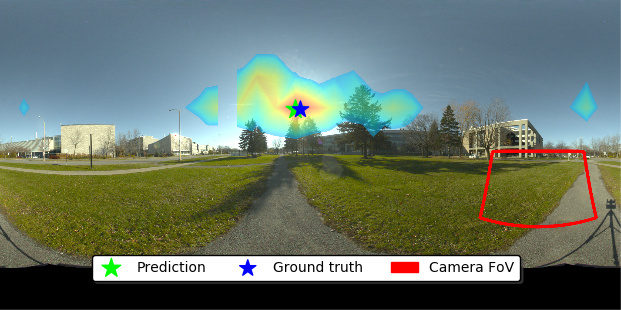

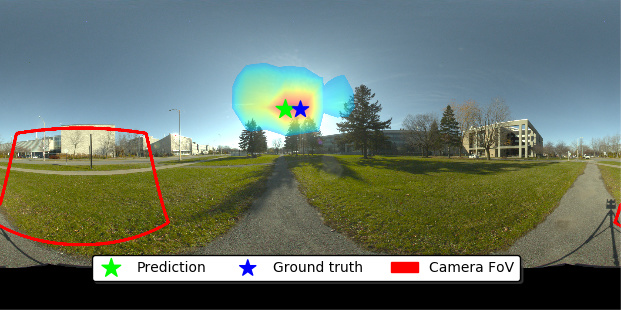

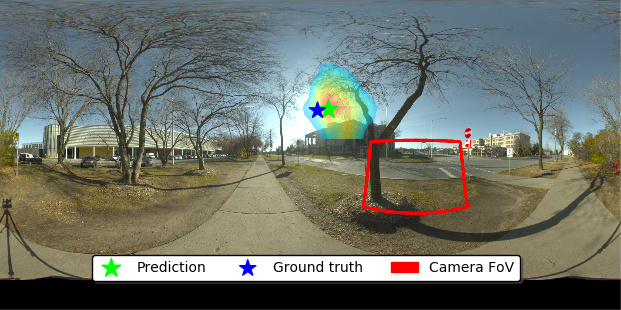

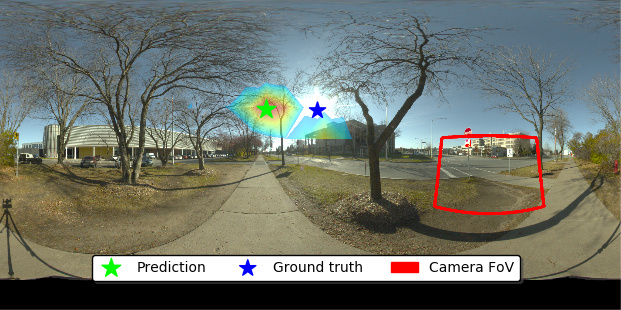

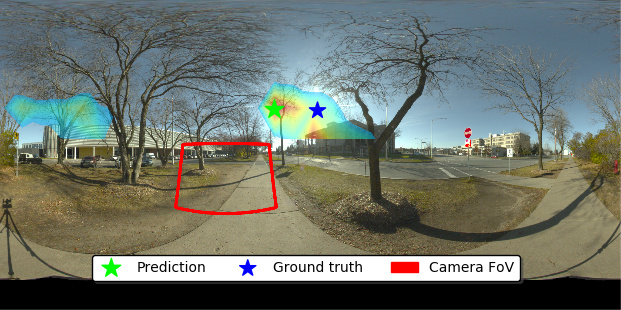

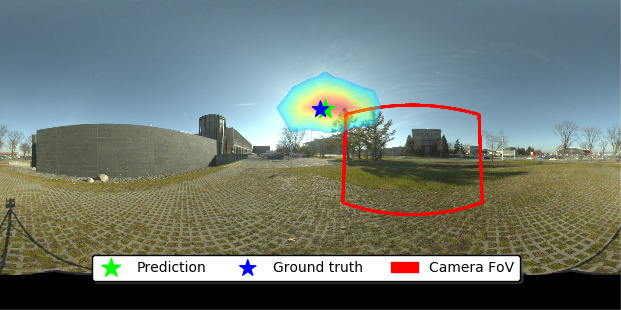

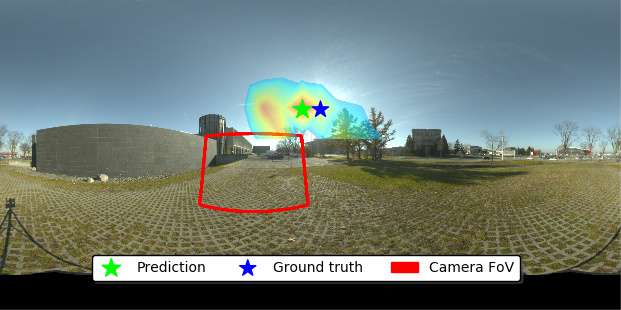

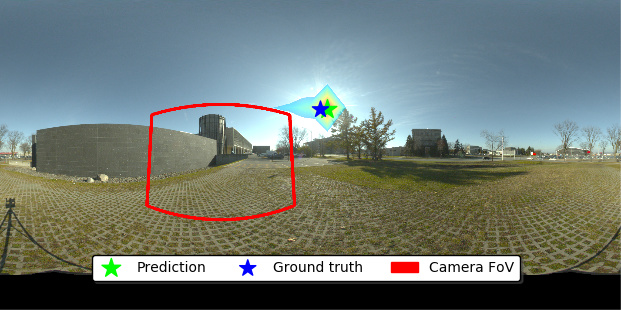

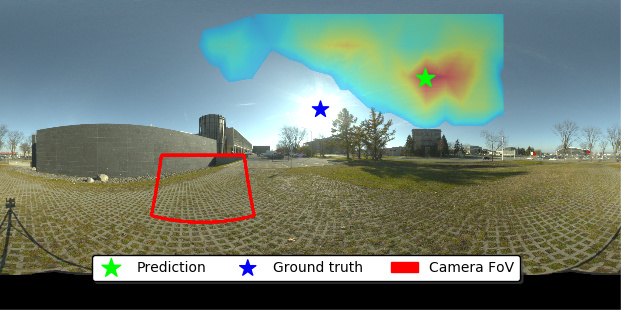

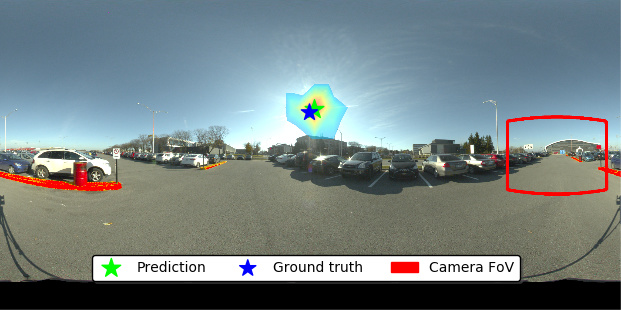

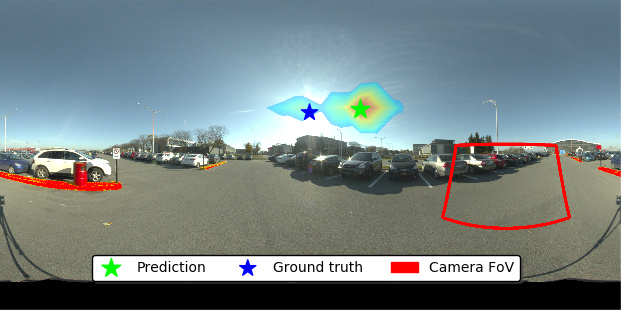

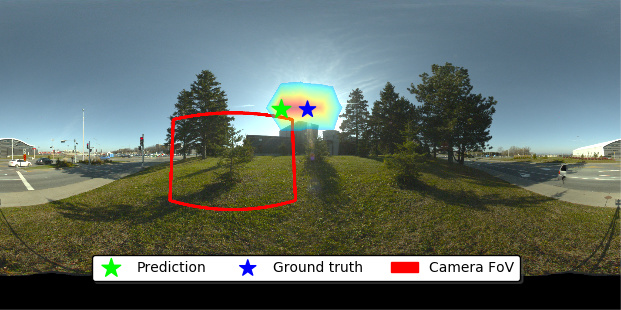

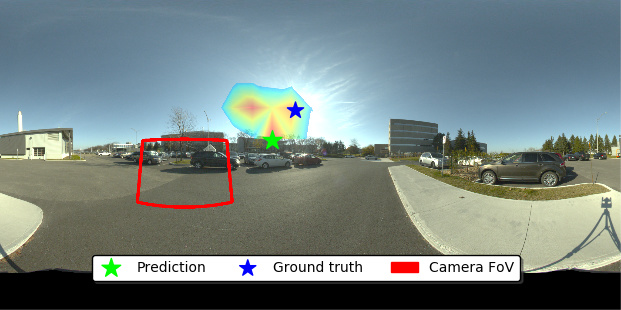

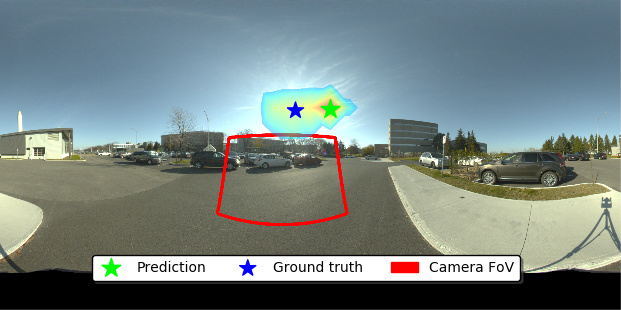

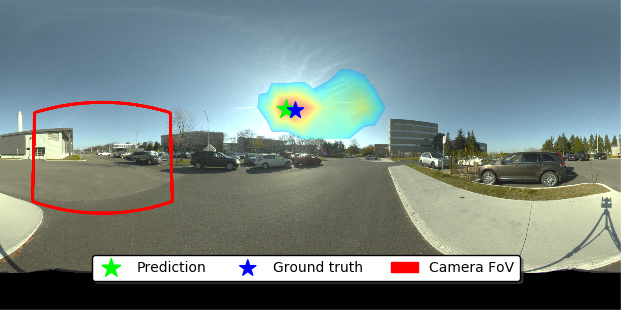

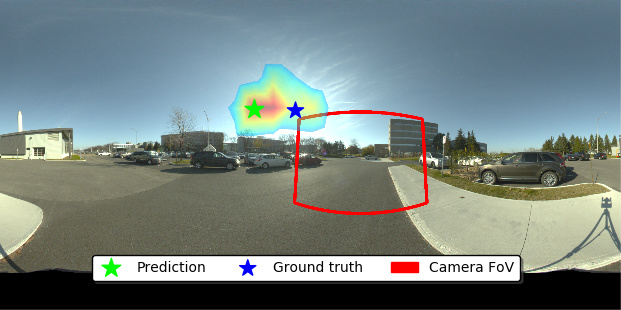

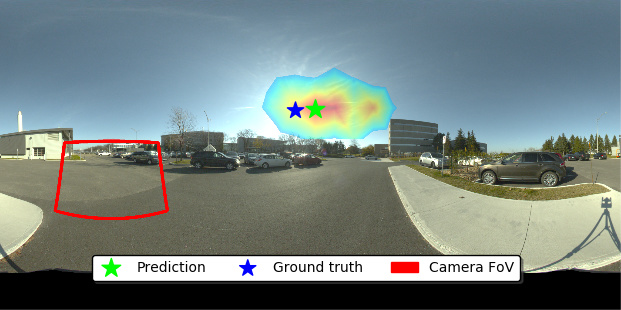

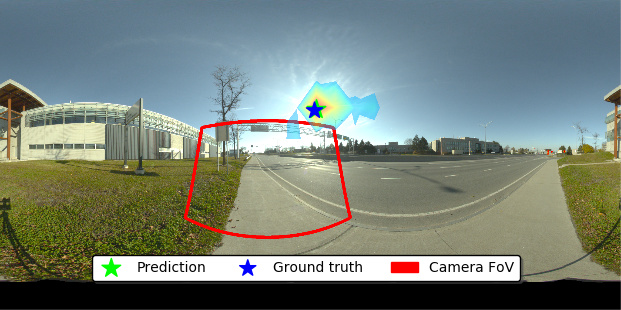

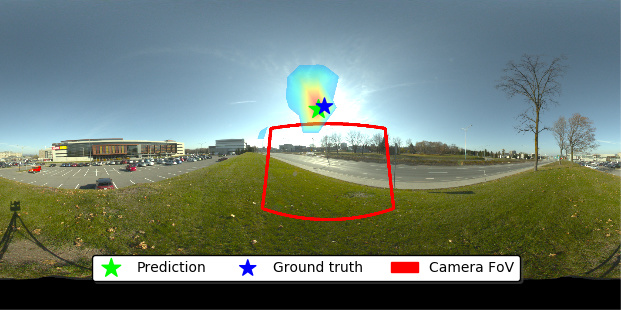

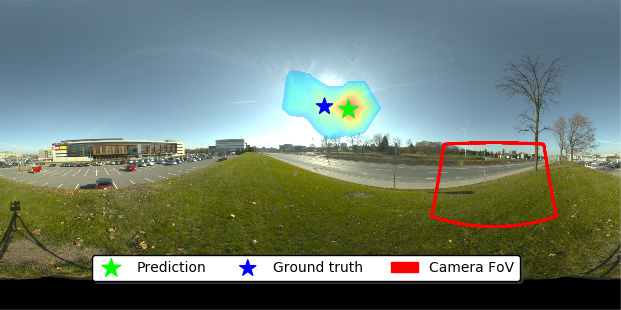

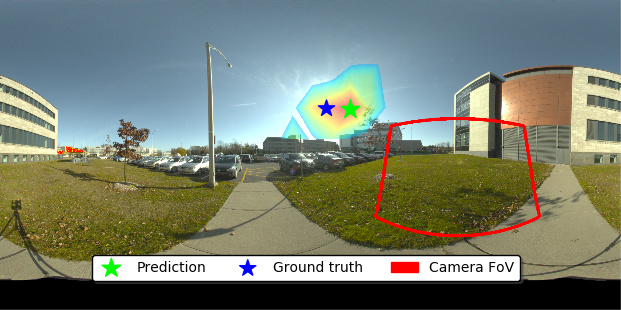

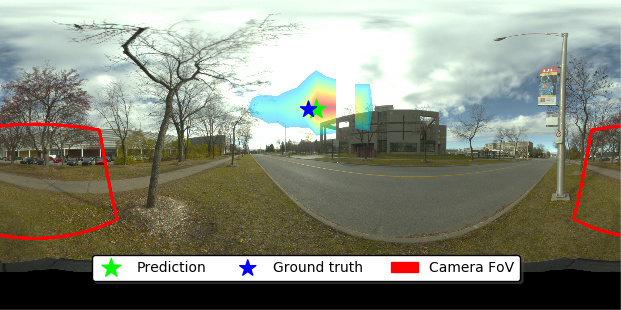

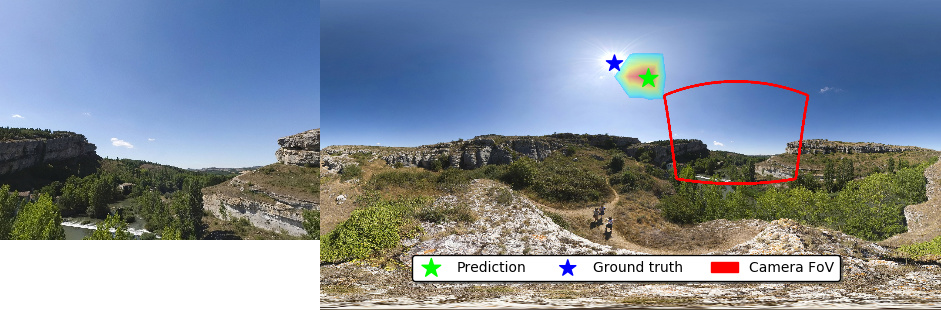

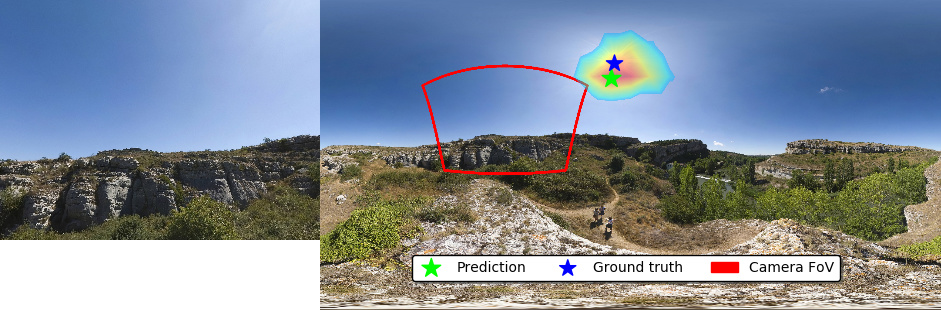

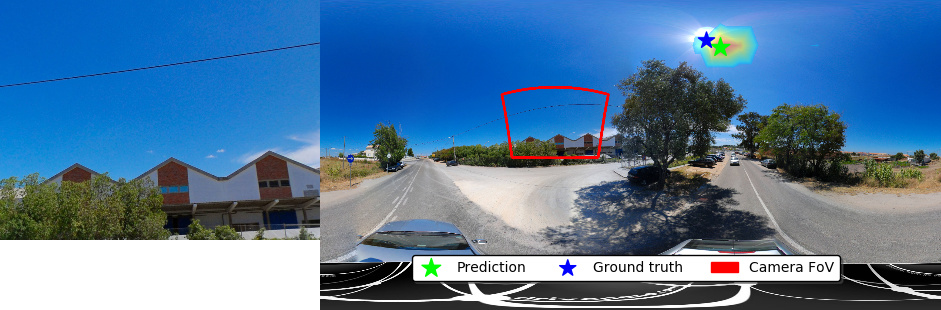

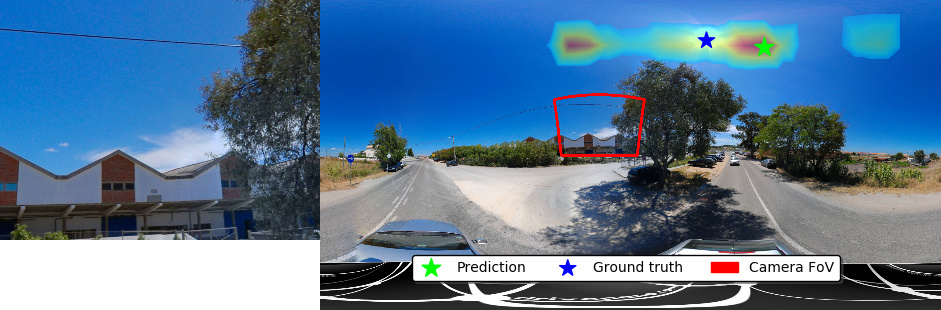

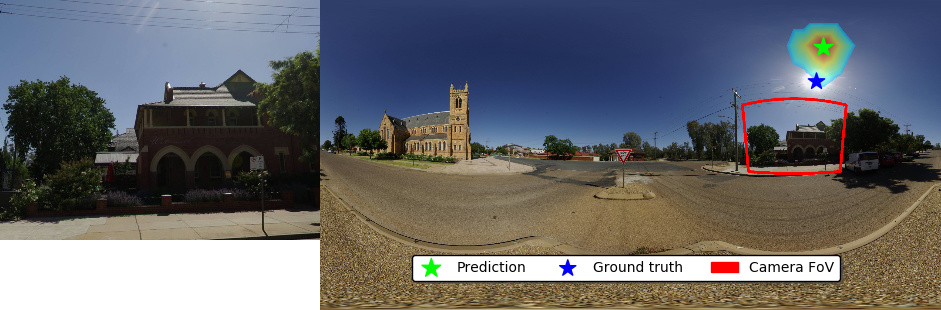

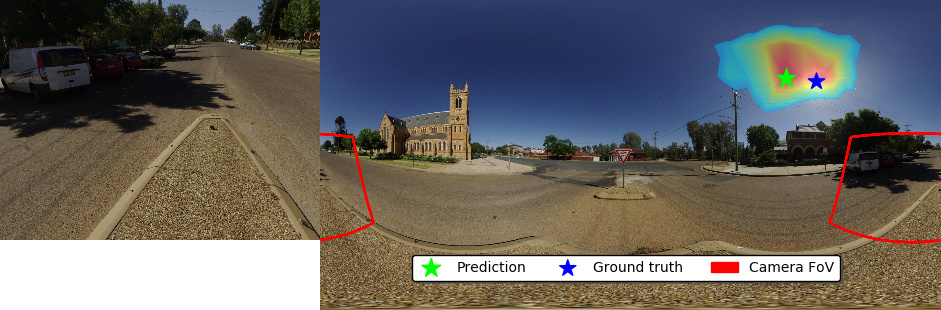

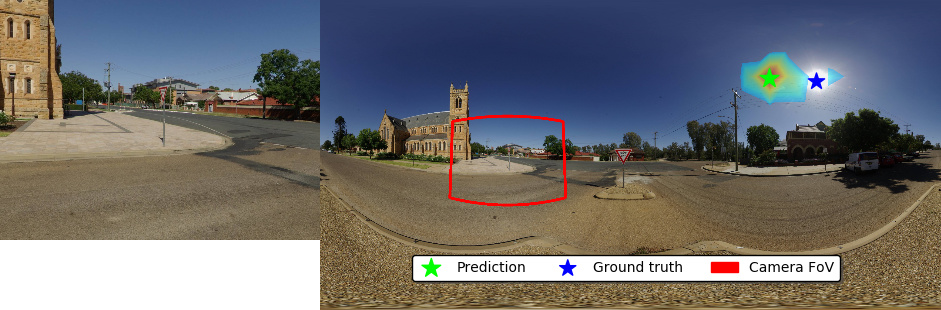

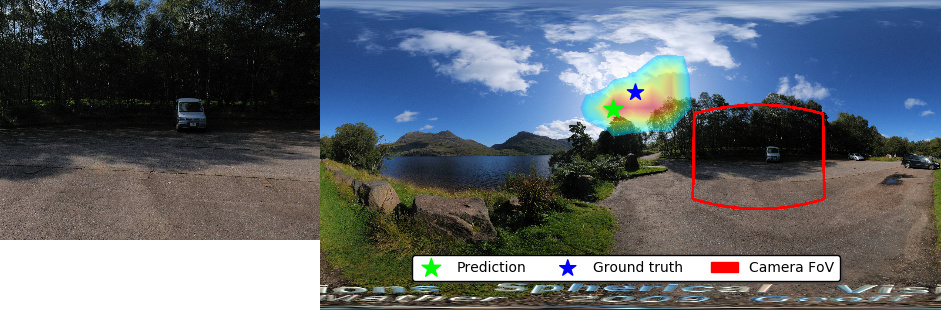

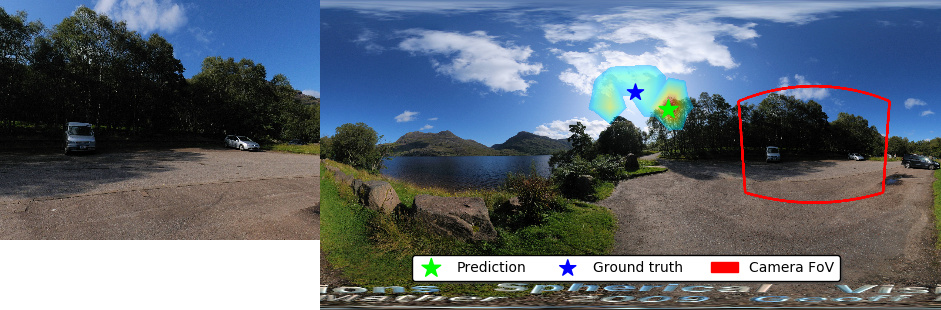

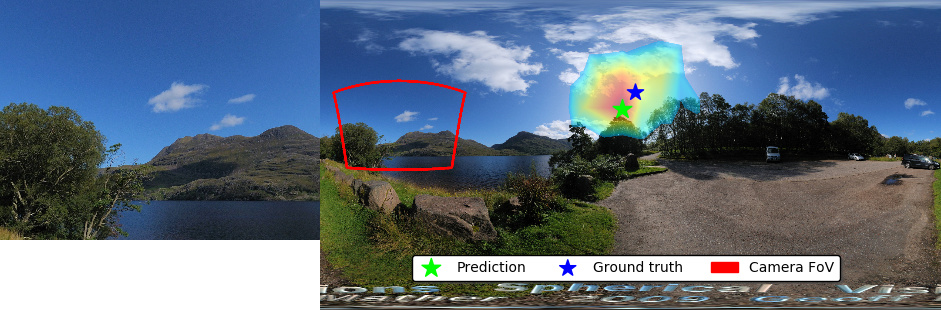

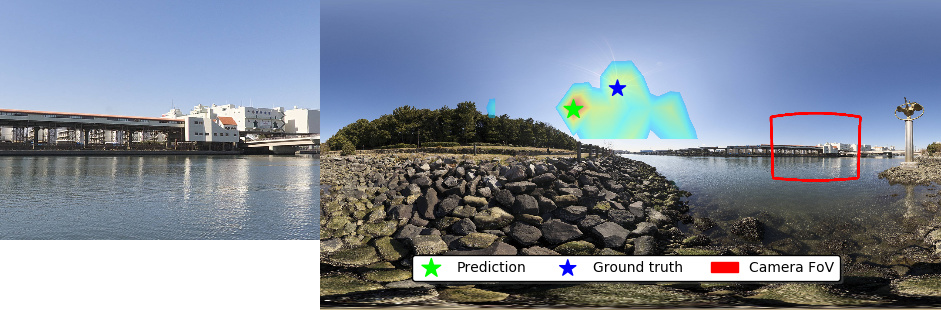

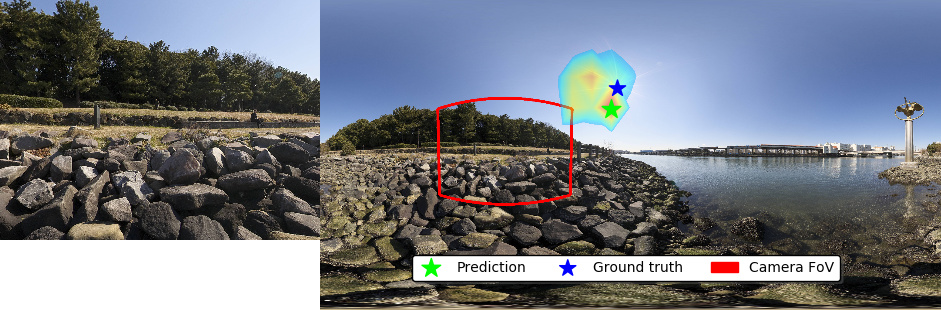

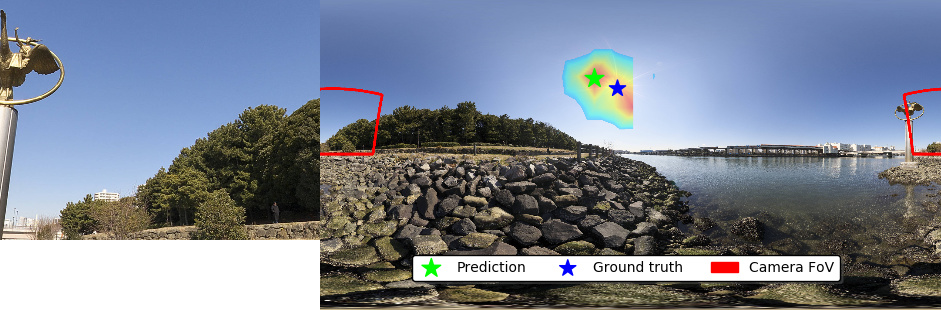

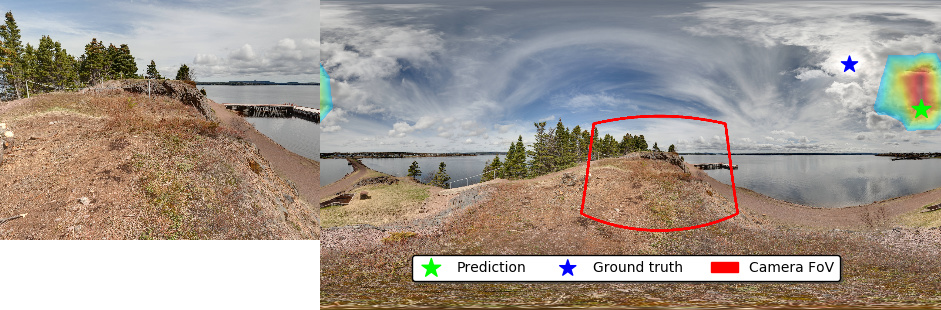

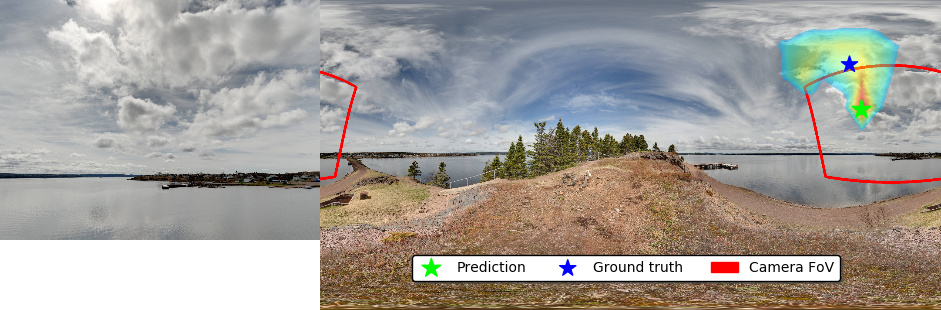

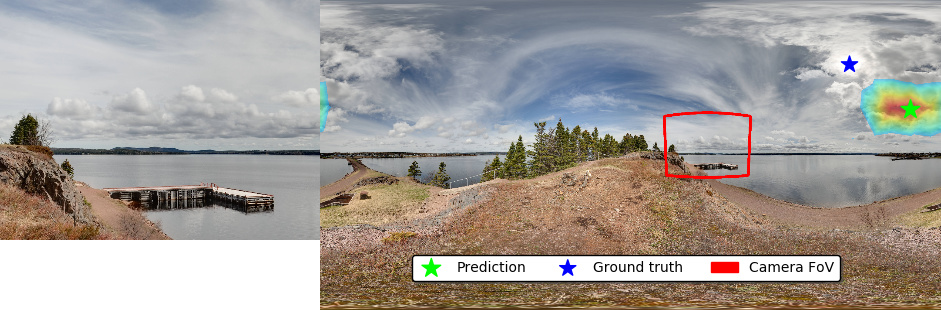

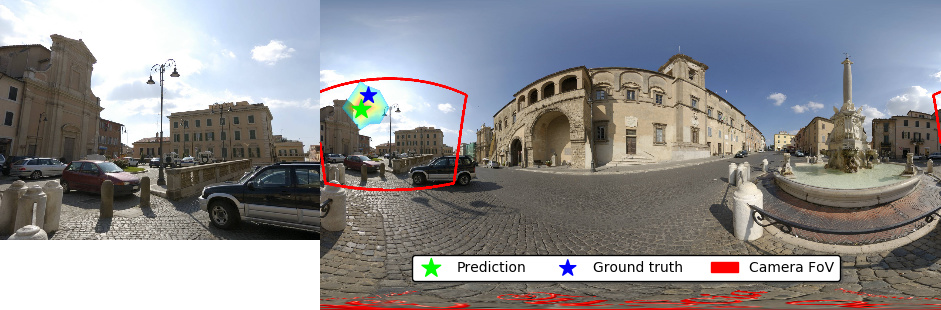

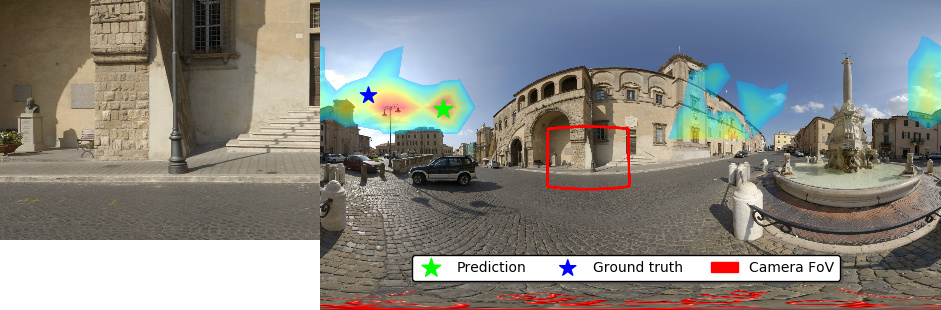

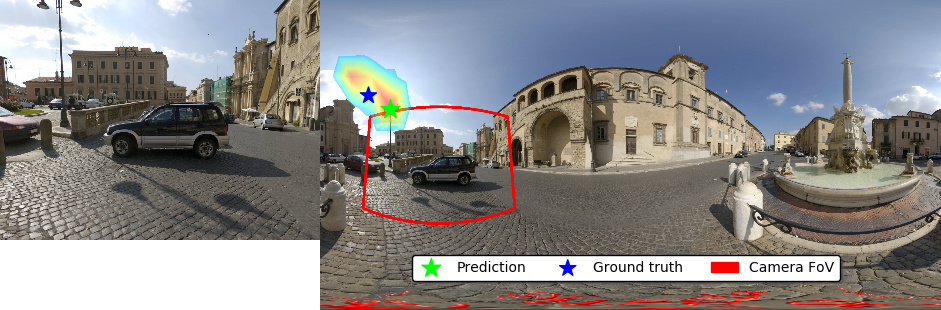

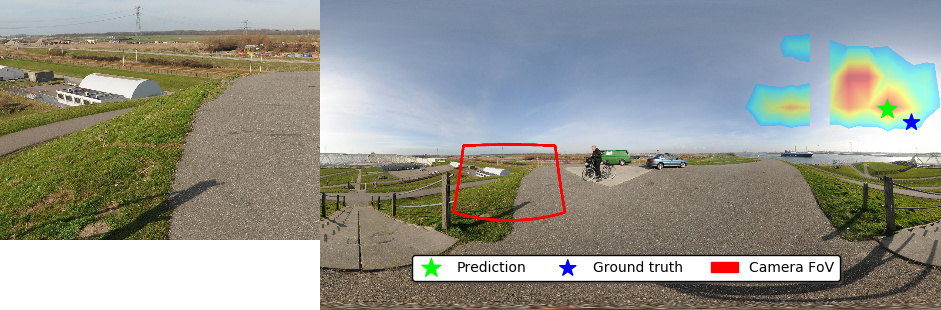

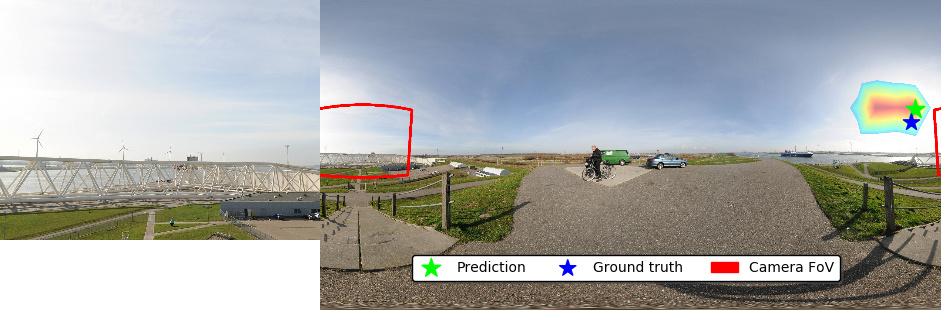

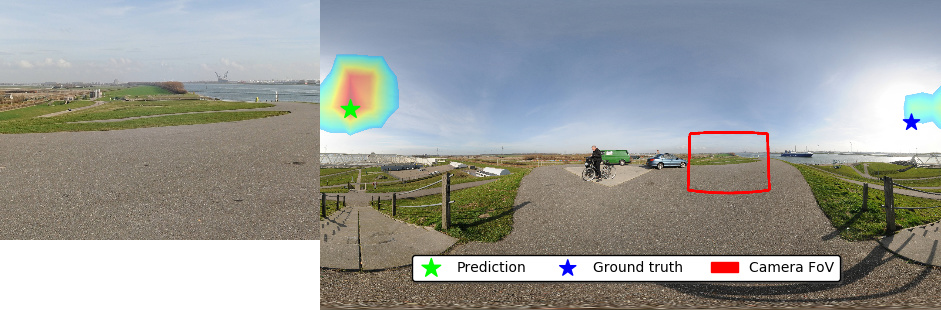

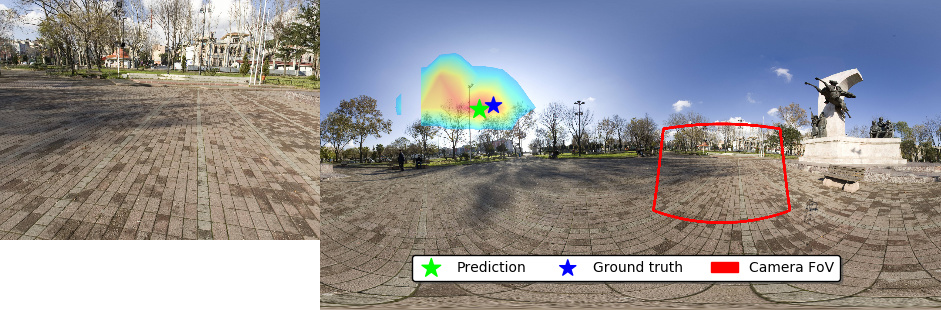

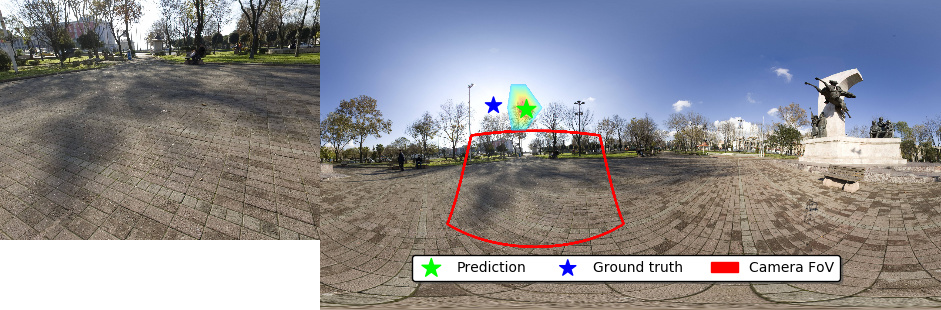

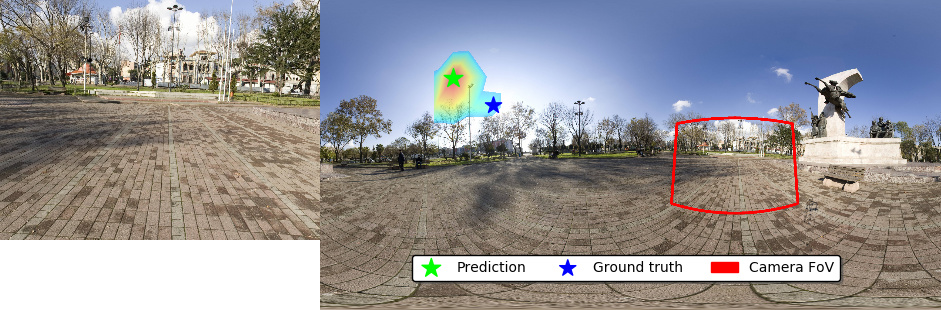

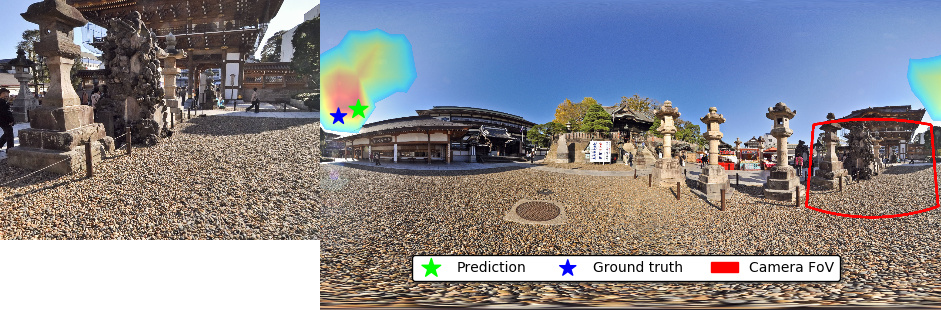

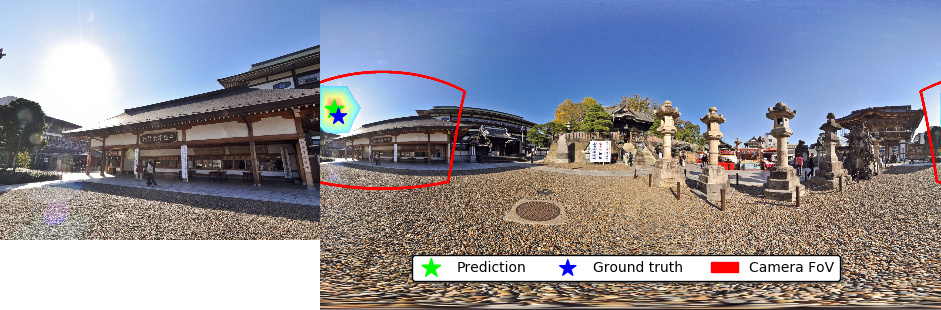

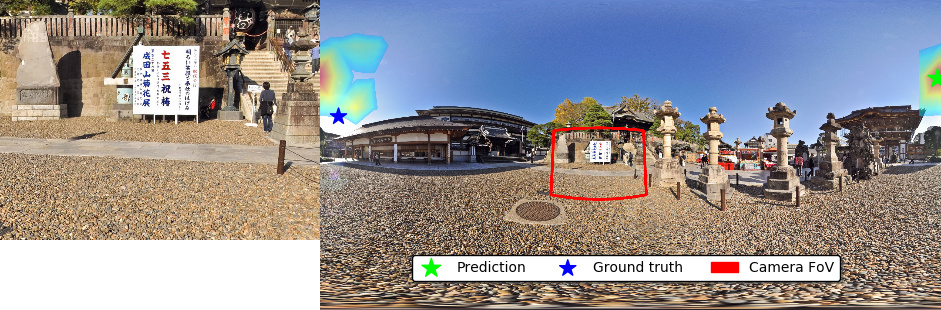

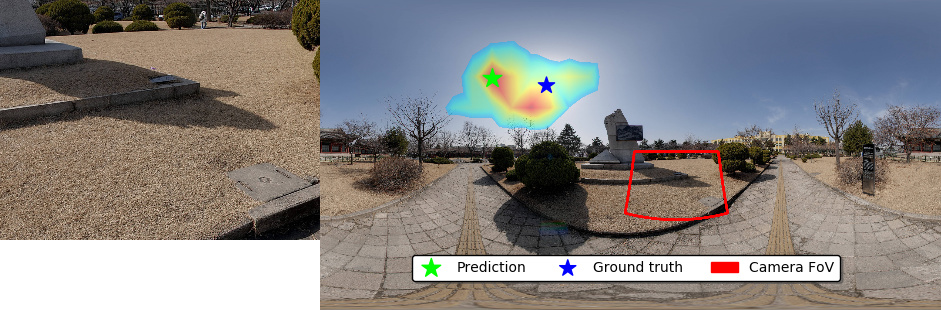

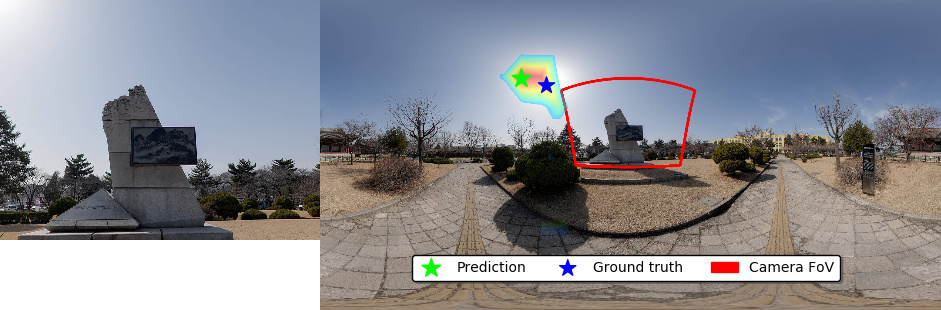

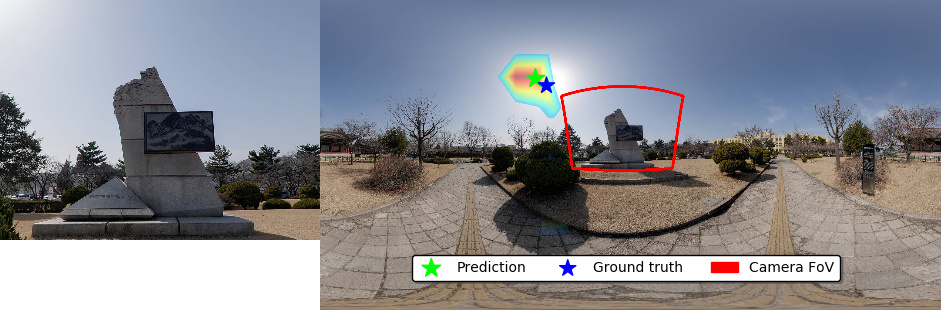

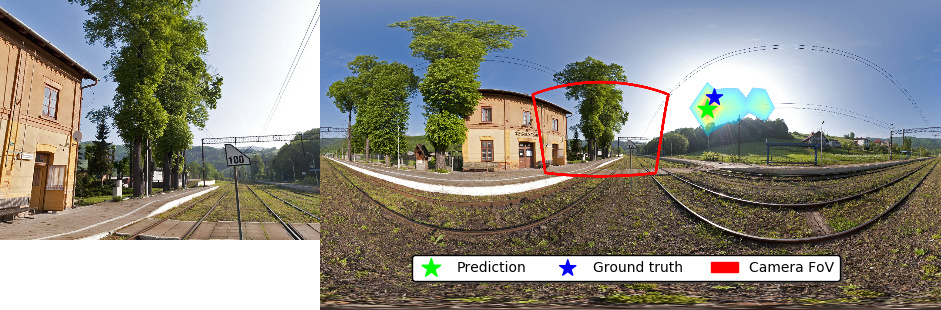

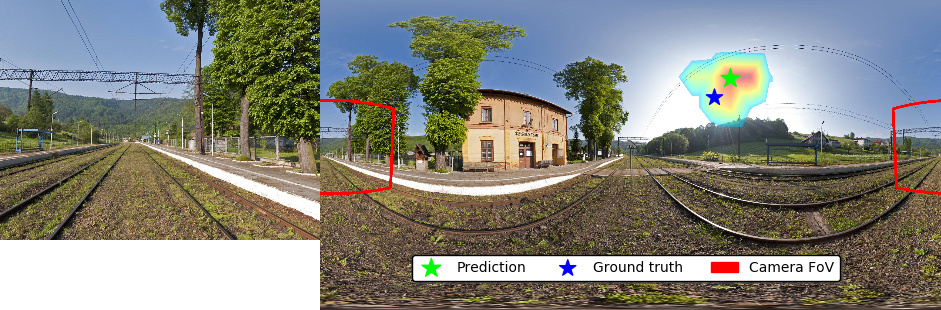

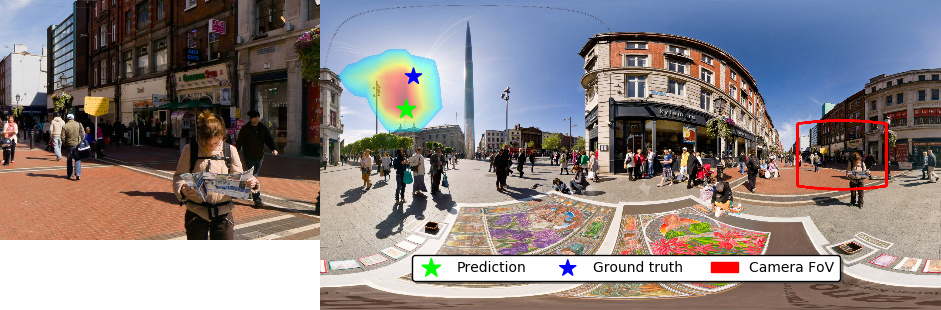

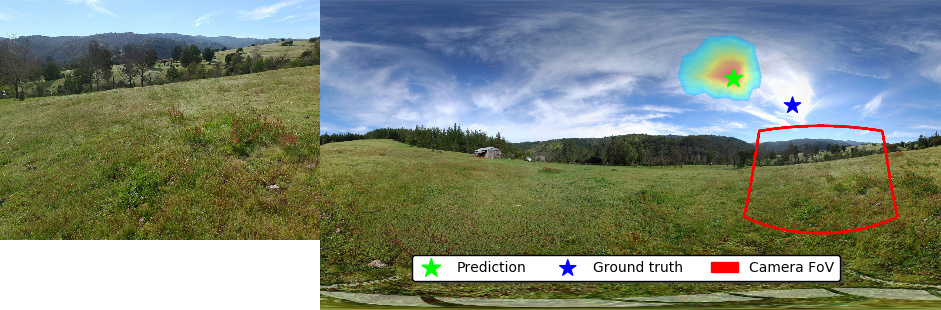

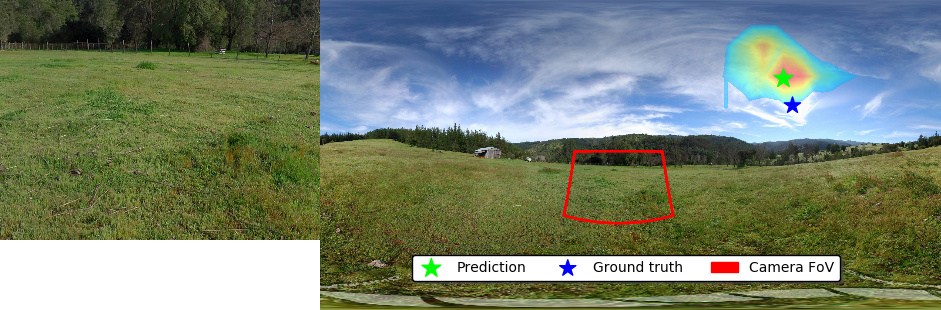

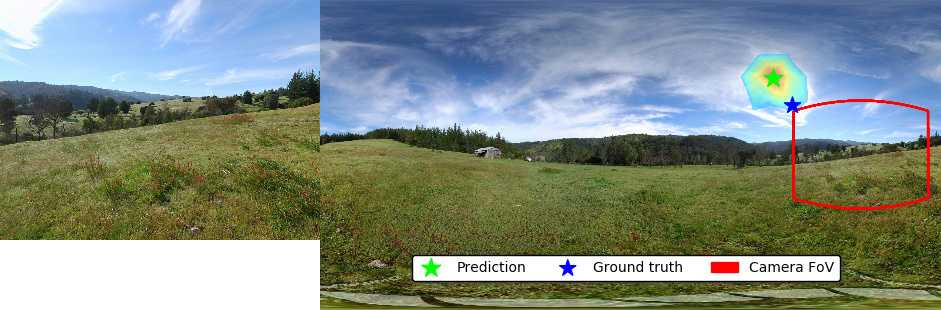

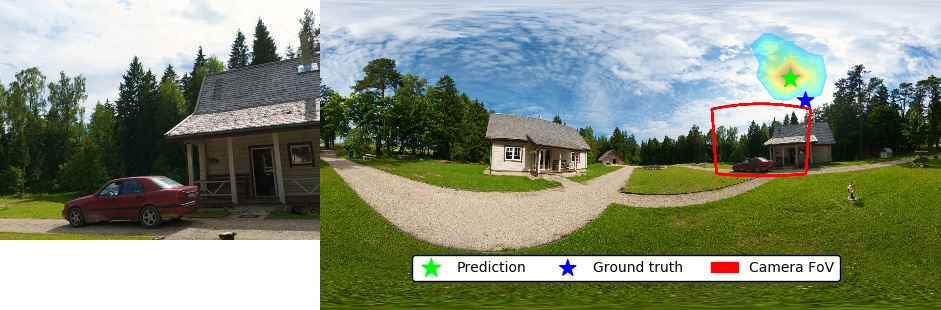

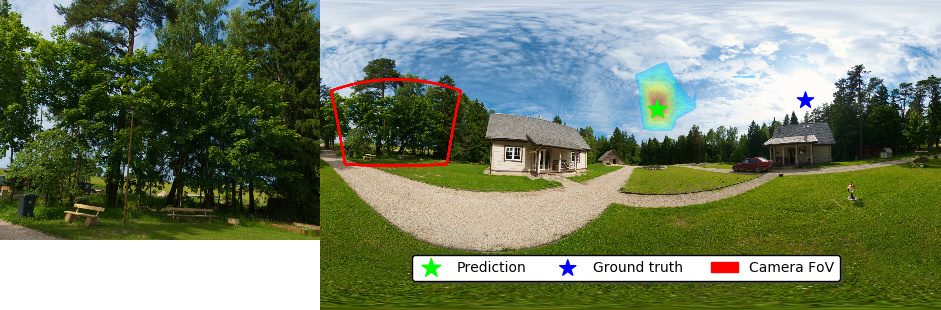

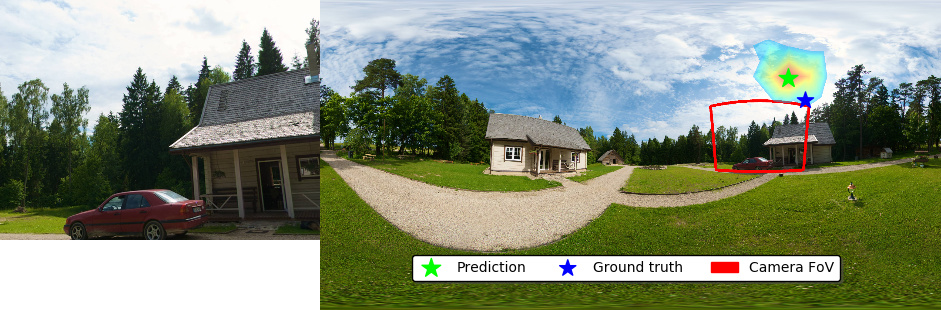

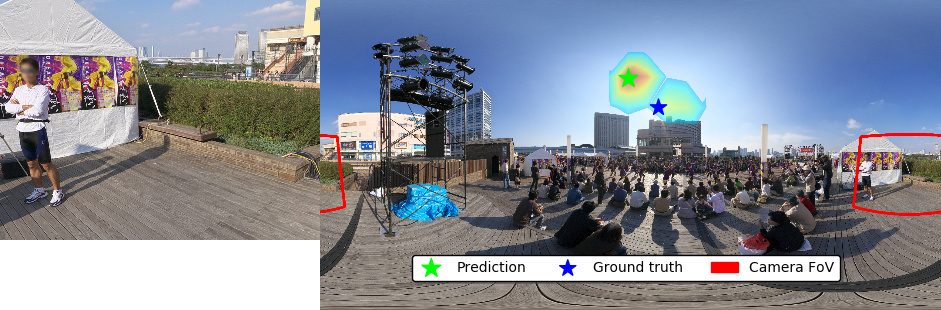

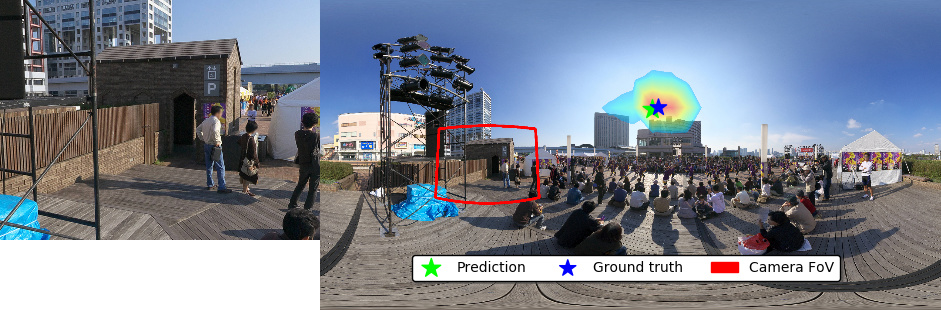

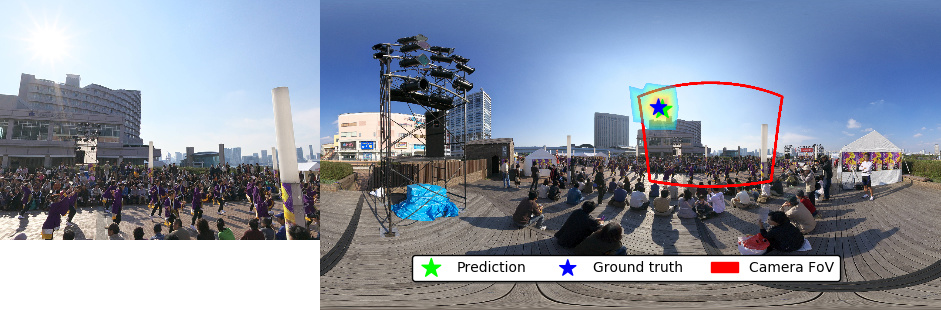

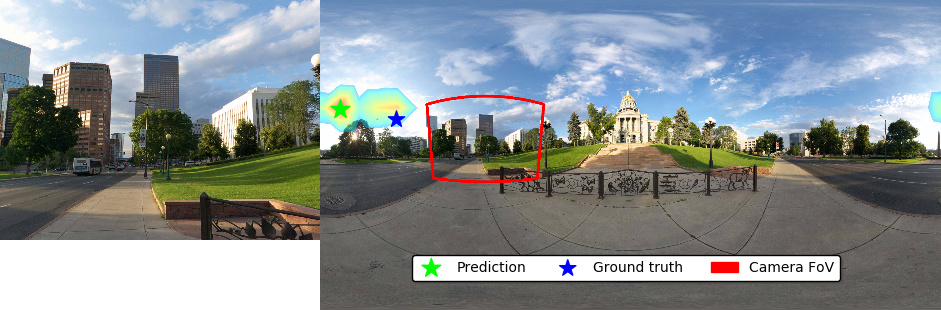

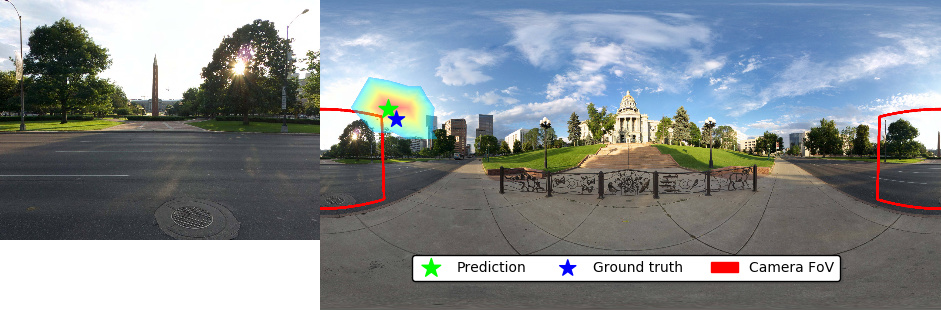

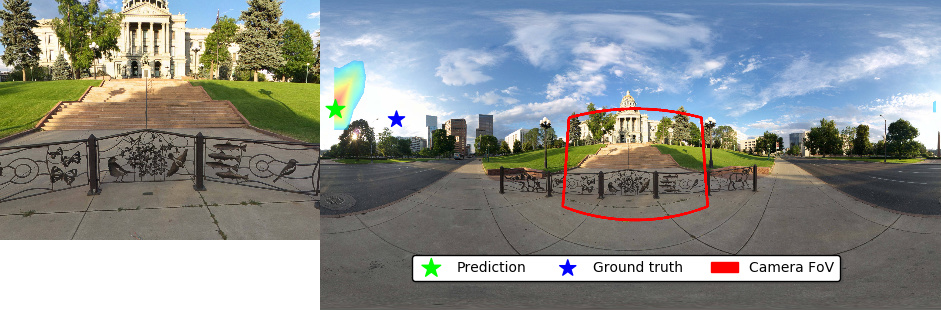

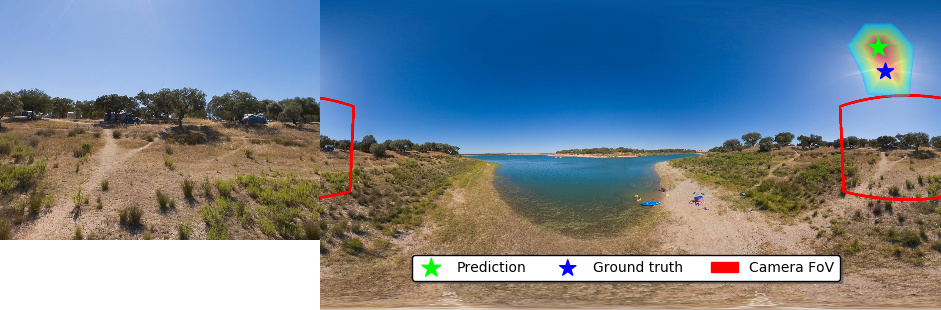

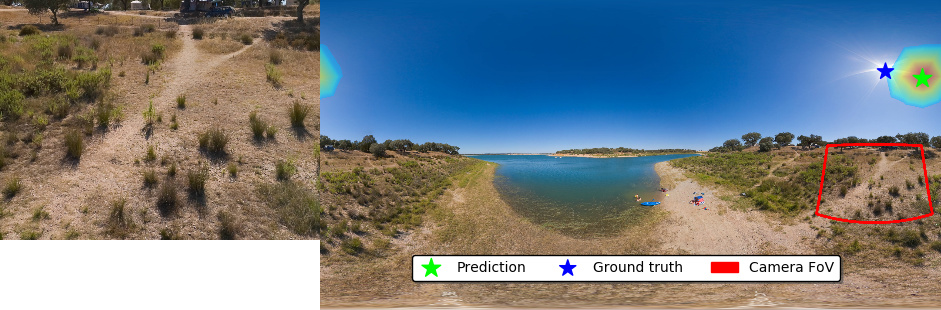

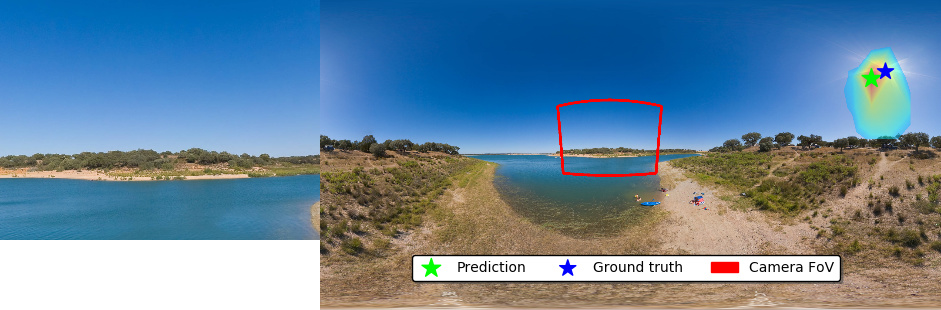

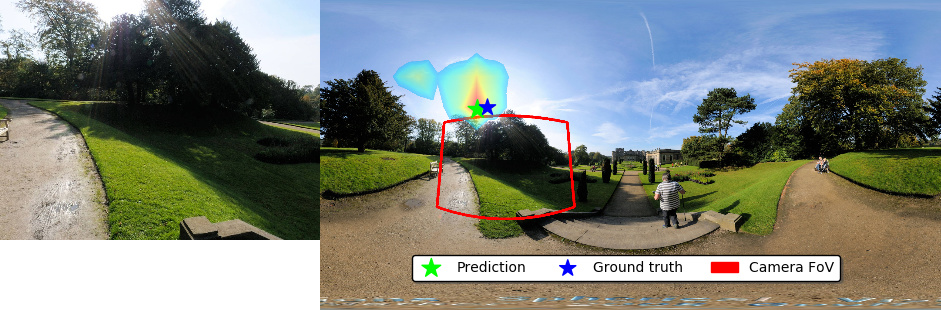

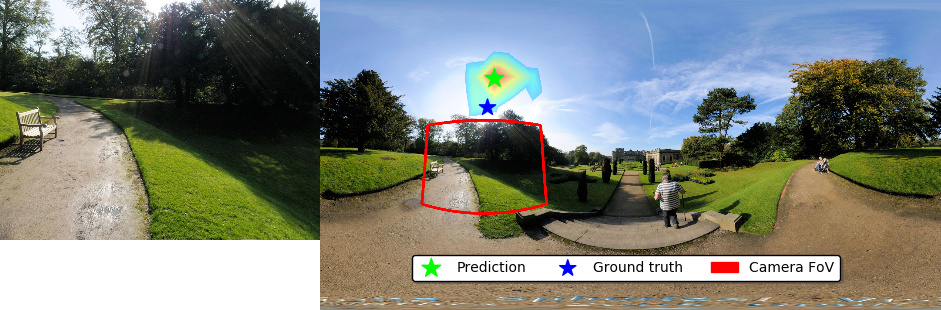

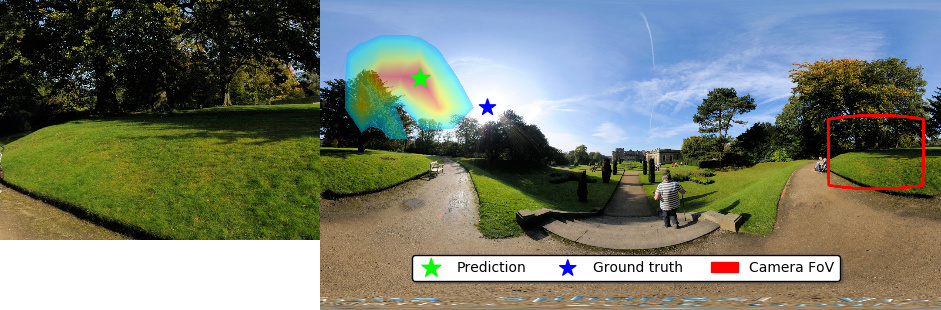

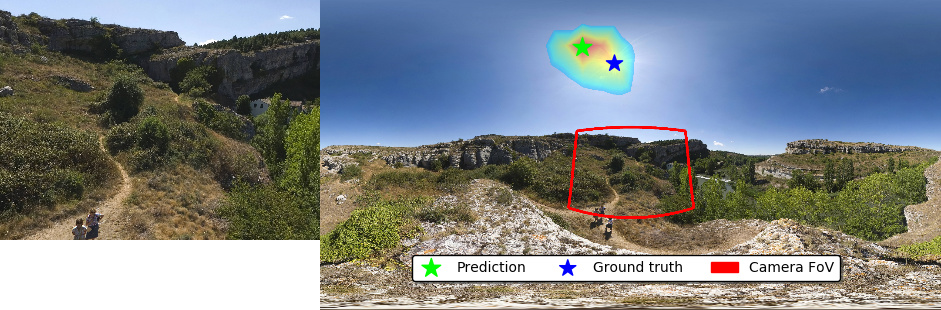

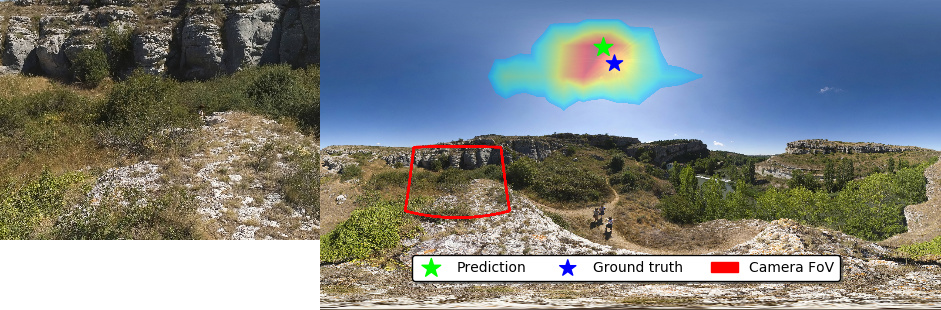

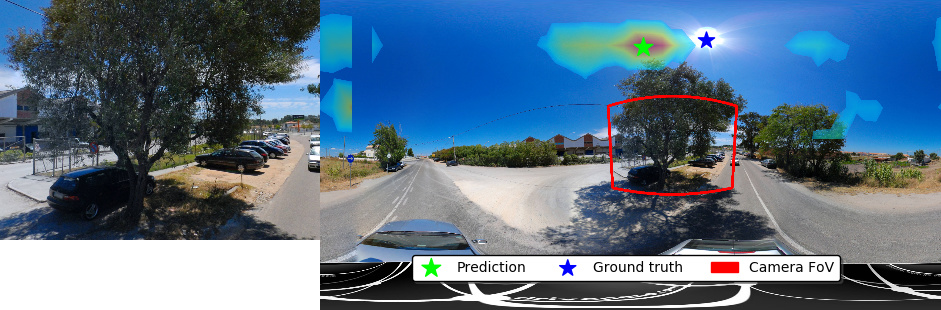

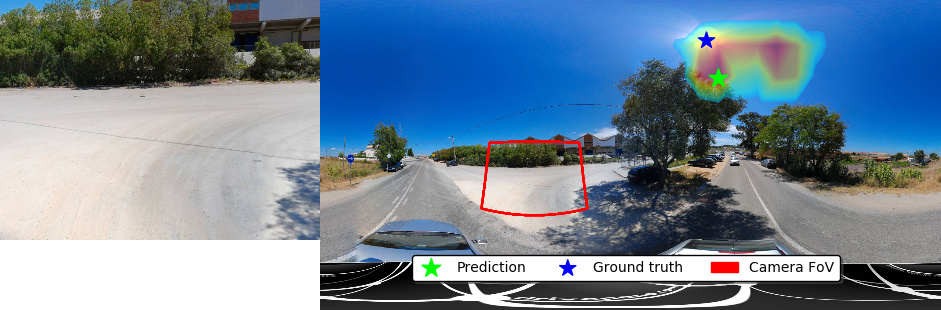

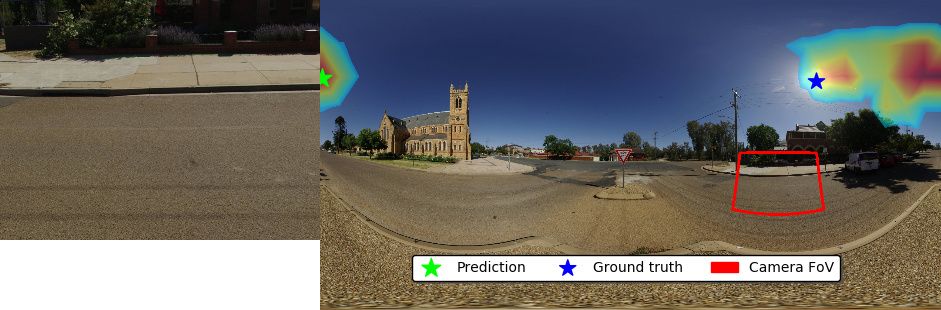

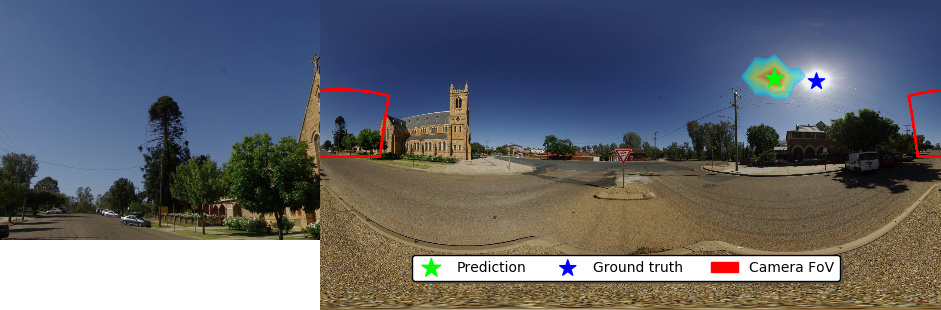

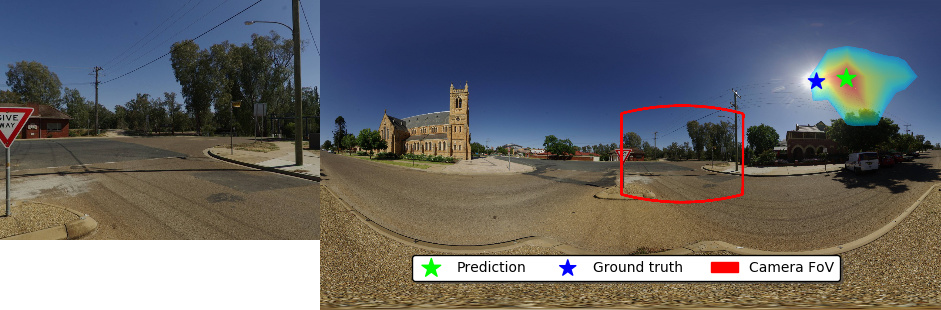

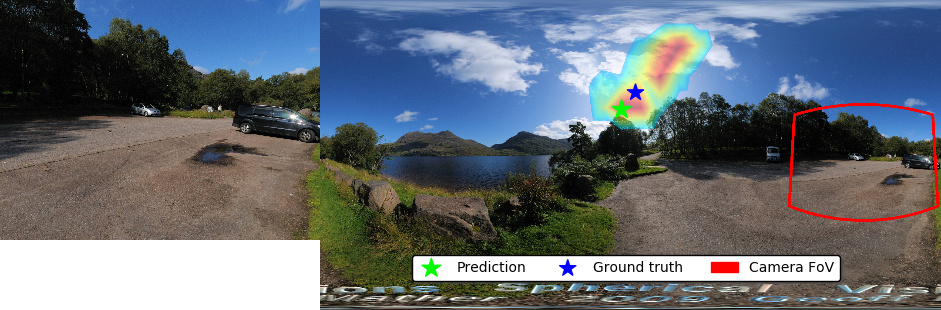

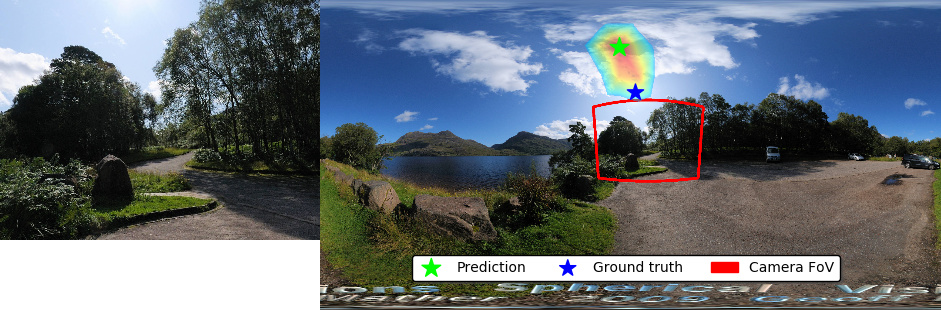

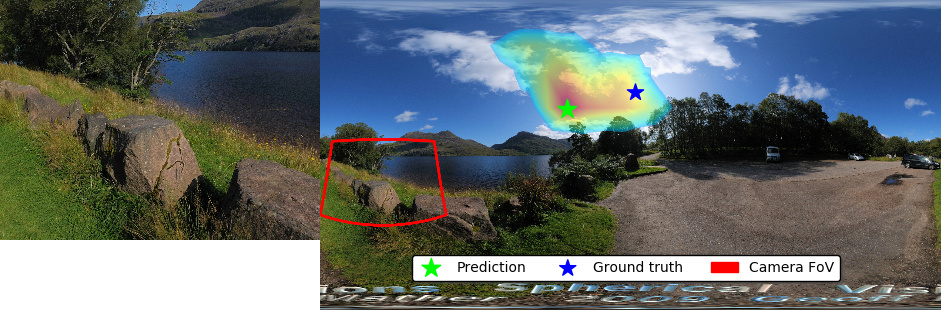

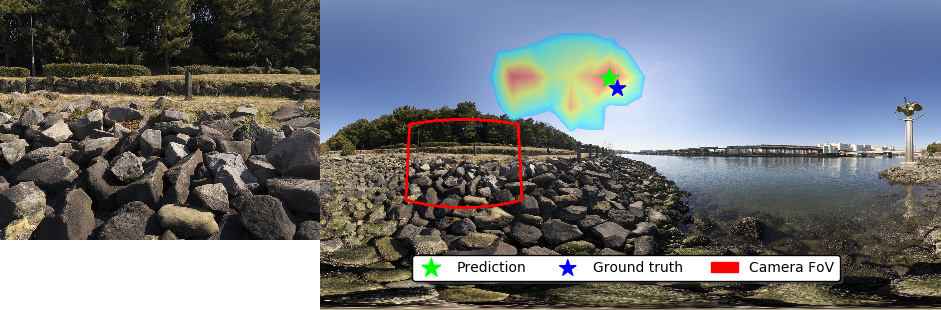

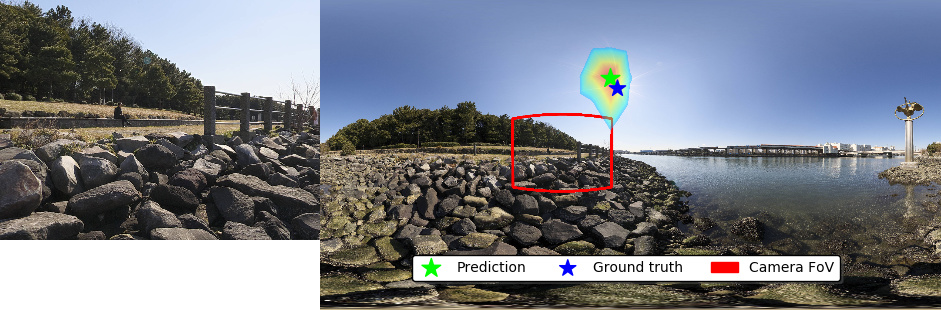

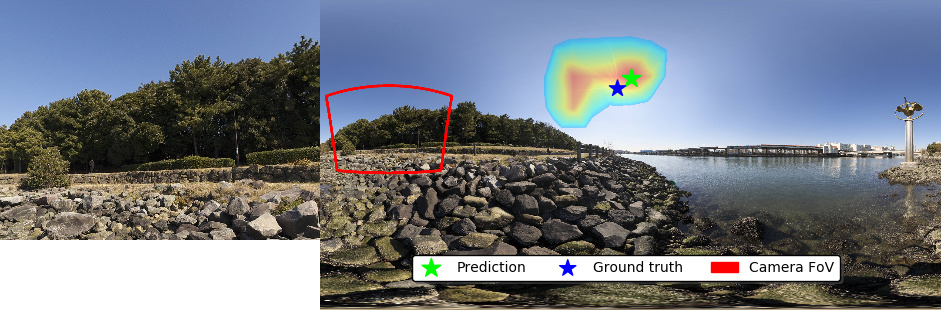

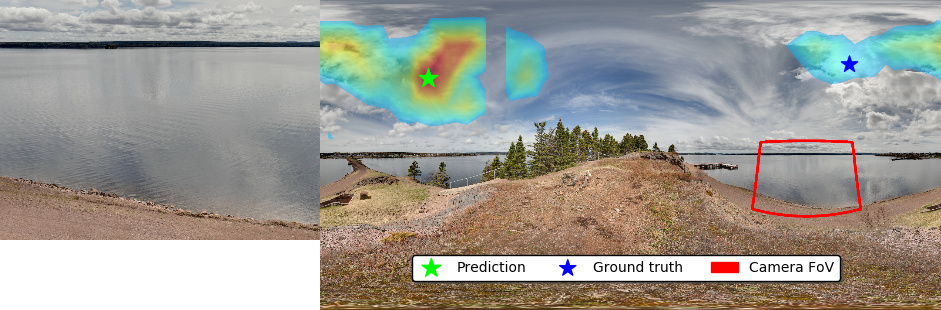

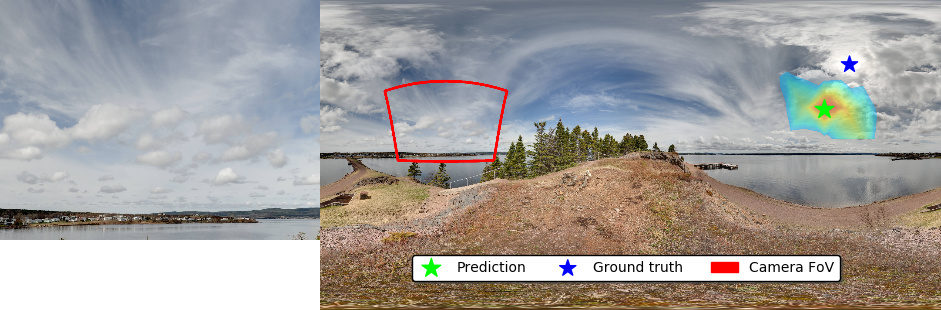

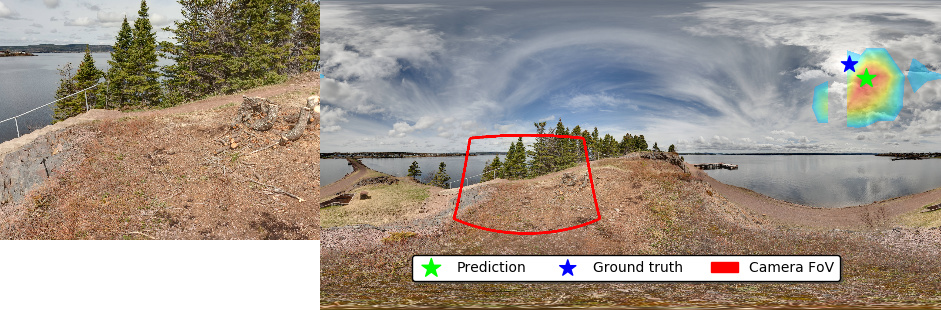

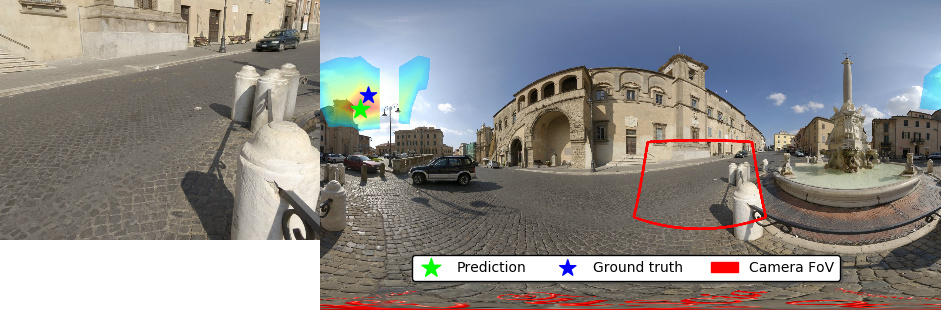

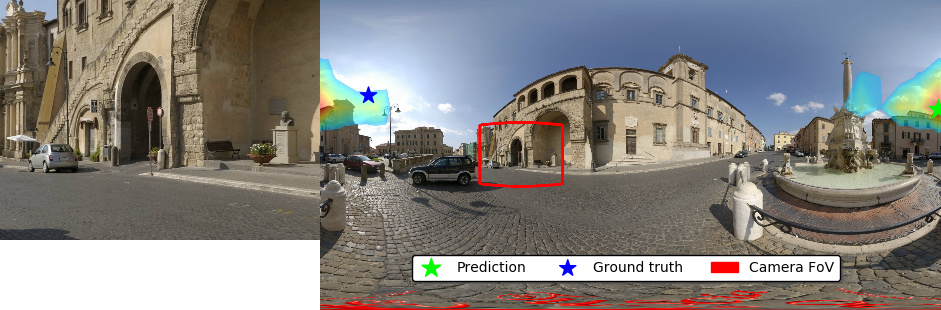

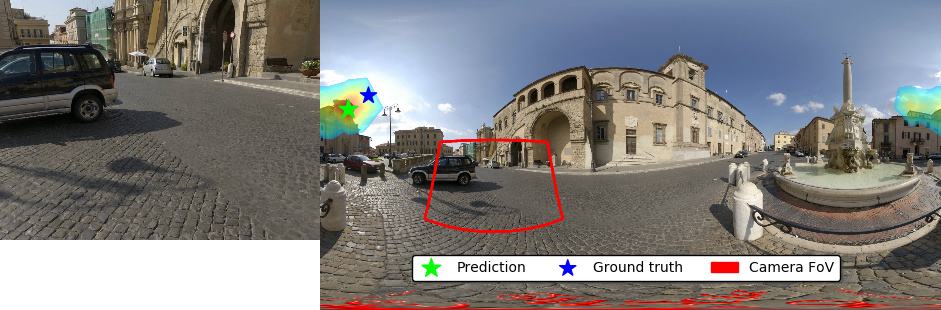

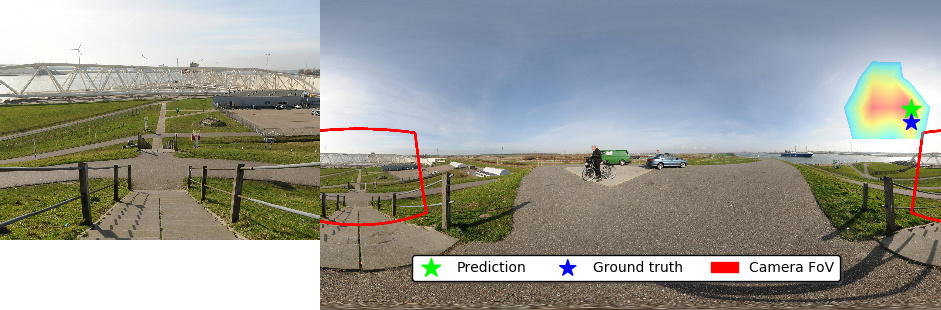

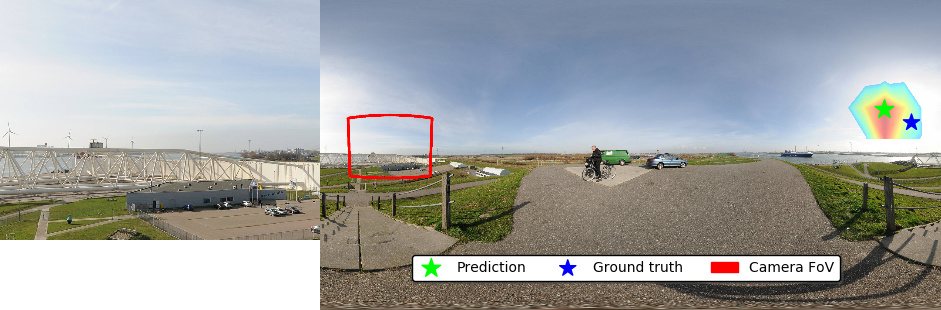

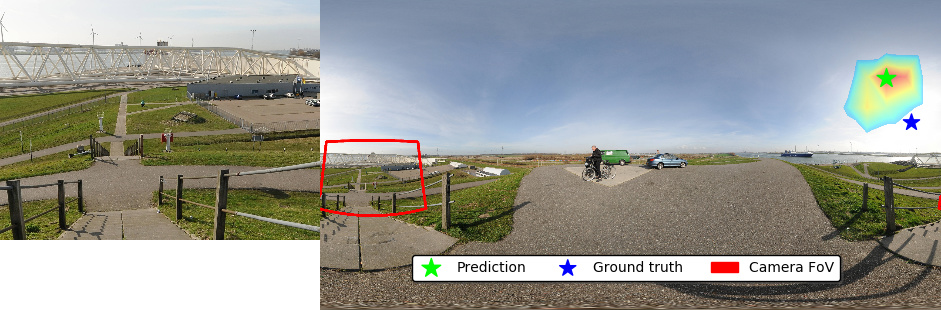

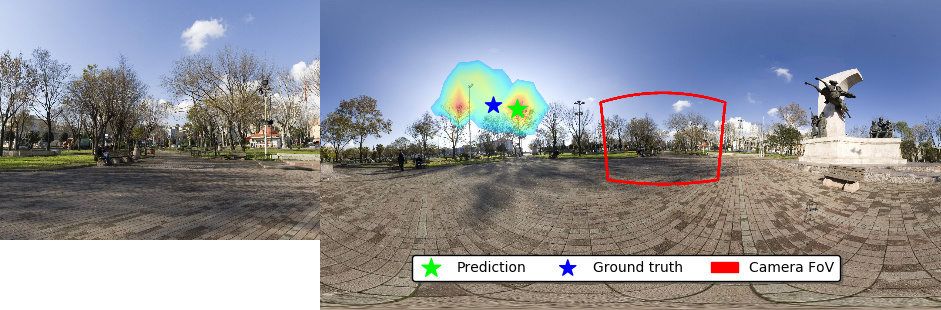

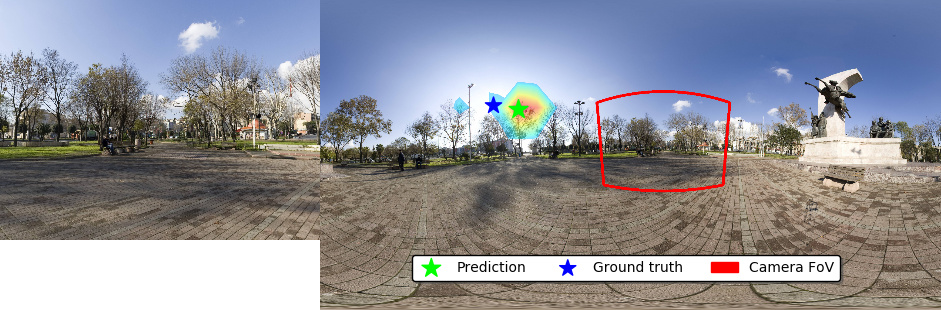

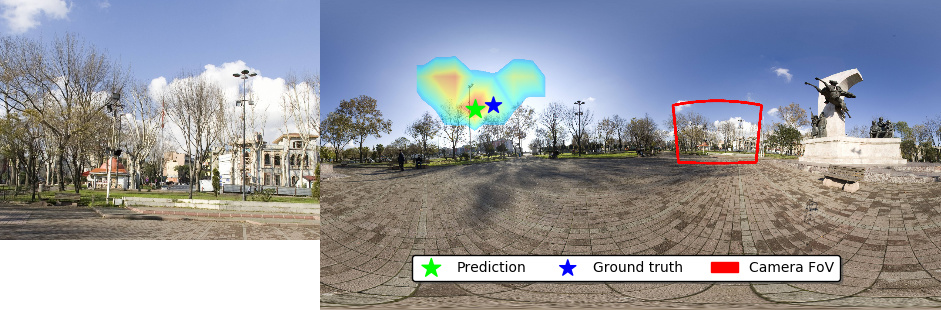

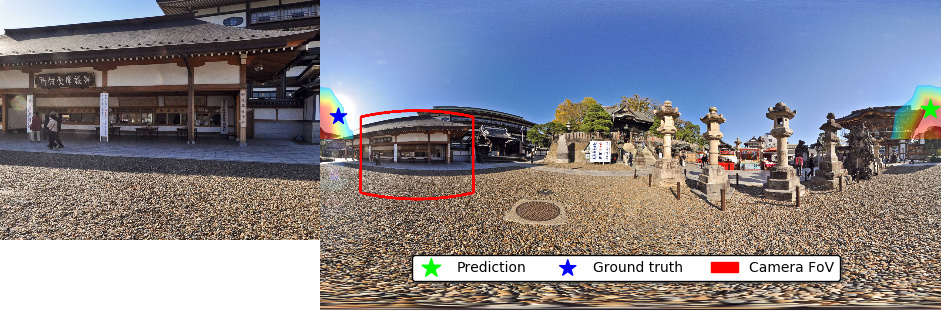

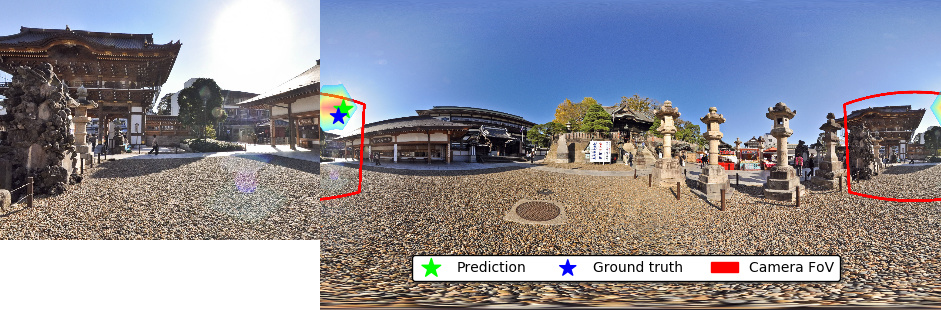

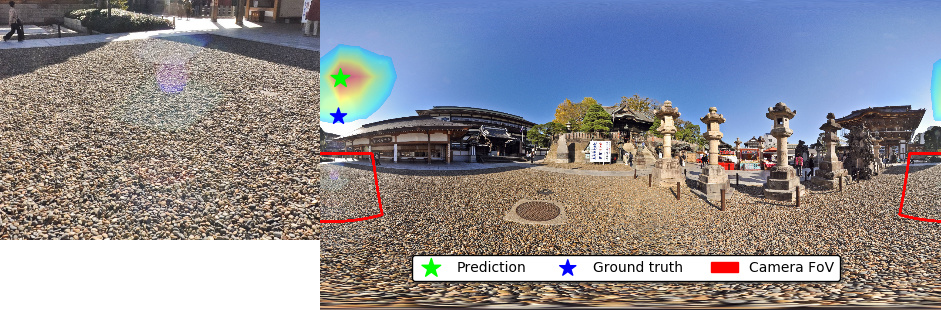

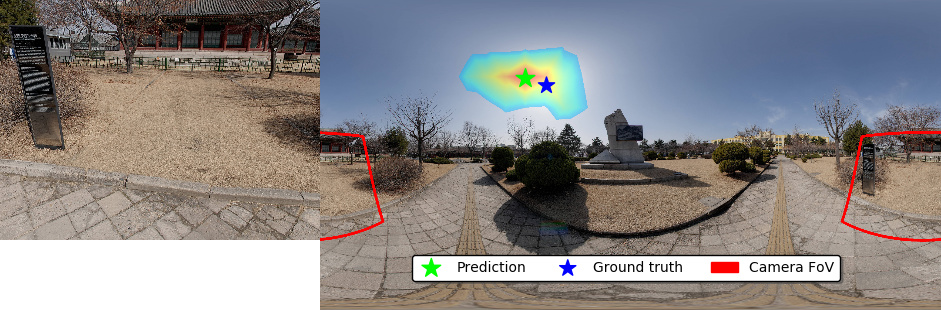

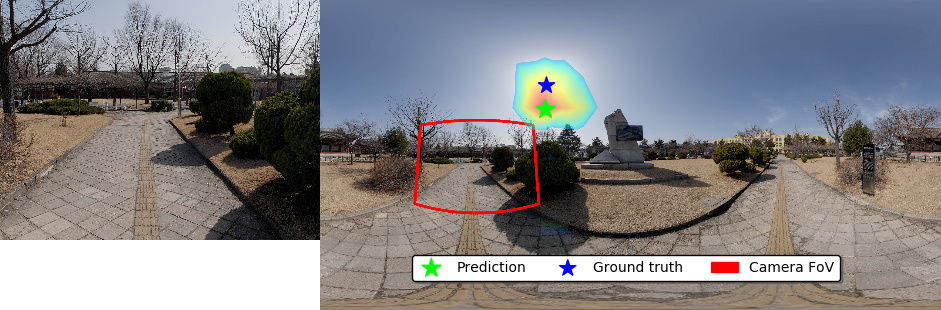

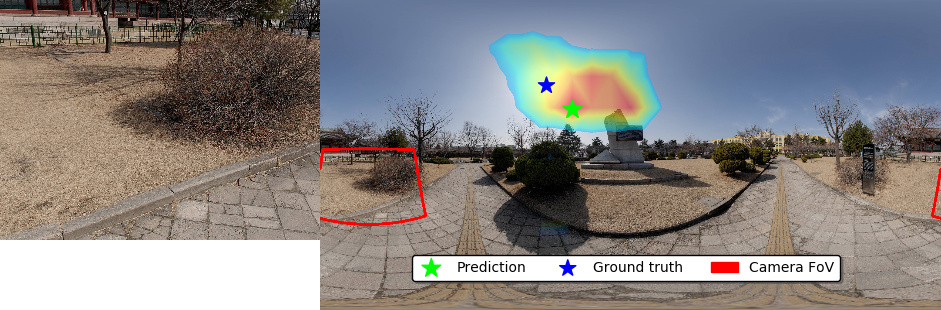

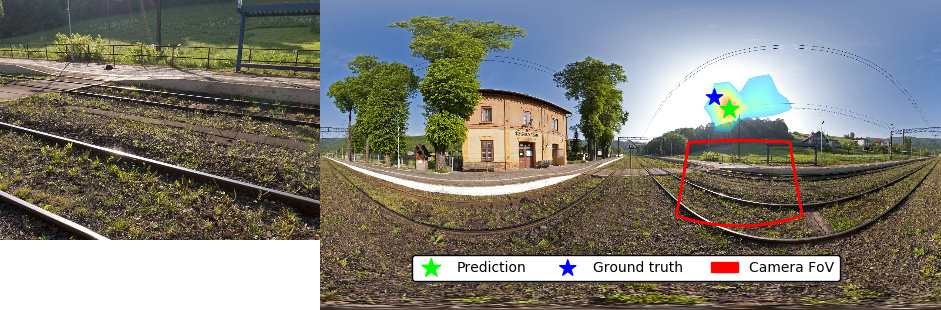

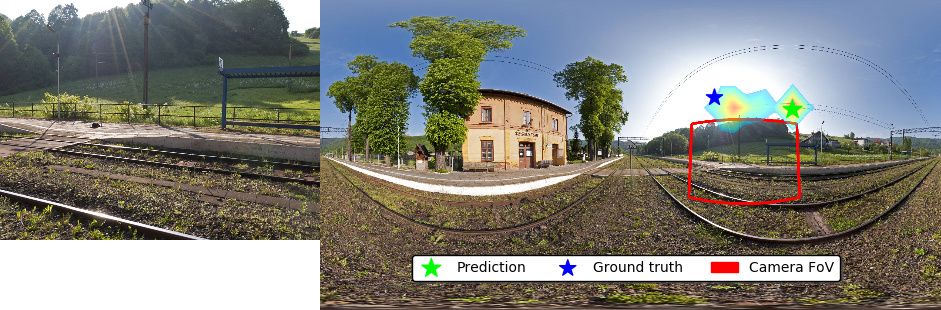

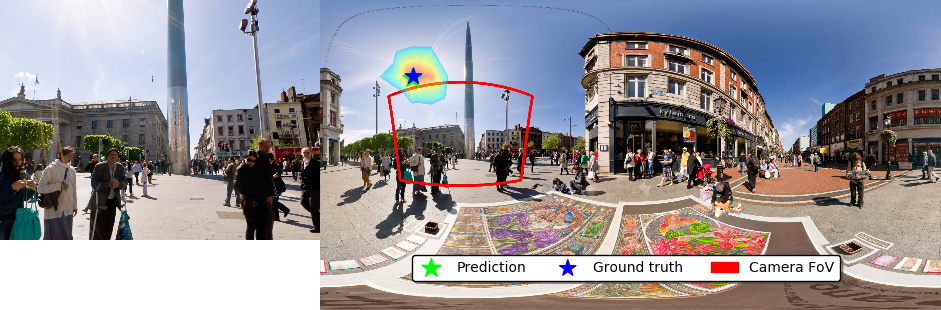

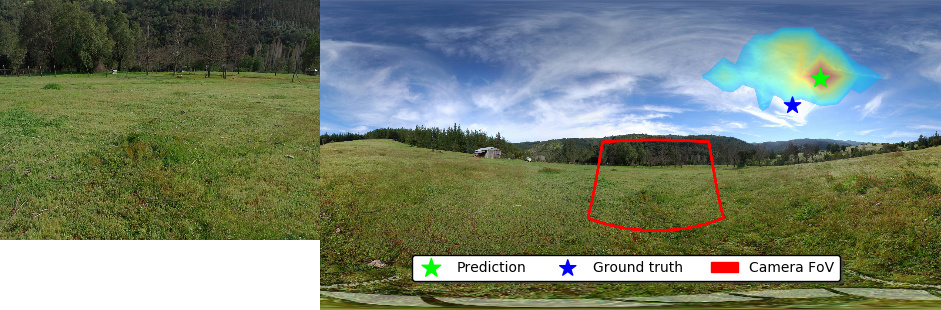

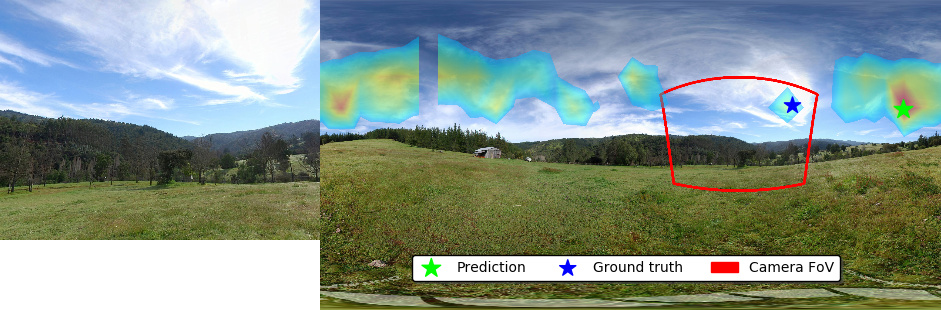

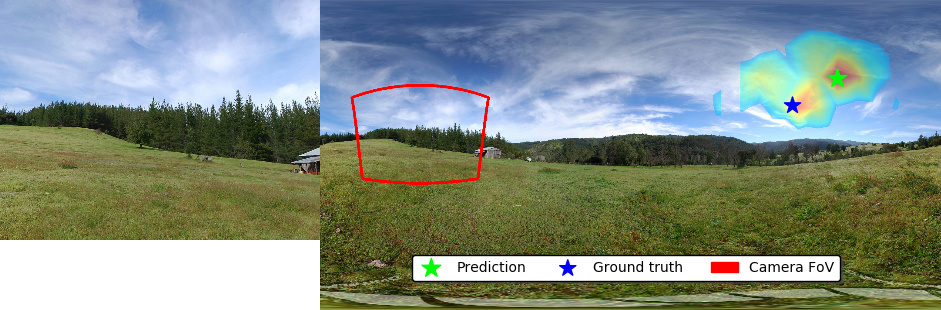

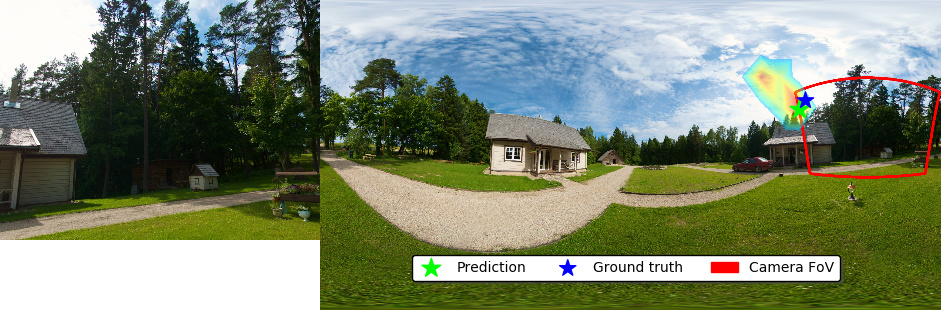

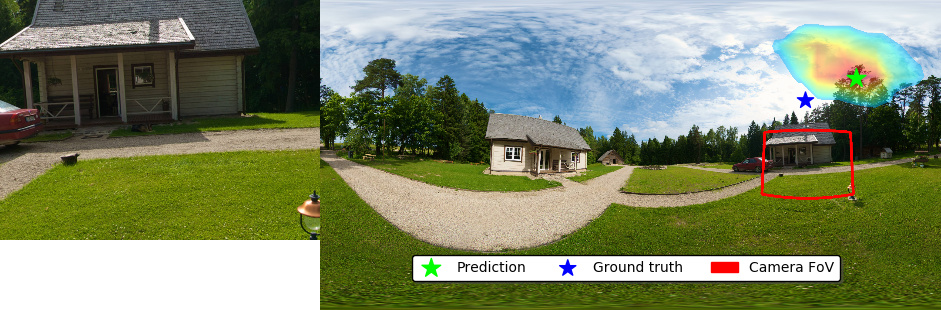

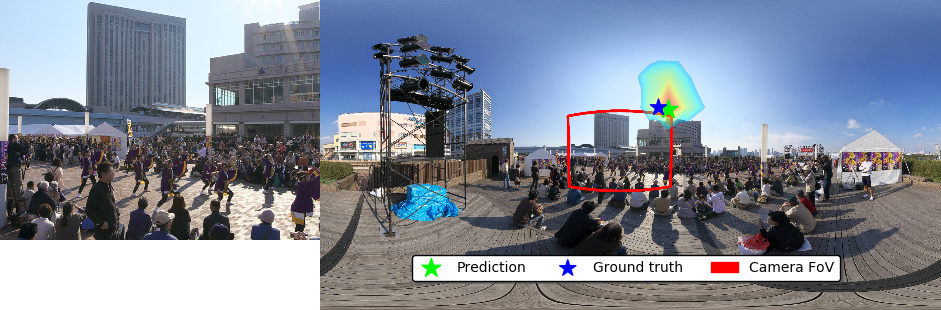

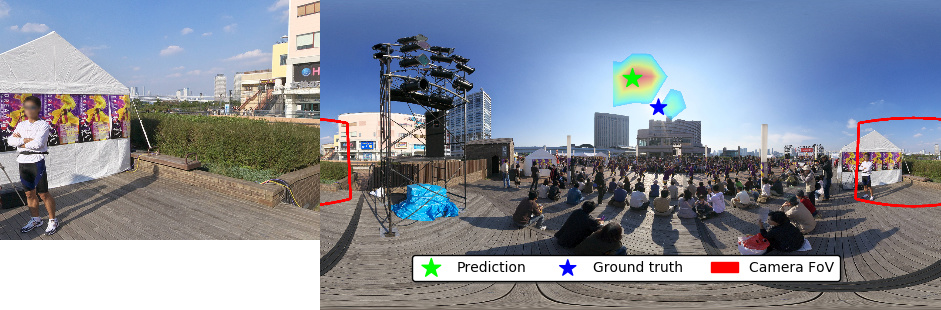

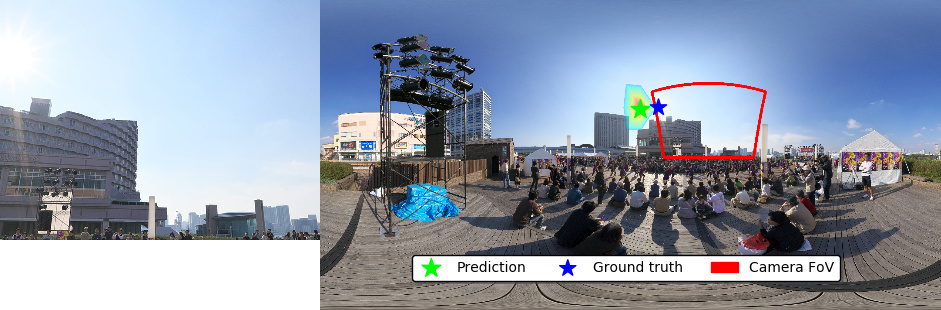

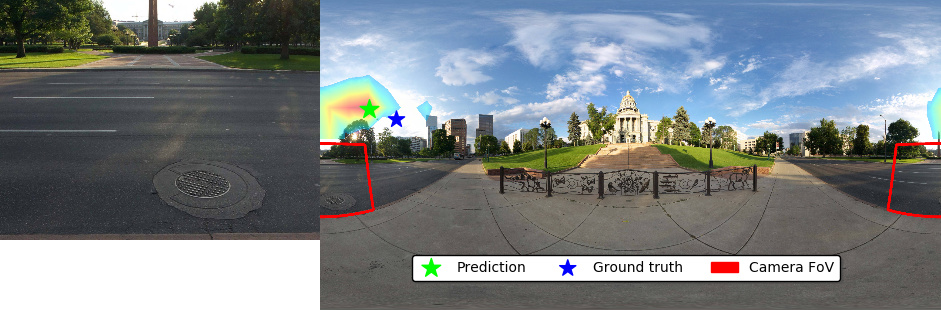

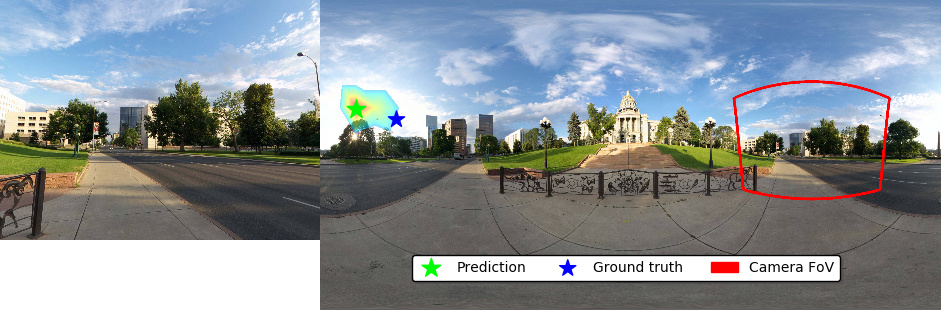

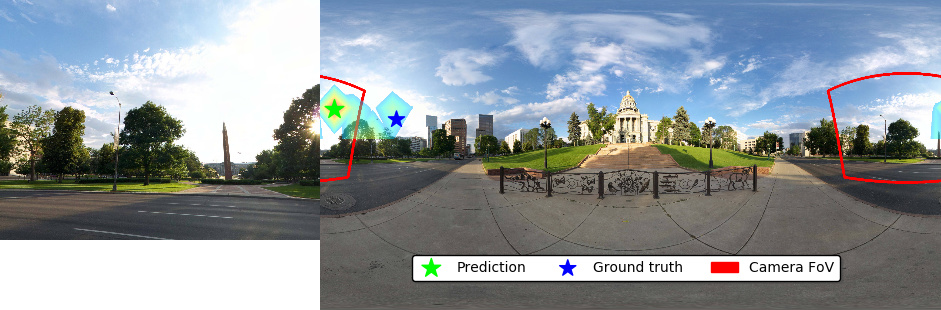

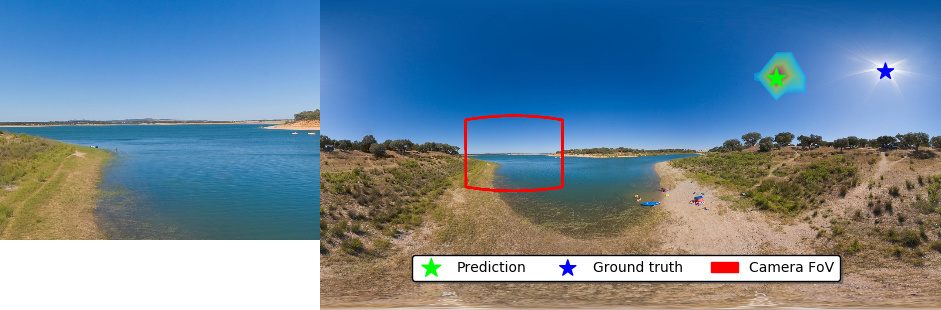

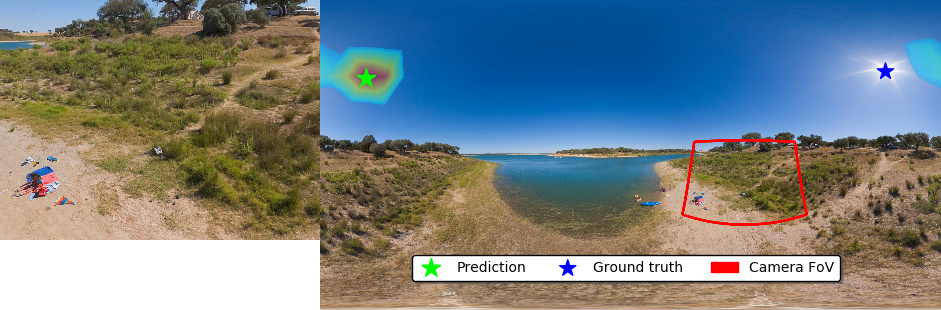

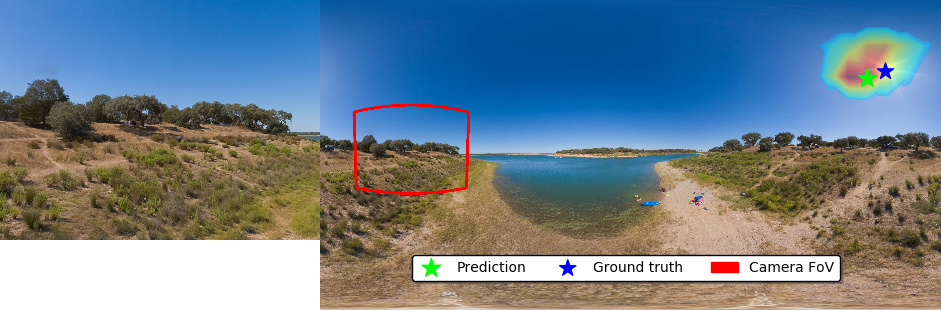

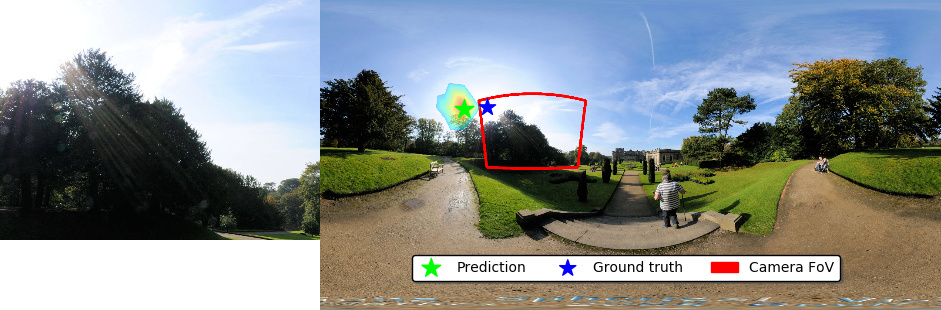

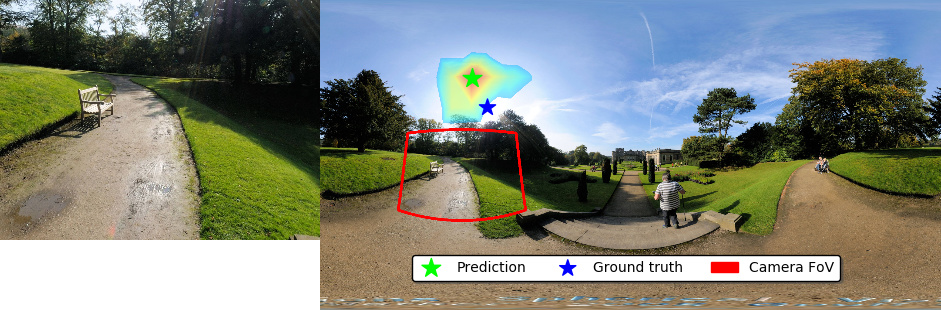

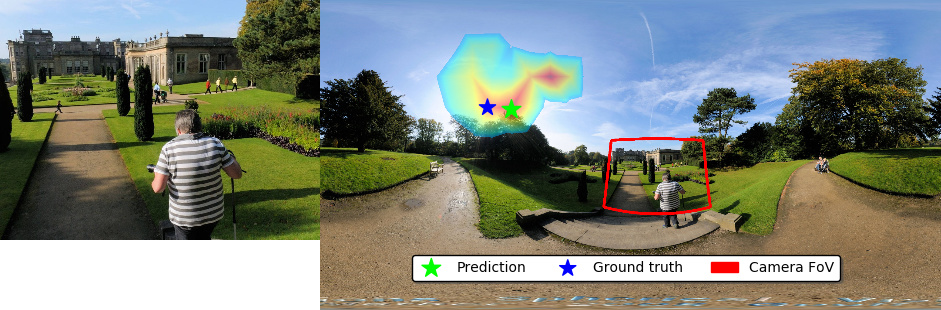

1. Sun position estimation

In this section, we present some additional sun position estimations, extending the results shown in fig. 6.

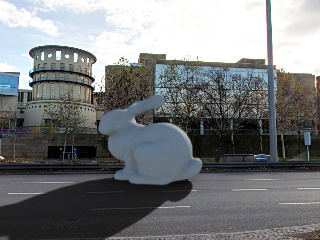

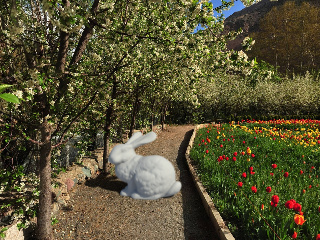
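One common way to score a sun position estimate is the angle between the estimated and ground-truth sun directions. The sketch below is illustrative only; it assumes sun positions are given as (azimuth, elevation) in degrees, uses a hypothetical axis convention (y up, azimuth measured from +z towards +x), and the helper names `sun_direction` and `angular_error_deg` are our own, not part of the paper's code.

```python
import math


def sun_direction(azimuth_deg, elevation_deg):
    """Unit vector for a sun position (assumed convention: y is up,
    azimuth is measured in the ground plane from +z towards +x)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.sin(az), math.sin(el), math.cos(el) * math.cos(az))


def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between two (azimuth, elevation) sun positions."""
    a = sun_direction(*estimate)
    b = sun_direction(*ground_truth)
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp so rounding noise cannot push acos outside its domain.
    dot = max(-1.0, min(1.0, dot))
    return math.degrees(math.acos(dot))


# Same azimuth, elevations 10 degrees apart: the error is about 10 degrees.
print(angular_error_deg((90.0, 30.0), (90.0, 40.0)))
```

Working with unit vectors rather than differencing azimuths directly avoids the wrap-around at 360 degrees and correctly shrinks azimuth errors for a sun near the zenith.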

2. Virtual object insertion

More virtual object insertion results on the SUN360 dataset are presented in this section, extending the results of fig. 9. For these examples, the virtual camera parameters are fixed (field of view = 60 degrees, elevation = 0 degrees). Please see section 3.2 for examples where the camera parameters are set by our CNN.
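To make the fixed virtual camera concrete, the sketch below samples a pinhole view with a given field of view and elevation from an equirectangular panorama. It is a minimal illustration, not the authors' pipeline. Several assumptions are ours: the field of view is treated as vertical, the panorama stores longitude across columns and latitude (+90 at the top to -90 at the bottom) down rows, there is no camera roll, and sampling is nearest-neighbour. The function name `crop_perspective` and its parameters are hypothetical.

```python
import numpy as np


def crop_perspective(pano, vfov_deg, elevation_deg, out_h, out_w, azimuth_deg=0.0):
    """Sample a pinhole view from an equirectangular panorama (nearest neighbour)."""
    H, W = pano.shape[:2]
    # Focal length in pixels from the (assumed vertical) field of view.
    f = (out_h / 2.0) / np.tan(np.radians(vfov_deg) / 2.0)
    # Pixel grid centred on the principal point: x right, y up, z forward.
    u, v = np.meshgrid(np.arange(out_w), np.arange(out_h))
    x = u - (out_w - 1) / 2.0
    y = -(v - (out_h - 1) / 2.0)
    z = np.full(x.shape, f)
    # Tilt the camera up by the elevation angle (rotation about the x axis).
    e = np.radians(elevation_deg)
    y_r = y * np.cos(e) + z * np.sin(e)
    z_r = -y * np.sin(e) + z * np.cos(e)
    norm = np.sqrt(x ** 2 + y_r ** 2 + z_r ** 2)
    # Ray direction -> longitude / latitude.
    lon = np.arctan2(x, z_r) + np.radians(azimuth_deg)
    lat = np.arcsin(y_r / norm)
    # Equirectangular lookup; longitude wraps around, latitude is clamped.
    cols = np.floor((lon + np.pi) / (2 * np.pi) * W).astype(int) % W
    rows = np.clip(np.floor((np.pi / 2 - lat) / np.pi * H).astype(int), 0, H - 1)
    return pano[rows, cols]


# Fixed camera used for the examples in this section: 60 degrees FoV, 0 degrees elevation.
pano = np.random.rand(512, 1024, 3)
view = crop_perspective(pano, vfov_deg=60.0, elevation_deg=0.0, out_h=240, out_w=320)
print(view.shape)  # (240, 320, 3)
```

A production implementation would typically use bilinear interpolation instead of nearest-neighbour lookup to avoid aliasing.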

3. Camera parameters evaluation

3.1 Parameter estimation performance

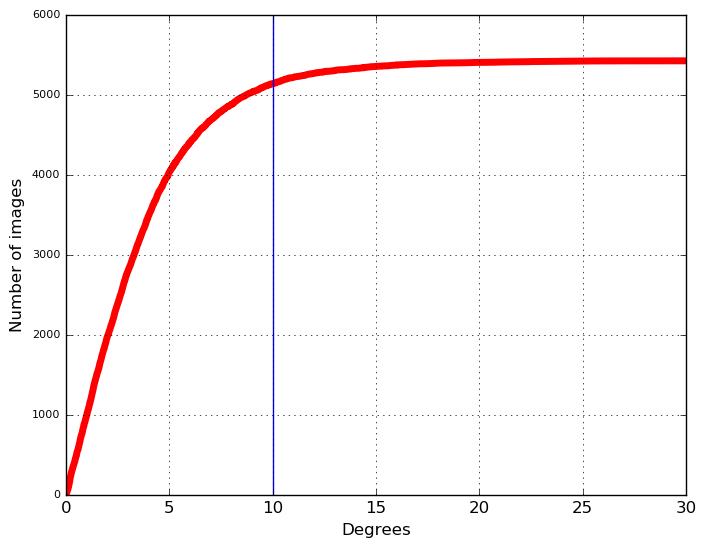

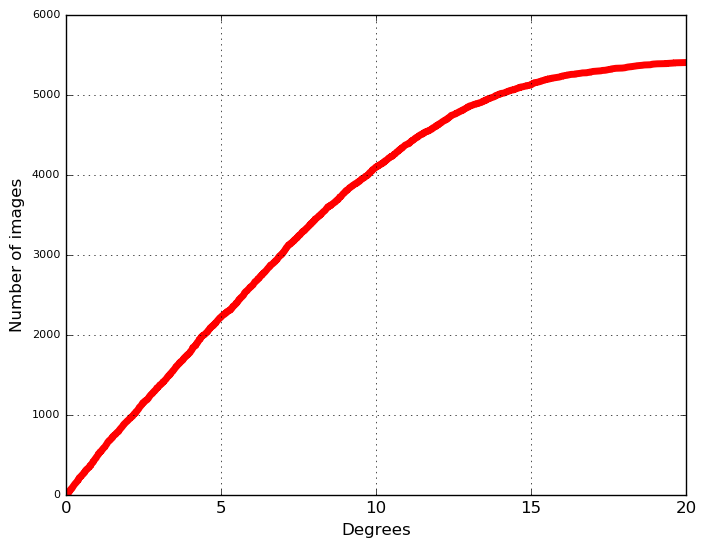

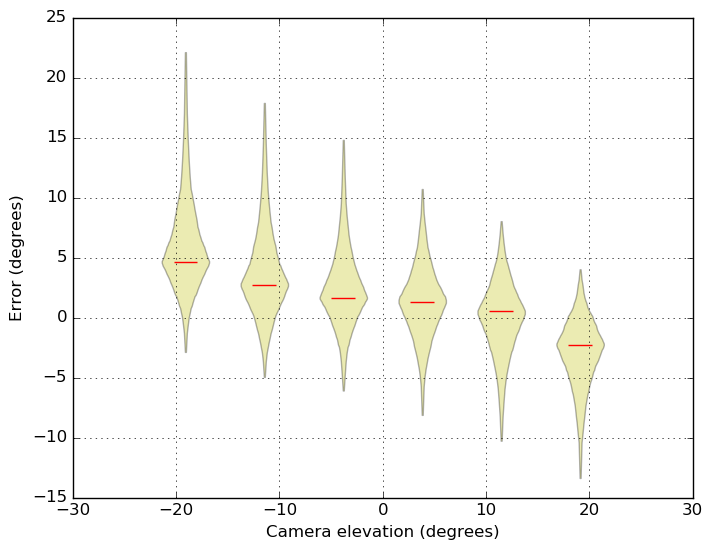

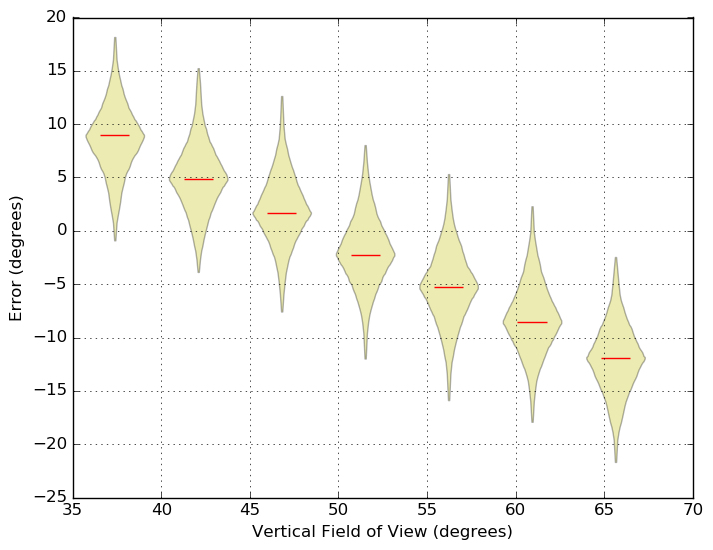

In this section, we show a quantitative evaluation of the neural network's estimation performance on the camera elevation (a)-(b) and the camera field of view (c)-(d). The range of camera elevation in our dataset is [-20, 20] degrees, with an absolute median of 10 degrees, as shown by the blue bar in the cumulative distribution function (a). Note that estimating camera elevation is analogous to finding the horizon. Our method recovers the camera elevation within 5 degrees of error roughly 75% of the time. The camera field of view is measured vertically and ranges over [35, 68] degrees in our dataset, with a median of 52 degrees. The proposed method recovers the vertical field of view within 10 degrees roughly 75% of the time. The error distributions in (b)-(d) are displayed as box-percentile plots, where the envelope width represents the percentile: the width is maximal at the median (also shown as a red bar), half that size at the 25th and 75th percentiles, and so on.
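Statements such as "within 5 degrees roughly 75% of the time" are readings of the empirical cumulative distribution of absolute errors, and the box-percentile envelope described above has a half-width proportional to min(p, 1 - p) at percentile p. The sketch below computes both quantities; the function names are hypothetical and the percentile convention (p = (i - 0.5) / n for the i-th sorted value) is one common choice, assumed here rather than taken from the paper.

```python
import numpy as np


def fraction_within(errors_deg, threshold_deg):
    """Empirical CDF of absolute errors evaluated at a threshold."""
    errors = np.abs(np.asarray(errors_deg, dtype=float))
    return float(np.mean(errors <= threshold_deg))


def box_percentile_width(errors_deg, max_width=1.0):
    """Sorted errors and the envelope width of a box-percentile plot.

    The width is max_width at the median and half of it at the 25th and
    75th percentiles, i.e. proportional to min(p, 1 - p).
    """
    values = np.sort(np.asarray(errors_deg, dtype=float))
    n = len(values)
    p = (np.arange(1, n + 1) - 0.5) / n
    width = max_width * np.minimum(p, 1.0 - p) / 0.5
    return values, width


errors = [1.0, 2.0, 3.0, 4.0, 8.0, 12.0]
print(fraction_within(errors, 5.0))  # 4 of 6 errors are within 5 degrees
```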


3.2 Object insertion examples

3.2.1 Camera Elevation

For the following results, we allow the neural network to control the elevation angle (up-down axis) of the camera while performing a virtual object insertion. The camera's field of view was fixed at 60 degrees for these experiments.

Real elevation: -19 degrees

Real elevation: -11 degrees

Real elevation: 0 degrees

Real elevation: -17 degrees
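Because estimating camera elevation is analogous to finding the horizon (sec. 3.1), the two can be converted into one another once the field of view is known. The sketch below assumes an ideal pinhole camera with no roll, the principal point at the image centre, a vertical field of view, and positive elevation meaning the camera is tilted up (which pushes the horizon down in the image). The function names are hypothetical.

```python
import math


def horizon_row(elevation_deg, vfov_deg, height):
    """Image row (0 = top) where the horizon appears for a given camera elevation."""
    f = (height / 2.0) / math.tan(math.radians(vfov_deg) / 2.0)
    cy = (height - 1) / 2.0
    return cy + f * math.tan(math.radians(elevation_deg))


def elevation_from_horizon(row, vfov_deg, height):
    """Inverse mapping: camera elevation in degrees from the horizon row."""
    f = (height / 2.0) / math.tan(math.radians(vfov_deg) / 2.0)
    cy = (height - 1) / 2.0
    return math.degrees(math.atan((row - cy) / f))


# With a 60 degree field of view, a level camera puts the horizon at the image centre.
print(horizon_row(0.0, 60.0, 480))  # 239.5
```

Under these assumptions, tilting the camera by half the vertical field of view places the horizon at the image border, which is why large elevations can push the horizon out of frame.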

3.2.2 Camera Elevation and Field of View

We now present examples of virtual object insertion where the neural network has control over both the camera's elevation angle and field of view.

Real elevation: -16 degrees, FoV: 40 degrees

Real elevation: -16 degrees, FoV: 45 degrees

Real elevation: 0 degrees, FoV: 60 degrees

Real elevation: -1 degrees, FoV: 59 degrees

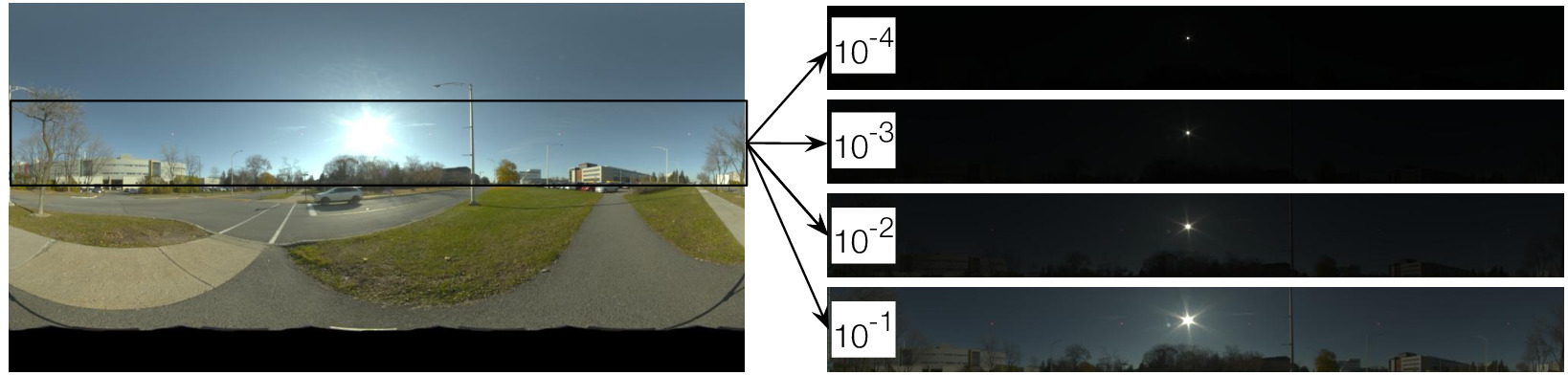

4. High dynamic range captures

In sec. 6.3, we described the process of HDR panorama capture. An excerpt of such a capture is shown in this section, displaying the full dynamic range of a single capture. While most pixels of an outdoor panorama lie in a relatively limited dynamic range, the pixels representing the sun require a scale of roughly 1:10,000 to be unsaturated. Accurately capturing these extremely intense pixels is essential for cast shadows and proper shading to appear in renderings.

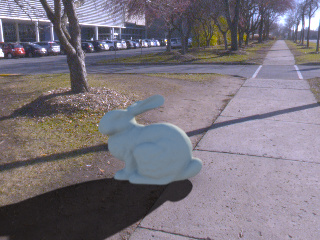

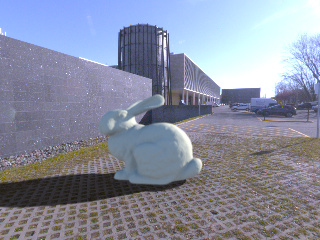
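A ratio of 1:10,000 corresponds to log2(10,000), or about 13.3 photographic stops, well beyond what a single 8-bit image can store. The sketch below illustrates this with a deliberately naive LDR conversion (scale, clip to [0, 1], gamma-encode, quantise). The gamma of 2.2 and the function names are assumptions for illustration; real cameras and JPEG pipelines use more complex response curves.

```python
import math

import numpy as np


def stops(ratio):
    """Dynamic range ratio expressed in photographic stops (powers of two)."""
    return math.log2(ratio)


def to_8bit(radiance, exposure=1.0, gamma=2.2):
    """Naive LDR conversion: scale, clip to [0, 1], gamma-encode, quantise to 8 bits."""
    v = np.clip(np.asarray(radiance, dtype=float) * exposure, 0.0, 1.0)
    return np.round(255.0 * v ** (1.0 / gamma)).astype(np.uint8)


print(stops(10_000))  # about 13.29 stops

# Sky at 0.5 and sun at 5000 (a 1:10,000 ratio): the sun clips to 255,
# so the information about its true intensity is lost.
print(to_8bit([0.5, 5000.0]))
```

This clipping is also why the 8-bit JPEG panoramas used in sec. 5 cannot by themselves recover the sun's true intensity.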

5. Virtual object insertion validation with HDR captures

In this section, we evaluate our CNN by comparing objects rendered under our estimated lighting (first column) against those rendered with captured HDR images (second column), extending the results of fig. 11. To create these results, we captured HDR panoramas (last column; see sec. 6.3 for details about our HDR capture methodology), converted them to 8-bit JPEGs, sampled limited field-of-view images from them, and used these images as inputs to our CNN. As can be seen from these results, our CNN faithfully reproduces the ground truth lighting conditions up to a scale factor, and in particular accurately recovers the direction of the sun (the estimated sun direction is also shown on the panoramas). Since our CNN is trained not on HDR data but on Hošek-Wilkie parameters fit to LDR data, we also evaluate the error introduced by this step. We do this by fitting the parameters of the Hošek-Wilkie model to the 8-bit JPEG panoramas and rendering objects under this illumination (third column). These results show that part of the error in lighting estimation (especially in terms of camera exposure) is caused by the LDR nature of the data.

Our method


Captured lighting

Hošek-Wilkie fit

Panorama
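Since the estimated lighting matches the captured lighting only up to a scale factor, a fair pixel-wise comparison of the renders would first remove that scale. A sketch of one such scale-invariant error is shown below: it finds the least-squares scale alpha = <e, r> / <e, e> that best aligns the estimate e to the reference r, then reports the RMSE after scaling. This metric and the function name are our own illustration; the comparisons in this section are qualitative and do not specify this computation.

```python
import numpy as np


def scale_invariant_rmse(estimate, reference):
    """RMSE between two renders after optimally rescaling the estimate.

    Returns (alpha, rmse), where alpha minimises ||alpha * estimate - reference||^2.
    """
    est = np.asarray(estimate, dtype=float).ravel()
    ref = np.asarray(reference, dtype=float).ravel()
    denom = np.dot(est, est)
    alpha = np.dot(est, ref) / denom if denom > 0 else 0.0
    rmse = np.sqrt(np.mean((alpha * est - ref) ** 2))
    return float(alpha), float(rmse)


# A render that is exactly 3x too dark is "correct up to scale": alpha = 3, rmse = 0.
print(scale_invariant_rmse([1.0, 2.0, 3.0], [3.0, 6.0, 9.0]))
```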