Photographic Algorithm, Winter 2015

Mahdieh Abbasi

Email: mahdieh.abbasi.1@ulaval.ca

Abstract

Image morphing is the process of continuously and smoothly changing one picture (e.g., a face) into another. In this assignment, we implemented a triangulation-based morphing algorithm. The two images are first warped into an intermediate shape, and the warped images are then cross-dissolved to produce a morphed image. We applied this algorithm to different images, such as human faces, animals, and objects.

Image Morphing

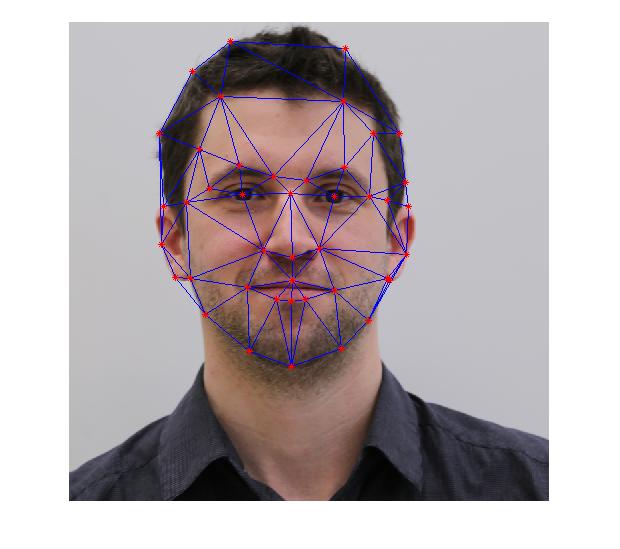

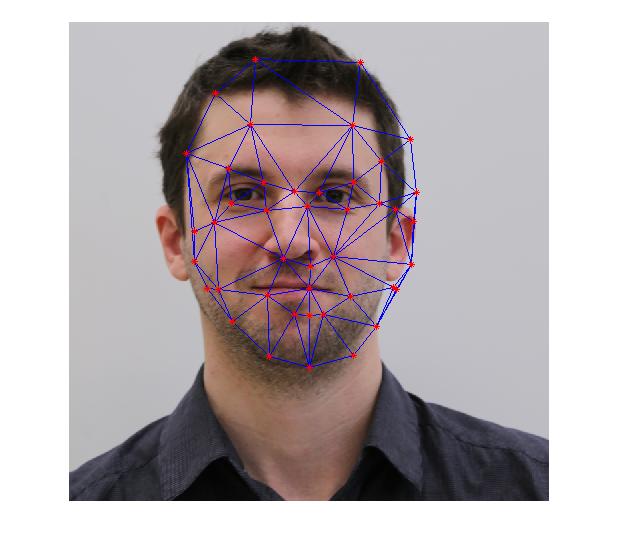

To change one image (the source) into another (the target), I first selected corresponding points in both images. These points define the corresponding parts of the two images, for example the positions of the nose, eyes, and mouth. I used these two sets of points (one for the source and one for the target) to compute a set of intermediate points. Then, by applying Matlab's delaunay function to the intermediate points, I generated a set of triangles that divides the images into parts (this process is called triangulation). The corresponding triangles are shown in the following images: red points are the correspondence points, and blue lines are the corresponding triangles. The third image shows the triangulation of the intermediate points, each of which is the average of the two corresponding points. Note that I used this single triangulation (computed on the intermediate points) throughout the whole morphing process.
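As a rough illustration only, the intermediate points can be written as a weighted average of the two point sets, (1 - t) * source + t * target. With t = 0.5 this gives the plain average used for the triangulation above. The sketch is in Python rather than the Matlab used for the assignment. The function name `interpolate_points` and the parameter `t` are hypothetical, and both point lists are assumed to be in the same (corresponding) order.

```python
def interpolate_points(src_pts, dst_pts, t=0.5):
    """Return the intermediate control points (1 - t) * src + t * dst.

    src_pts, dst_pts: lists of (x, y) tuples in corresponding order.
    t = 0.5 gives the average shape used for the triangulation.
    """
    if len(src_pts) != len(dst_pts):
        raise ValueError("both images need the same number of control points")
    return [((1 - t) * xs + t * xd, (1 - t) * ys + t * yd)
            for (xs, ys), (xd, yd) in zip(src_pts, dst_pts)]


# Toy example with three hypothetical correspondence points.
src = [(10.0, 20.0), (30.0, 40.0), (50.0, 20.0)]
dst = [(14.0, 22.0), (34.0, 48.0), (46.0, 18.0)]
mid = interpolate_points(src, dst)
print(mid)  # [(12.0, 21.0), (32.0, 44.0), (48.0, 19.0)]
```

In Python, the counterpart of Matlab's `delaunay` would be `scipy.spatial.Delaunay(mid).simplices`, which returns the vertex indices of each triangle. Because the triangulation is computed once on the intermediate points, the same index triples can be reused to pick the matching triangles in the source and target point lists.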

[Figure: Triangulation on source | Triangulation on target | Triangulation on intermediate points]

The next step is computing the affine transformation matrices. For each triangle of the intermediate image, I calculated two affine transformation matrices (T1 and T2). One relates it to its corresponding triangle in the source, and the other relates it to its corresponding triangle in the target. In total, I computed 2 × (number of triangles) affine transformation matrices. We defined wrap_frc, which varies from 0 to 1, to control the deformation between the source and target shapes. For example, for wrap_frc = 0 the intermediate image takes the geometry of the source image, and for wrap_frc = 1 it takes that of the target image.
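A minimal sketch of computing one such matrix, under some assumptions. T is taken to act on homogeneous column vectors [x; y; 1] and to map the triangle's source vertices onto its intermediate vertices, so that a source point is recovered with inv(T), as in the next paragraph. Let A and B be the 3×3 matrices whose columns are the source and intermediate vertices in homogeneous form. Then T = B * inv(A). The helper names (`inverse_3x3`, `matmul`, `affine_from_triangles`, `apply_affine`) are hypothetical, and the code is plain Python for illustration.

```python
def inverse_3x3(m):
    """Inverse of a 3x3 matrix (list of rows) via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("degenerate triangle (collinear vertices)")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def matmul(p, q):
    """Product of two 3x3 matrices given as lists of rows."""
    return [[sum(p[r][k] * q[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def affine_from_triangles(src_tri, dst_tri):
    """3x3 matrix T such that T @ [x, y, 1]^T maps each src vertex to its dst vertex."""
    a = [[p[0] for p in src_tri], [p[1] for p in src_tri], [1.0, 1.0, 1.0]]
    b = [[p[0] for p in dst_tri], [p[1] for p in dst_tri], [1.0, 1.0, 1.0]]
    return matmul(b, inverse_3x3(a))


def apply_affine(t, x, y):
    """Apply a 3x3 affine matrix to the point (x, y)."""
    return (t[0][0] * x + t[0][1] * y + t[0][2],
            t[1][0] * x + t[1][1] * y + t[1][2])


src_tri = [(0, 0), (1, 0), (0, 1)]
mid_tri = [(2, 3), (4, 3), (2, 6)]
T = affine_from_triangles(src_tri, mid_tri)
print(T)  # [[2.0, 0.0, 2.0], [0.0, 3.0, 3.0], [0.0, 0.0, 1.0]]
```

For each triangle, this would be called twice (once with the source triangle and once with the target triangle), giving the 2 × (number of triangles) matrices mentioned above.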

Next, I had to find the color (RGB) of each pixel in the intermediate image. Before that, we must determine which triangle each pixel belongs to, so that the pixel is transformed by the correct affine matrix. The triangle tells us where to look for the corresponding pixel in the source. Instead of using mytsearch.m, which was provided with the assignment, I used the poly2mask function to find all pixels of a triangle in the intermediate image at once and stored them in a matrix Y. This makes my algorithm much faster than a pixel-wise approach, because all corresponding pixels in the source image (called X) can be computed in one step as X = inv(T) * Y. The colors of all pixels in the intermediate image are then obtained from the source image with interp2, and I call this warped image I_1. I applied the same process to the target image to produce a second warped image, I_2. Finally, I cross-dissolved the two warped images (I_1 and I_2) with the diss_frc parameter to generate the final image.
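The pipeline in the paragraph above relies on Matlab's poly2mask and interp2. The following pure-Python sketch mimics it for one triangle of a grayscale image, purely for illustration. `pixels_in_triangle` is a simplified stand-in for poly2mask (its boundary handling is not identical). `bilinear` stands in for interp2 with linear interpolation, but clamps out-of-range points to the border, whereas interp2 returns NaN there by default. `warp_triangle` applies X = inv(T) * Y to every pixel of the triangle. All function names are hypothetical, images are lists of rows, and coordinates are 0-based (Matlab's are 1-based). For RGB images, the same sampling would be repeated per channel.

```python
import math


def pixels_in_triangle(tri, width, height):
    """Integer pixel coordinates (x, y) that lie inside or on the triangle."""
    (x1, y1), (x2, y2), (x3, y3) = tri

    def edge(ax, ay, bx, by, px, py):
        # Sign tells on which side of the edge a -> b the point p lies.
        return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

    xmin = max(0, math.floor(min(x1, x2, x3)))
    xmax = min(width - 1, math.ceil(max(x1, x2, x3)))
    ymin = max(0, math.floor(min(y1, y2, y3)))
    ymax = min(height - 1, math.ceil(max(y1, y2, y3)))
    inside = []
    for y in range(ymin, ymax + 1):
        for x in range(xmin, xmax + 1):
            e1 = edge(x1, y1, x2, y2, x, y)
            e2 = edge(x2, y2, x3, y3, x, y)
            e3 = edge(x3, y3, x1, y1, x, y)
            # Inside when all three signs agree (works for either vertex order).
            if (e1 >= 0 and e2 >= 0 and e3 >= 0) or (e1 <= 0 and e2 <= 0 and e3 <= 0):
                inside.append((x, y))
    return inside


def bilinear(img, x, y):
    """Bilinearly sample a grayscale image at real-valued (x, y), clamped to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def warp_triangle(src_img, out_img, tri_mid, t_inv):
    """Fill the pixels of out_img inside tri_mid by sampling src_img at X = T^-1 * Y."""
    h, w = len(out_img), len(out_img[0])
    for x, y in pixels_in_triangle(tri_mid, w, h):
        sx = t_inv[0][0] * x + t_inv[0][1] * y + t_inv[0][2]
        sy = t_inv[1][0] * x + t_inv[1][1] * y + t_inv[1][2]
        out_img[y][x] = bilinear(src_img, sx, sy)


src = [[x + 10 * y for x in range(4)] for y in range(4)]  # toy 4x4 image
out = [[0.0] * 4 for _ in range(4)]
shift = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # hypothetical T^-1
warp_triangle(src, out, [(0, 0), (2, 0), (0, 2)], shift)
print(out[1][1])  # 11.5
```

Collecting all pixels of a triangle first and then transforming them together is what makes the approach above faster than searching the triangle of each pixel one by one. In Matlab the transform becomes a single matrix product over all columns of Y.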

I varied diss_frc and wrap_frc to generate an animation (video). For an animation with 120 frames, wrap_frc is increased by 0.0083 (about 1/120) at each iteration. However, wrap_frc and diss_frc are not increased equally. As suggested in the assignment, the warping runs ahead and the images are dissolved afterwards, that is, diss_frc = wrap_frc - 5*0.0083 at each iteration. Note that for the first 6 frames diss_frc = 0, and for the last 6 frames diss_frc = 0. I want the first 6 frames to show how the source is warped toward the target; after these frames, cross-dissolving brings in the information from the target image. Therefore, in all experiments, I used diss_frc = wrap_frc - 5*0.0083 for frames 6 to 114. The result below uses Jean-François as the source image and Maxime as the target, with wrap_frc and diss_frc set as explained above.
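The frame schedule and the final blend can be sketched as follows. The sketch assumes that frames are numbered k = 0, ..., 119, that wrap_frc = k/120 (a step of about 0.0083), and that diss_frc is wrap_frc minus 5 steps, clamped at 0. This makes diss_frc = 0 for the first 6 frames. It is a hypothetical Python illustration: `frame_schedule`, `cross_dissolve`, and `lag` are not from the original Matlab code. It does not reproduce the special handling of the last 6 frames, and it blends grayscale images given as lists of rows (for RGB, apply it to each channel).

```python
def frame_schedule(n_frames=120, lag=5):
    """Return (wrap_frc, diss_frc) for each frame k = 0 .. n_frames - 1.

    wrap_frc grows by step = 1 / n_frames per frame. diss_frc trails it by
    `lag` steps and is clamped at 0, so it stays 0 for the first lag + 1 frames.
    """
    step = 1.0 / n_frames
    schedule = []
    for k in range(n_frames):
        wrap_frc = k * step
        diss_frc = max(0.0, wrap_frc - lag * step)
        schedule.append((wrap_frc, diss_frc))
    return schedule


def cross_dissolve(i1, i2, diss_frc):
    """Blend two warped images pixel by pixel: (1 - diss_frc) * I1 + diss_frc * I2."""
    return [[(1 - diss_frc) * a + diss_frc * b for a, b in zip(r1, r2)]
            for r1, r2 in zip(i1, i2)]


sched = frame_schedule()
print(sched[5][1], sched[6][1] > 0)  # 0.0 True
```

For each frame, wrap_frc would set the intermediate points used for the two warps, and diss_frc would then be passed to `cross_dissolve` together with the two warped images.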

[Figure: diss_frc = wrap_frc - 5*0.0083]

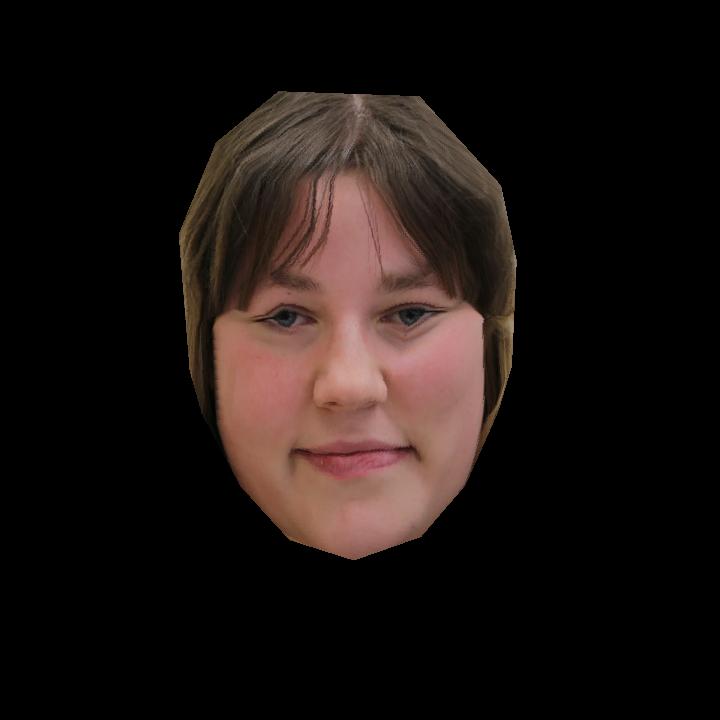

To improve my results, I included the background by adding the four image corners and the midpoints of the four border lines as control points. In other words, 8 points were added to the previous 43 control points. I tested the algorithm on two images (Mahdieh and Claudia) with different face poses. I think that adding 4 extra correspondence points for the eyes (2 for the corners of the left eye and 2 for the corners of the right eye) would improve the results further, since eye size differs from person to person.
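A sketch of generating these 8 extra control points for an image of a given size. It assumes 0-based pixel coordinates (Matlab's are 1-based), and it assumes that the same points, in the same order, are appended to both the source and the target point lists so that they correspond. The name `border_points` is hypothetical.

```python
def border_points(width, height):
    """Four image corners plus the midpoint of each border (8 points, 0-based)."""
    w, h = width - 1, height - 1
    return [
        (0, 0), (w / 2, 0), (w, 0),   # top border: left corner, midpoint, right corner
        (w, h / 2),                   # right border midpoint
        (w, h), (w / 2, h), (0, h),   # bottom border: right corner, midpoint, left corner
        (0, h / 2),                   # left border midpoint
    ]


extra = border_points(640, 480)
print(len(extra))  # 8
print(extra[:3])   # [(0, 0), (319.5, 0), (639, 0)]
```

Appended to the 43 facial points, these give 51 control points per image. If the source and target have different sizes, each image gets its own border points, but they still correspond one to one.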

[Figure: Source image | Target image | Morphed image]

Mean Face

The steps for computing the mean face are:

[Figure: Mean face of all students' faces | Mean face of women's faces]
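One common way to compute a mean face is given here as an assumption, not as the exact procedure of this assignment. First, average the corresponding control points of all faces to obtain a mean shape. Second, warp every face to that mean shape with the triangle-based warp. Third, average the warped images pixel by pixel. The hypothetical helpers below (`mean_shape`, `mean_image`) cover only the first and third steps. They assume that every face is annotated with the same number of points in the same order and that images are grayscale lists of rows of equal size.

```python
def mean_shape(shapes):
    """Average corresponding control points over several faces.

    shapes: list of point lists, all with the same length and the same point order.
    """
    n = len(shapes)
    if n == 0:
        raise ValueError("need at least one face")
    count = len(shapes[0])
    if any(len(s) != count for s in shapes):
        raise ValueError("every face must have the same number of control points")
    return [(sum(s[i][0] for s in shapes) / n, sum(s[i][1] for s in shapes) / n)
            for i in range(count)]


def mean_image(images):
    """Pixel-wise average of images already warped to the mean shape."""
    n = len(images)
    # zip(*images) walks row by row; zip(*rows) walks pixel by pixel within a row.
    return [[sum(pixels) / n for pixels in zip(*rows)] for rows in zip(*images)]


faces = [[(0, 0), (2, 4)], [(2, 2), (4, 0)]]  # two toy faces with 2 points each
print(mean_shape(faces))  # [(1.0, 1.0), (3.0, 2.0)]
```

Averaging only the warped images matters. Averaging the original, unaligned faces would blur features that sit at different positions in different photos.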

After computing the mean face of the women, I tested my algorithm by morphing Michael's face and Maxime's face into it.

[Figure: Original | Warped to the mean face of women | Morphed into the mean face]

Below, some interesting faces are shown warped to the mean face of all students. As you can see, the warped faces look like caricatures.

[Figure: Caricature faces | Original faces]

Extra Results

Other results were obtained by applying the triangle-based morphing algorithm to different faces. I know fairly well how to select correspondence points for faces (thanks to TP3 :) ), and the two faces in each pair have the same pose, so my results for the faces are good and reasonable. For all the face images, I chose 51 points (43 on the face and 8 on the corners and borders). The exception is the squash pair, for which I took 38 control points from each image (squash and racket).

[Figure: Spiderman | Transformer | morphed]

Discussion

In my view, the hardest part of the algorithm is finding the correspondence points, especially when the images contain many details. In such cases, the correspondence points must be selected densely. The algorithm also fails when some information appears in one image (source or target) but is missing from the other because of a difference in pose. For instance, it fails when the source shows the full face while the target shows only half of it. Because of the file-size limit for uploads on Pixel, I had to reduce the size of my GIFs, so their quality is reduced as well.