The purpose of this homework is to compute the homography that maps each image onto the base image and then to stitch all the mapped images together into a single panoramic scene. The homework consists of two parts. Part 1 uses manually selected correspondence points to compute the homography 'H'. In Part 2, 'H' is determined by an automatic feature-point matching procedure.

Part 1: Manual Matching

The stitching process contains several steps (see the code 'TP4.m'):

(1) The first step is to manually select the correspondences between the images (see the code 'features_matching.m'). The homography used for the projection is a 3x3 matrix defined only up to scale, so it has 8 free parameters. Each correspondence contributes 2 equations, so at least 4 correspondences are required to compute the homography 'H'.

(2) The second step is to compute the homography that will project each image onto the standard base image (see the code 'compute_homography.m'). The parameters in 'H' are determined by solving a system of 2*N linear equations in 8 unknowns, where N is the number of correspondences.
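As a minimal sketch of this linear system (written in Python rather than MATLAB, and not taken from 'compute_homography.m'), the example below fixes the last entry of 'H' to 1 and stacks two equations per correspondence. The function names, the Gaussian-elimination helper, and the use of normal equations when N > 4 are assumptions made for illustration.

```python
def solve_linear(M, v):
    """Solve the square system M x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(map(float, M[i])) + [float(v[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (singular system)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def compute_homography(src, dst):
    """Estimate the 3x3 H (with H[2][2] = 1) mapping src points to dst points."""
    if len(src) < 4 or len(src) != len(dst):
        raise ValueError("need at least 4 correspondences")
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h1 x + h2 y + h3) / (h7 x + h8 y + 1), and likewise for v
        rows.append([x, y, 1, 0, 0, 0, -x * u, -y * u]); rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -x * v, -y * v]); rhs.append(v)
    if len(rows) == 8:
        h = solve_linear(rows, rhs)  # exactly determined: 8 equations, 8 unknowns
    else:
        # Assumption: overdetermined case solved via the normal equations A^T A h = A^T b
        AtA = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
        Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(8)]
        h = solve_linear(AtA, Atb)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, x, y):
    """Project the point (x, y) through H and divide by the homogeneous coordinate."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)
```

Calling `compute_homography` with exactly 4 point pairs solves the 8x8 system directly; with more pairs it falls back to a least-squares fit.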

(3) The third step is to project the input image into the space of the base image (see the code 'warp_image.m'). Specifically, the input image is first mapped into the space of the base image using the 'H' computed in the previous step; then the inverse of 'H' is used to look up the color of each mapped pixel in the input image.
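The inverse lookup can be sketched as follows, in Python rather than the MATLAB of 'warp_image.m'. The image is assumed to be a grayscale list of rows, the output canvas size is passed in by the caller, and bilinear interpolation is an assumed choice; none of these details come from the homework code.

```python
def invert_3x3(H):
    """Invert a 3x3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = H
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("H is singular")
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


def bilinear(img, x, y):
    """Interpolate img at the real-valued position (x, y), assumed inside the image."""
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, len(img[0]) - 1), min(y0 + 1, len(img) - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp_image(img, H, out_w, out_h):
    """For every output pixel, map back through H^-1 and sample the input image."""
    Hinv = invert_3x3(H)
    src_h, src_w = len(img), len(img[0])
    out = [[None] * out_w for _ in range(out_h)]  # None marks pixels with no source
    for v in range(out_h):
        for u in range(out_w):
            w = Hinv[2][0] * u + Hinv[2][1] * v + Hinv[2][2]
            if abs(w) < 1e-12:
                continue
            x = (Hinv[0][0] * u + Hinv[0][1] * v + Hinv[0][2]) / w
            y = (Hinv[1][0] * u + Hinv[1][1] * v + Hinv[1][2]) / w
            if 0 <= x <= src_w - 1 and 0 <= y <= src_h - 1:
                out[v][u] = bilinear(img, x, y)
    return out
```

Looping over output pixels rather than input pixels avoids holes in the warped image, which is the reason for going through the inverse of 'H'.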

(4) The final step is to blend the warped images (see the code 'blend_image.m'). Since all the projected images now lie in the same space as the base image, we blend their colors together to form the final panoramic image.
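The report does not say which blending rule 'blend_image.m' uses, so the Python sketch below assumes the simplest one: average every pixel over the images that cover it. The layers are assumed to be same-sized grids in the base image space, with `None` marking pixels an image does not cover.

```python
def blend_images(layers):
    """Average the overlapping pixels of same-sized layers; None means 'no data'."""
    height, width = len(layers[0]), len(layers[0][0])
    out = [[None] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            values = [layer[r][c] for layer in layers if layer[r][c] is not None]
            if values:
                out[r][c] = sum(values) / len(values)
    return out
```

Plain averaging can leave visible seams where exposure differs between images; weighting each pixel by its distance from the image border is a common refinement.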

Part 2: Automatic Matching

The automatic correspondence detection process (see the code 'TP4_auto.m') follows the same projection steps except that all the correspondences are identified automatically.

(1) The first step is to detect the Harris corners that are used as the candidate points for the correspondences. Because the detector returns a large number of corners, the set must be reduced. We apply adaptive non-maximal suppression to choose a subset of the Harris corners (see the code 'adaptive_non_maximal_suppression.m'). Specifically, the suppression radius of each Harris point is the minimum distance from that point to any other point with a higher corner strength. The points are ordered by suppression radius, and we keep the 500 points with the largest radii.
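A brute-force Python sketch of this selection rule is given below. It is not the code in 'adaptive_non_maximal_suppression.m'; the `(x, y, strength)` tuple layout and the function signature are assumptions.

```python
import math


def adaptive_non_maximal_suppression(corners, n_keep=500):
    """Keep the n_keep corners with the largest suppression radius.

    corners: list of (x, y, strength) tuples.
    """
    radii = []
    for xi, yi, si in corners:
        radius = math.inf  # the globally strongest corner is never suppressed
        for xj, yj, sj in corners:
            if sj > si:
                radius = min(radius, math.hypot(xi - xj, yi - yj))
        radii.append(radius)
    order = sorted(range(len(corners)), key=lambda k: radii[k], reverse=True)
    return [corners[k] for k in order[:n_keep]]
```

Compared with simply keeping the 500 strongest corners, this ordering favors points that are spread out across the image. The double loop is quadratic in the number of corners, which is acceptable for a few thousand points.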

(2) The second step is to extract feature descriptors (see the code 'extract_feature_descriptors.m'). Each candidate point is represented by an 8x8 feature descriptor, obtained by sampling a 40x40 pixel window centered on the point every 5 pixels. The descriptor is then normalized to zero mean and unit standard deviation.
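The sampling and normalization can be sketched in Python as below. The grayscale list-of-rows image, the exact placement of the window around the center, and returning the descriptor as a flat list of 64 values are assumptions, not details taken from 'extract_feature_descriptors.m'.

```python
import math


def extract_descriptor(img, cx, cy, window=40, step=5):
    """Sample a window x window patch around (cx, cy) every `step` pixels,
    then normalize the samples to zero mean and unit standard deviation.
    Returns the 8x8 descriptor flattened row by row (64 values)."""
    half = window // 2
    top, left = cy - half, cx - half
    last = window - step  # offset of the last sample along each axis
    if top < 0 or left < 0 or top + last >= len(img) or left + last >= len(img[0]):
        raise ValueError("point is too close to the image border")
    samples = [img[top + dy][left + dx]
               for dy in range(0, window, step)
               for dx in range(0, window, step)]
    mean = sum(samples) / len(samples)
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
    if std == 0:
        return [0.0] * len(samples)  # flat patch: nothing to normalize
    return [(s - mean) / std for s in samples]
```

The normalization makes the descriptor insensitive to a gain and offset change in brightness between the two images.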

(3) The third step is to identify the matching pairs between two images (see the code 'match_feature.m'). The similarity between two points in the respective images is measured by the sum of squared errors between their feature descriptors. For each point, the candidate with the lowest error is considered for matching. The ratio between the lowest and second-lowest errors is then computed and compared with a threshold; pairs whose ratio is below the threshold are accepted as matching points.
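A Python sketch of the ratio test follows. The report does not state the threshold value, so the default of 0.6 is only a placeholder, and the function names are illustrative rather than those in 'match_feature.m'.

```python
def ssd(a, b):
    """Sum of squared differences between two equal-length descriptors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def match_features(desc_a, desc_b, ratio_threshold=0.6):
    """Return index pairs (i, j) whose best/second-best error ratio is below the threshold.

    ratio_threshold=0.6 is an assumed placeholder, not a value from the report.
    """
    matches = []
    for i, da in enumerate(desc_a):
        errors = sorted((ssd(da, db), j) for j, db in enumerate(desc_b))
        if len(errors) < 2:
            continue  # the ratio needs a second-best candidate
        (best, j), (second, _) = errors[0], errors[1]
        if second > 0 and best / second < ratio_threshold:
            matches.append((i, j))
    return matches
```

A point whose two best candidates have nearly the same error gets a ratio close to 1 and is rejected as ambiguous, even if its lowest error is small.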

(4) The last step uses RANSAC to remove the outliers from the candidate correspondences (see the code 'ransac_homography.m'). Specifically, we first randomly select 4 correspondences from all the candidates and use these 4 pairs to calculate the homography 'H'. Then all the candidates are projected onto the base image and the number of outliers is counted. We repeat the process 1000 times, keeping track of the 'H' with the fewest outliers. Having found the best 'H', all the candidates are mapped onto the base image again to locate the inliers, which are used as the final correspondences.
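The loop itself can be sketched in Python as below. To keep the example short and runnable on its own, the model is passed in as two callables, `fit` and `project`, and a toy translation model stands in for the 4-point homography solver. The inlier tolerance of 3 pixels, the callable interface, and all names are assumptions rather than details of 'ransac_homography.m'.

```python
import math
import random


def ransac(pairs, fit, project, sample_size=4, iterations=1000, tol=3.0, rng=None):
    """Return (best_model, inliers) found over `iterations` random minimal samples.

    pairs:   list of (point_in_input, point_in_base) correspondences
    fit:     builds a model from `sample_size` pairs; may raise ValueError
    project: maps an input point into the base image with a given model
    tol:     assumed inlier distance threshold in pixels
    """
    rng = rng or random.Random()
    best_model, best_inliers = None, []
    for _ in range(iterations):
        sample = rng.sample(pairs, sample_size)
        try:
            model = fit(sample)
        except ValueError:
            continue  # degenerate sample, e.g. collinear points
        inliers = []
        for p, q in pairs:
            px, py = project(model, p)
            if math.hypot(px - q[0], py - q[1]) <= tol:
                inliers.append((p, q))
        # Fewest outliers is the same as most inliers.
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers


# Toy stand-in model (pure translation) used only to exercise the loop.
def fit_translation(sample):
    p, q = sample[0]
    return (q[0] - p[0], q[1] - p[1])


def project_translation(model, p):
    return (p[0] + model[0], p[1] + model[1])
```

For the procedure described above, `fit` would be a homography solver called with `sample_size=4` and `iterations=1000`, and `project` would apply the resulting 'H' to a point.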

Part 1:

The result of panorama 1:

The result of panorama 2:

The result of panorama 3:

The problem with my implementation is that in none of the three cases can I achieve the desired number of stitches. I cannot prevent the stitched image from getting larger and larger, and eventually the computation runs out of memory.

Part 2:

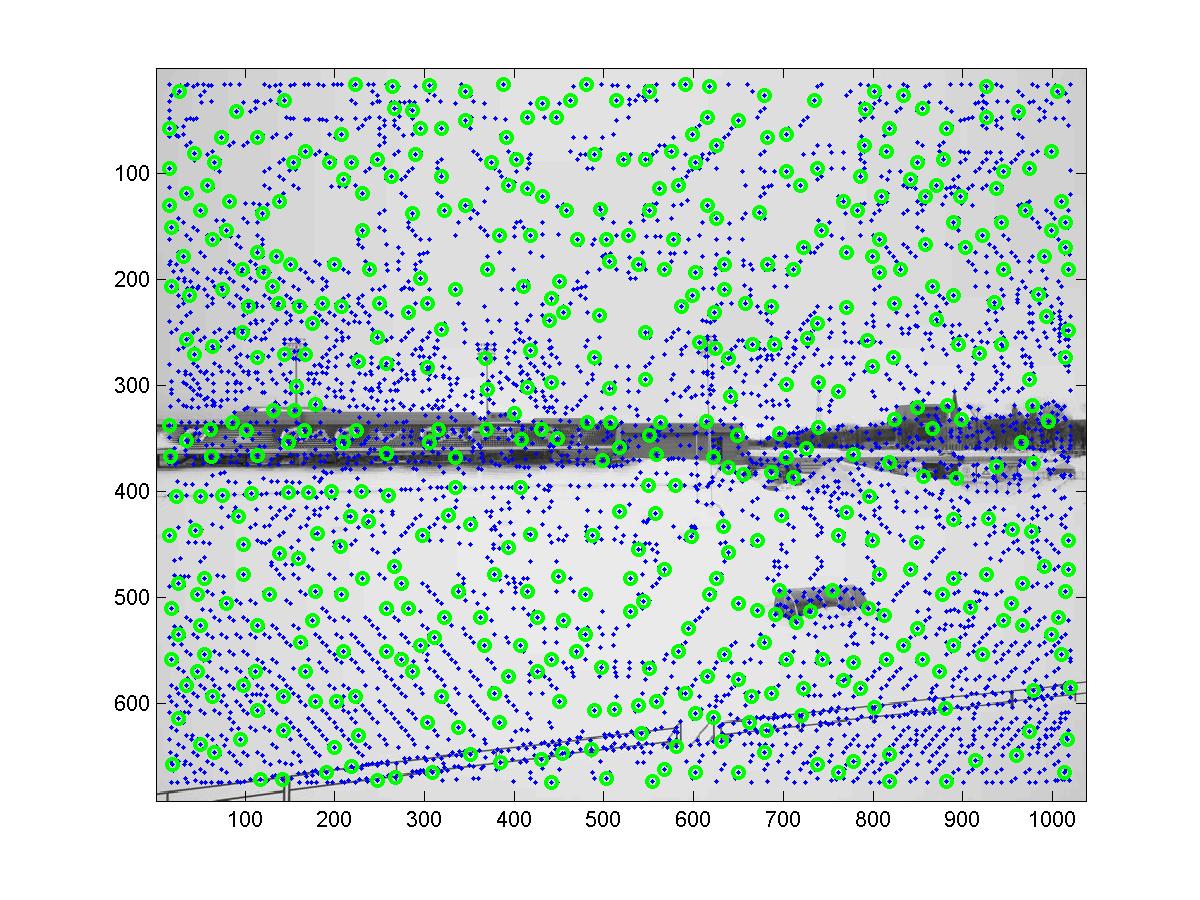

Adaptive non-maximal suppression: (left) full and suppressed corner sets for the base image; (right) full and suppressed corner sets for the input image

Extract feature descriptors: (left) an example descriptor in the base image, unnormalized and normalized; (right) the corresponding descriptor in the input image, unnormalized and normalized

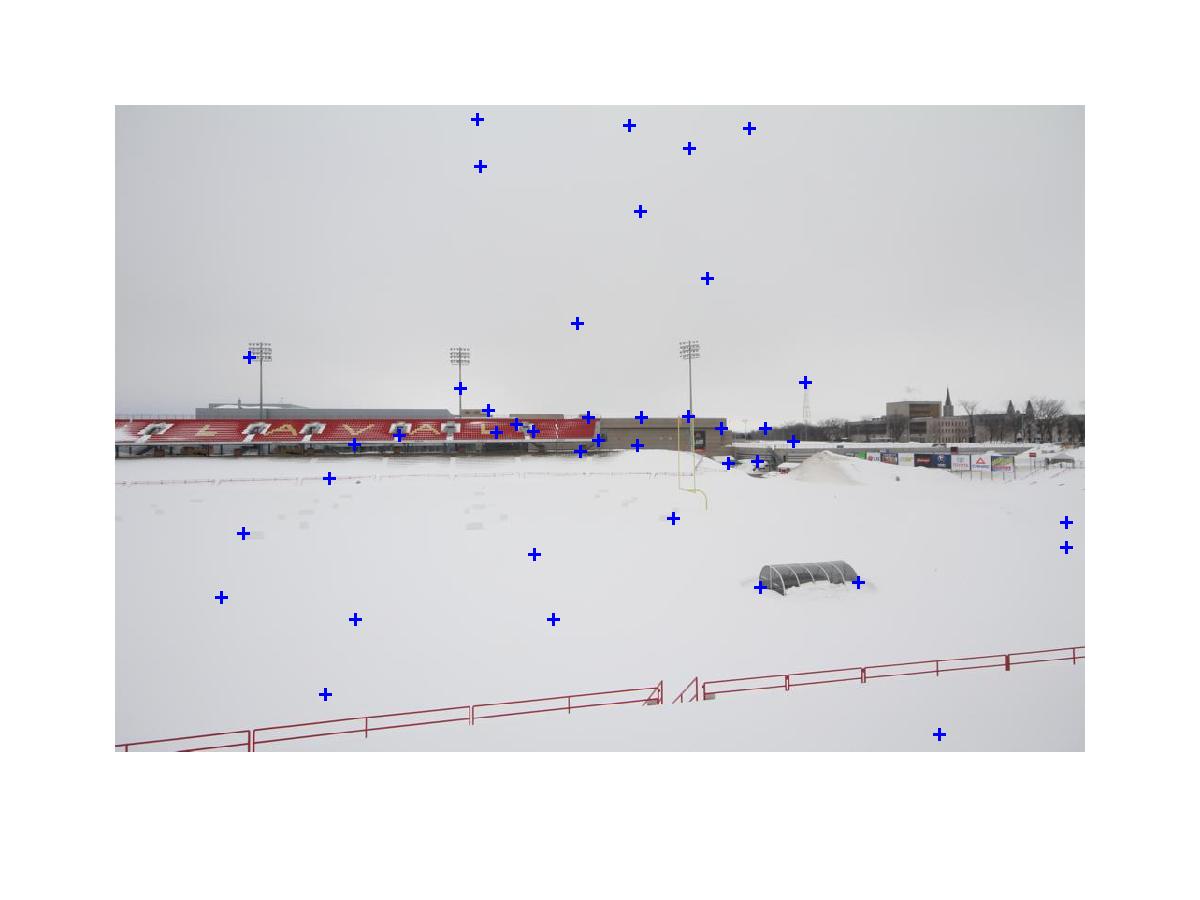

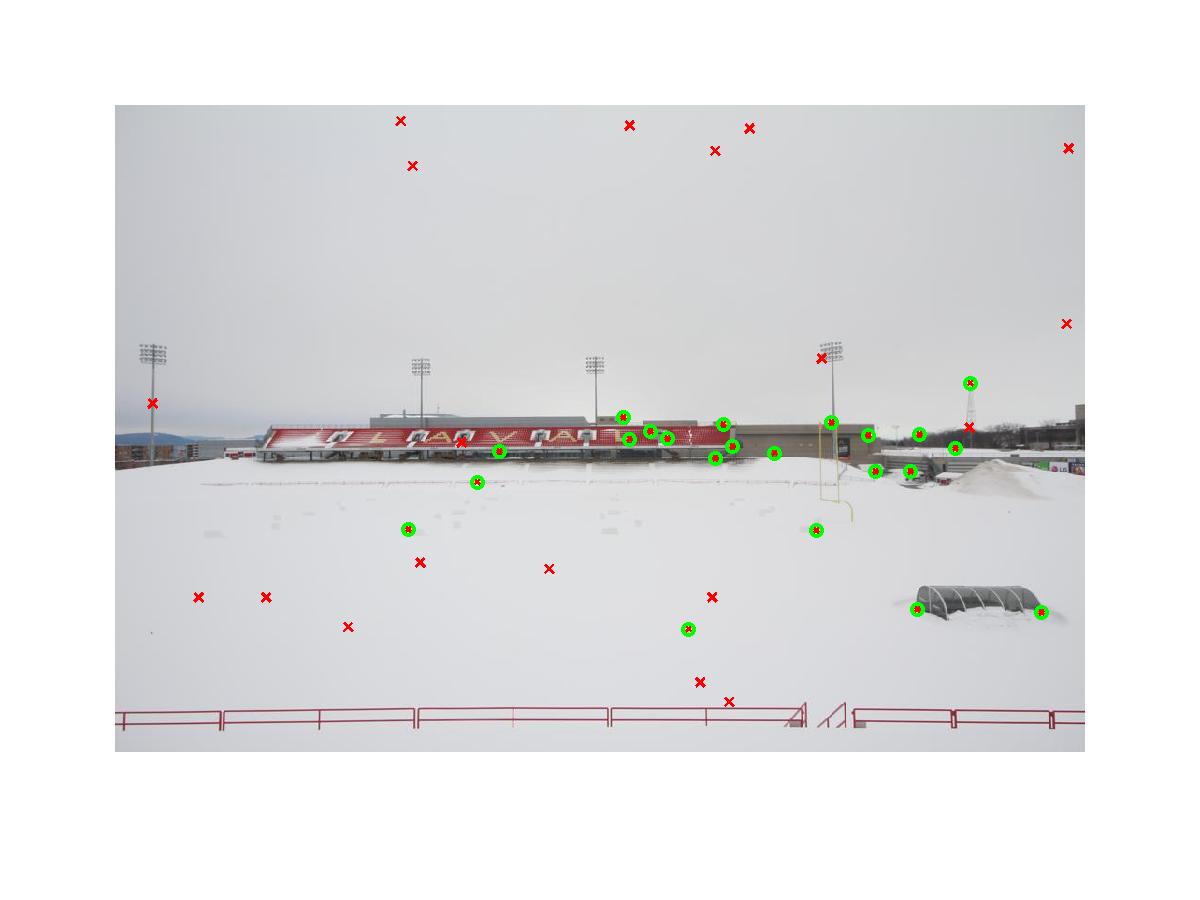

Identify matches between both images: (left) the candidate points in the base image; (right) the corresponding candidate points in the input image

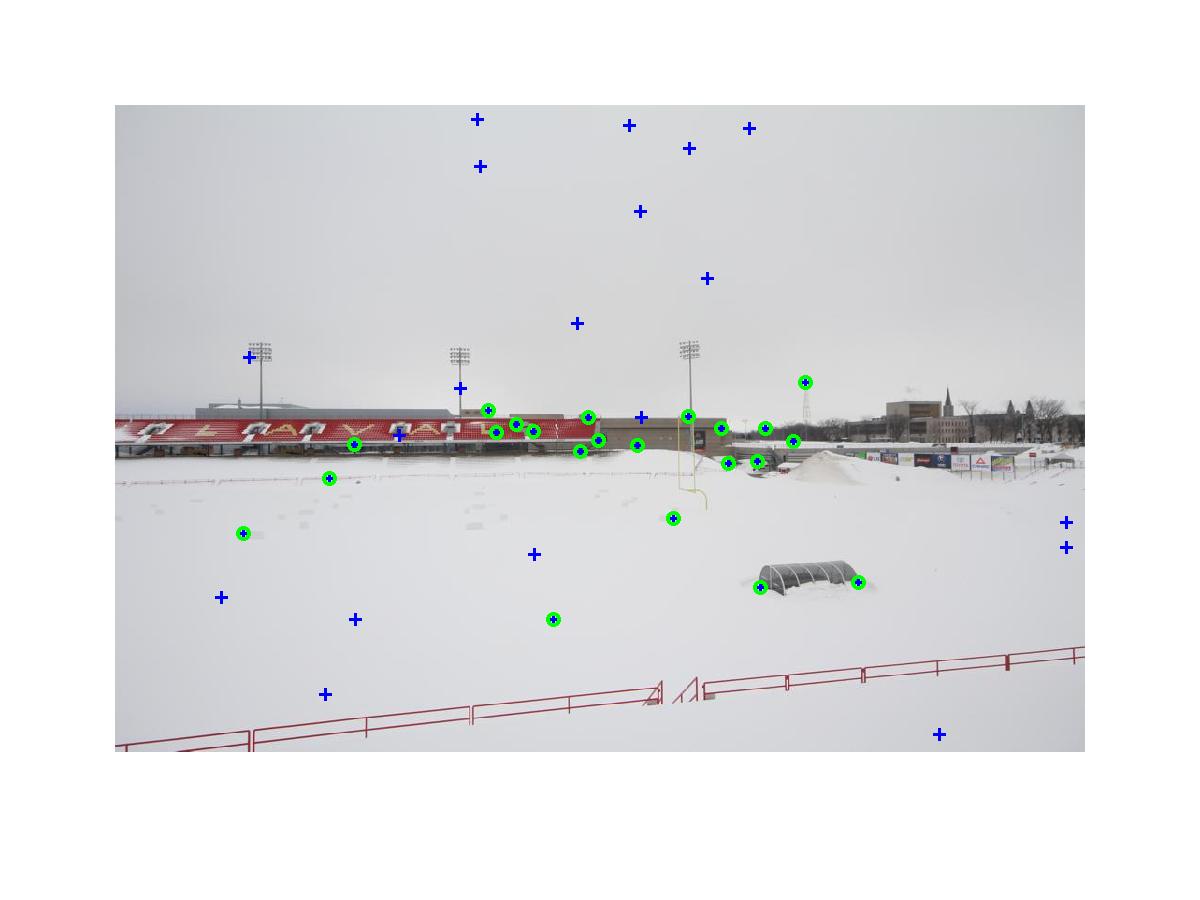

Identify matches between both images: (left) the candidate points in the base image after removing the outliers; (right) the corresponding candidate points in the input image after removing the outliers

(1) The selected image.

(Pavillon Dejartin):